mirror of

https://github.com/dbt-labs/dbt-core

synced 2025-12-17 19:31:34 +00:00

Compare commits

136 Commits

leahwicz-p

...

performanc

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

1fe53750fa | ||

|

|

8609c02383 | ||

|

|

355b0c496e | ||

|

|

cd6894acf4 | ||

|

|

b90b3a9c19 | ||

|

|

06cc0c57e8 | ||

|

|

87072707ed | ||

|

|

ef63319733 | ||

|

|

2068dd5510 | ||

|

|

3e1e171c66 | ||

|

|

5f9ed1a83c | ||

|

|

3d9e54d970 | ||

|

|

52a0fdef6c | ||

|

|

d9b02fb0a0 | ||

|

|

6c8de62b24 | ||

|

|

2d3d1b030a | ||

|

|

88acf0727b | ||

|

|

02839ec779 | ||

|

|

44a8f6a3bf | ||

|

|

751ea92576 | ||

|

|

02007b3619 | ||

|

|

fe0b9e7ef5 | ||

|

|

4b1c6b51f9 | ||

|

|

0b4689f311 | ||

|

|

b77eff8f6f | ||

|

|

2782a33ecf | ||

|

|

94c6cf1b3c | ||

|

|

3c8daacd3e | ||

|

|

2f9907b072 | ||

|

|

287c4d2b03 | ||

|

|

ba9d76b3f9 | ||

|

|

486afa9fcd | ||

|

|

1f189f5225 | ||

|

|

580b1fdd68 | ||

|

|

bad0198a36 | ||

|

|

252280b56e | ||

|

|

64bf9c8885 | ||

|

|

935c138736 | ||

|

|

5891b59790 | ||

|

|

4e020c3878 | ||

|

|

3004969a93 | ||

|

|

873e9714f8 | ||

|

|

fe24dd43d4 | ||

|

|

ed91ded2c1 | ||

|

|

757614d57f | ||

|

|

faff8c00b3 | ||

|

|

80244a09fe | ||

|

|

37e86257f5 | ||

|

|

c182c05c2f | ||

|

|

b02875a12b | ||

|

|

03332b2955 | ||

|

|

f1f99a2371 | ||

|

|

95116dbb5b | ||

|

|

868fd64adf | ||

|

|

2f7ab2d038 | ||

|

|

3d4a82cca2 | ||

|

|

6ba837d73d | ||

|

|

f4775d7673 | ||

|

|

429396aa02 | ||

|

|

8a5e9b71a5 | ||

|

|

fa78102eaf | ||

|

|

5466d474c5 | ||

|

|

80951ae973 | ||

|

|

d5662ef34c | ||

|

|

45bb955b55 | ||

|

|

4ddba7e44c | ||

|

|

37b31d10c8 | ||

|

|

c8bc25d11a | ||

|

|

4c06689ff5 | ||

|

|

a45c9d0192 | ||

|

|

34e2c4f90b | ||

|

|

c0e2023c81 | ||

|

|

108b55bdc3 | ||

|

|

a29367b7fe | ||

|

|

1d7e8349ed | ||

|

|

75d3d87d64 | ||

|

|

4ff3f6d4e8 | ||

|

|

d0773f3346 | ||

|

|

ee58d27d94 | ||

|

|

9e3da391a7 | ||

|

|

9f62ec2153 | ||

|

|

372eca76b8 | ||

|

|

e3cb050bbc | ||

|

|

0ae93c7f54 | ||

|

|

1f6386d760 | ||

|

|

66eb3964e2 | ||

|

|

f460d275ba | ||

|

|

fb91bad800 | ||

|

|

eaec22ae53 | ||

|

|

b7c1768cca | ||

|

|

387b26a202 | ||

|

|

8a1e6438f1 | ||

|

|

aaac5ff2e6 | ||

|

|

4dc29630b5 | ||

|

|

f716631439 | ||

|

|

648a780850 | ||

|

|

de0919ff88 | ||

|

|

8b1ea5fb6c | ||

|

|

85627aafcd | ||

|

|

49065158f5 | ||

|

|

bdb3049218 | ||

|

|

e10d1b0f86 | ||

|

|

83b98c8ebf | ||

|

|

b9d5123aa3 | ||

|

|

c09300bfd2 | ||

|

|

fc490cee7b | ||

|

|

3baa3d7fe8 | ||

|

|

764c7c0fdc | ||

|

|

c97ebbbf35 | ||

|

|

85fe32bd08 | ||

|

|

eba3fd2255 | ||

|

|

e2f2c07873 | ||

|

|

70850cd362 | ||

|

|

16992e6391 | ||

|

|

fd0d95140e | ||

|

|

ac65fcd557 | ||

|

|

4d246567b9 | ||

|

|

1ad1c834f3 | ||

|

|

41610b822c | ||

|

|

c794600242 | ||

|

|

9d414f6ec3 | ||

|

|

552e831306 | ||

|

|

c712c96a0b | ||

|

|

eb46bfc3d6 | ||

|

|

f52537b606 | ||

|

|

762419d2fe | ||

|

|

4feb7cb15b | ||

|

|

eb47b85148 | ||

|

|

9faa019a07 | ||

|

|

9589dc91fa | ||

|

|

14507a283e | ||

|

|

af0fe120ec | ||

|

|

16501ec1c6 | ||

|

|

bf867f6aff | ||

|

|

eb4ad4444f | ||

|

|

8fdba17ac6 |

@@ -1,23 +1,27 @@

|

||||

[bumpversion]

|

||||

current_version = 0.20.0rc1

|

||||

current_version = 0.21.0a1

|

||||

parse = (?P<major>\d+)

|

||||

\.(?P<minor>\d+)

|

||||

\.(?P<patch>\d+)

|

||||

((?P<prerelease>[a-z]+)(?P<num>\d+))?

|

||||

((?P<prekind>a|b|rc)

|

||||

(?P<pre>\d+) # pre-release version num

|

||||

)?

|

||||

serialize =

|

||||

{major}.{minor}.{patch}{prerelease}{num}

|

||||

{major}.{minor}.{patch}{prekind}{pre}

|

||||

{major}.{minor}.{patch}

|

||||

commit = False

|

||||

tag = False

|

||||

|

||||

[bumpversion:part:prerelease]

|

||||

[bumpversion:part:prekind]

|

||||

first_value = a

|

||||

optional_value = final

|

||||

values =

|

||||

a

|

||||

b

|

||||

rc

|

||||

final

|

||||

|

||||

[bumpversion:part:num]

|

||||

[bumpversion:part:pre]

|

||||

first_value = 1

|

||||

|

||||

[bumpversion:file:setup.py]

|

||||

@@ -26,6 +30,8 @@ first_value = 1

|

||||

|

||||

[bumpversion:file:core/dbt/version.py]

|

||||

|

||||

[bumpversion:file:core/scripts/create_adapter_plugins.py]

|

||||

|

||||

[bumpversion:file:plugins/postgres/setup.py]

|

||||

|

||||

[bumpversion:file:plugins/redshift/setup.py]

|

||||

@@ -41,4 +47,3 @@ first_value = 1

|

||||

[bumpversion:file:plugins/snowflake/dbt/adapters/snowflake/__version__.py]

|

||||

|

||||

[bumpversion:file:plugins/bigquery/dbt/adapters/bigquery/__version__.py]

|

||||

|

||||

|

||||

29

.github/ISSUE_TEMPLATE/minor-version-release.md

vendored

Normal file

29

.github/ISSUE_TEMPLATE/minor-version-release.md

vendored

Normal file

@@ -0,0 +1,29 @@

|

||||

---

|

||||

name: Minor version release

|

||||

about: Creates a tracking checklist of items for a minor version release

|

||||

title: "[Tracking] v#.##.# release "

|

||||

labels: ''

|

||||

assignees: ''

|

||||

|

||||

---

|

||||

|

||||

### Release Core

|

||||

- [ ] [Engineering] dbt-release workflow

|

||||

- [ ] [Engineering] Create new protected `x.latest` branch

|

||||

- [ ] [Product] Finalize migration guide (next.docs.getdbt.com)

|

||||

|

||||

### Release Cloud

|

||||

- [ ] [Engineering] Create a platform issue to update dbt Cloud and verify it is completed

|

||||

- [ ] [Engineering] Determine if schemas have changed. If so, generate new schemas and push to schemas.getdbt.com

|

||||

|

||||

### Announce

|

||||

- [ ] [Product] Publish discourse

|

||||

- [ ] [Product] Announce in dbt Slack

|

||||

|

||||

### Post-release

|

||||

- [ ] [Engineering] [Bump plugin versions](https://www.notion.so/fishtownanalytics/Releasing-b97c5ea9a02949e79e81db3566bbc8ef#59571f5bc1a040d9a8fd096e23d2c7db) (dbt-spark + dbt-presto), add compatibility as needed

|

||||

- [ ] Spark

|

||||

- [ ] Presto

|

||||

- [ ] [Engineering] Create a platform issue to update dbt-spark versions to dbt Cloud

|

||||

- [ ] [Product] Release new version of dbt-utils with new dbt version compatibility. If there are breaking changes requiring a minor version, plan upgrades of other packages that depend on dbt-utils.

|

||||

- [ ] [Engineering] If this isn't a final release, create an epic for the next release

|

||||

181

.github/workflows/performance.yml

vendored

Normal file

181

.github/workflows/performance.yml

vendored

Normal file

@@ -0,0 +1,181 @@

|

||||

|

||||

name: Performance Regression Testing

|

||||

# Schedule triggers

|

||||

on:

|

||||

# TODO this is just while developing

|

||||

pull_request:

|

||||

branches:

|

||||

- 'develop'

|

||||

- 'performance-regression-testing'

|

||||

schedule:

|

||||

# runs twice a day at 10:05am and 10:05pm

|

||||

- cron: '5 10,22 * * *'

|

||||

# Allows you to run this workflow manually from the Actions tab

|

||||

workflow_dispatch:

|

||||

|

||||

jobs:

|

||||

|

||||

# checks fmt of runner code

|

||||

# purposefully not a dependency of any other job

|

||||

# will block merging, but not prevent developing

|

||||

fmt:

|

||||

name: Cargo fmt

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- uses: actions/checkout@v2

|

||||

- uses: actions-rs/toolchain@v1

|

||||

with:

|

||||

profile: minimal

|

||||

toolchain: stable

|

||||

override: true

|

||||

- run: rustup component add rustfmt

|

||||

- uses: actions-rs/cargo@v1

|

||||

with:

|

||||

command: fmt

|

||||

args: --manifest-path performance/runner/Cargo.toml --all -- --check

|

||||

|

||||

# runs any tests associated with the runner

|

||||

# these tests make sure the runner logic is correct

|

||||

test-runner:

|

||||

name: Test Runner

|

||||

runs-on: ubuntu-latest

|

||||

env:

|

||||

# turns errors into warnings

|

||||

RUSTFLAGS: "-D warnings"

|

||||

steps:

|

||||

- uses: actions/checkout@v2

|

||||

- uses: actions-rs/toolchain@v1

|

||||

with:

|

||||

profile: minimal

|

||||

toolchain: stable

|

||||

override: true

|

||||

- uses: actions-rs/cargo@v1

|

||||

with:

|

||||

command: test

|

||||

args: --manifest-path performance/runner/Cargo.toml

|

||||

|

||||

# build an optimized binary to be used as the runner in later steps

|

||||

build-runner:

|

||||

needs: [test-runner]

|

||||

name: Build Runner

|

||||

runs-on: ubuntu-latest

|

||||

env:

|

||||

RUSTFLAGS: "-D warnings"

|

||||

steps:

|

||||

- uses: actions/checkout@v2

|

||||

- uses: actions-rs/toolchain@v1

|

||||

with:

|

||||

profile: minimal

|

||||

toolchain: stable

|

||||

override: true

|

||||

- uses: actions-rs/cargo@v1

|

||||

with:

|

||||

command: build

|

||||

args: --release --manifest-path performance/runner/Cargo.toml

|

||||

- uses: actions/upload-artifact@v2

|

||||

with:

|

||||

name: runner

|

||||

path: performance/runner/target/release/runner

|

||||

|

||||

# run the performance measurements on the current or default branch

|

||||

measure-dev:

|

||||

needs: [build-runner]

|

||||

name: Measure Dev Branch

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- name: checkout dev

|

||||

uses: actions/checkout@v2

|

||||

- name: Setup Python

|

||||

uses: actions/setup-python@v2.2.2

|

||||

with:

|

||||

python-version: '3.8'

|

||||

- name: install dbt

|

||||

run: pip install -r dev-requirements.txt -r editable-requirements.txt

|

||||

- name: install hyperfine

|

||||

run: wget https://github.com/sharkdp/hyperfine/releases/download/v1.11.0/hyperfine_1.11.0_amd64.deb && sudo dpkg -i hyperfine_1.11.0_amd64.deb

|

||||

- uses: actions/download-artifact@v2

|

||||

with:

|

||||

name: runner

|

||||

- name: change permissions

|

||||

run: chmod +x ./runner

|

||||

- name: run

|

||||

run: ./runner measure -b dev -p ${{ github.workspace }}/performance/projects/

|

||||

- uses: actions/upload-artifact@v2

|

||||

with:

|

||||

name: dev-results

|

||||

path: performance/results/

|

||||

|

||||

# run the performance measurements on the release branch which we use

|

||||

# as a performance baseline. This part takes by far the longest, so

|

||||

# we do everything we can first so the job fails fast.

|

||||

# -----

|

||||

# we need to checkout dbt twice in this job: once for the baseline dbt

|

||||

# version, and once to get the latest regression testing projects,

|

||||

# metrics, and runner code from the develop or current branch so that

|

||||

# the calculations match for both versions of dbt we are comparing.

|

||||

measure-baseline:

|

||||

needs: [build-runner]

|

||||

name: Measure Baseline Branch

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- name: checkout latest

|

||||

uses: actions/checkout@v2

|

||||

with:

|

||||

ref: '0.20.latest'

|

||||

- name: Setup Python

|

||||

uses: actions/setup-python@v2.2.2

|

||||

with:

|

||||

python-version: '3.8'

|

||||

- name: move repo up a level

|

||||

run: mkdir ${{ github.workspace }}/../baseline/ && cp -r ${{ github.workspace }} ${{ github.workspace }}/../baseline

|

||||

- name: "[debug] ls new dbt location"

|

||||

run: ls ${{ github.workspace }}/../baseline/dbt/

|

||||

# installation creates egg-links so we have to preserve source

|

||||

- name: install dbt from new location

|

||||

run: cd ${{ github.workspace }}/../baseline/dbt/ && pip install -r dev-requirements.txt -r editable-requirements.txt

|

||||

# checkout the current branch to get all the target projects

|

||||

# this deletes the old checked out code which is why we had to copy before

|

||||

- name: checkout dev

|

||||

uses: actions/checkout@v2

|

||||

- name: install hyperfine

|

||||

run: wget https://github.com/sharkdp/hyperfine/releases/download/v1.11.0/hyperfine_1.11.0_amd64.deb && sudo dpkg -i hyperfine_1.11.0_amd64.deb

|

||||

- uses: actions/download-artifact@v2

|

||||

with:

|

||||

name: runner

|

||||

- name: change permissions

|

||||

run: chmod +x ./runner

|

||||

- name: run runner

|

||||

run: ./runner measure -b baseline -p ${{ github.workspace }}/performance/projects/

|

||||

- uses: actions/upload-artifact@v2

|

||||

with:

|

||||

name: baseline-results

|

||||

path: performance/results/

|

||||

|

||||

# detect regressions on the output generated from measuring

|

||||

# the two branches. Exits with non-zero code if a regression is detected.

|

||||

calculate-regressions:

|

||||

needs: [measure-dev, measure-baseline]

|

||||

name: Compare Results

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- uses: actions/download-artifact@v2

|

||||

with:

|

||||

name: dev-results

|

||||

- uses: actions/download-artifact@v2

|

||||

with:

|

||||

name: baseline-results

|

||||

- name: "[debug] ls result files"

|

||||

run: ls

|

||||

- uses: actions/download-artifact@v2

|

||||

with:

|

||||

name: runner

|

||||

- name: change permissions

|

||||

run: chmod +x ./runner

|

||||

- name: run calculation

|

||||

run: ./runner calculate -r ./

|

||||

# always attempt to upload the results even if there were regressions found

|

||||

- uses: actions/upload-artifact@v2

|

||||

if: ${{ always() }}

|

||||

with:

|

||||

name: final-calculations

|

||||

path: ./final_calculations.json

|

||||

74

CHANGELOG.md

74

CHANGELOG.md

@@ -1,4 +1,73 @@

|

||||

## dbt 0.20.0 (Release TBD)

|

||||

## dbt 0.21.0 (Release TBD)

|

||||

|

||||

### Features

|

||||

- Add `dbt build` command to run models, tests, seeds, and snapshots in DAG order. ([#2743] (https://github.com/dbt-labs/dbt/issues/2743), [#3490] (https://github.com/dbt-labs/dbt/issues/3490))

|

||||

|

||||

### Fixes

|

||||

- Fix docs generation for cross-db sources in REDSHIFT RA3 node ([#3236](https://github.com/fishtown-analytics/dbt/issues/3236), [#3408](https://github.com/fishtown-analytics/dbt/pull/3408))

|

||||

- Fix type coercion issues when fetching query result sets ([#2984](https://github.com/fishtown-analytics/dbt/issues/2984), [#3499](https://github.com/fishtown-analytics/dbt/pull/3499))

|

||||

- Handle whitespace after a plus sign on the project config ([#3526](https://github.com/dbt-labs/dbt/pull/3526))

|

||||

|

||||

### Under the hood

|

||||

- Add performance regression testing [#3602](https://github.com/dbt-labs/dbt/pull/3602)

|

||||

- Improve default view and table materialization performance by checking relational cache before attempting to drop temp relations ([#3112](https://github.com/fishtown-analytics/dbt/issues/3112), [#3468](https://github.com/fishtown-analytics/dbt/pull/3468))

|

||||

- Add optional `sslcert`, `sslkey`, and `sslrootcert` profile arguments to the Postgres connector. ([#3472](https://github.com/fishtown-analytics/dbt/pull/3472), [#3473](https://github.com/fishtown-analytics/dbt/pull/3473))

|

||||

- Move the example project used by `dbt init` into `dbt` repository, to avoid cloning an external repo ([#3005](https://github.com/fishtown-analytics/dbt/pull/3005), [#3474](https://github.com/fishtown-analytics/dbt/pull/3474), [#3536](https://github.com/fishtown-analytics/dbt/pull/3536))

|

||||

- Better interaction between `dbt init` and adapters. Avoid raising errors while initializing a project ([#2814](https://github.com/fishtown-analytics/dbt/pull/2814), [#3483](https://github.com/fishtown-analytics/dbt/pull/3483))

|

||||

- Update `create_adapter_plugins` script to include latest accessories, and stay up to date with latest dbt-core version ([#3002](https://github.com/fishtown-analytics/dbt/issues/3002), [#3509](https://github.com/fishtown-analytics/dbt/pull/3509))

|

||||

|

||||

### Dependencies

|

||||

- Require `werkzeug>=1`

|

||||

|

||||

Contributors:

|

||||

- [@kostek-pl](https://github.com/kostek-pl) ([#3236](https://github.com/fishtown-analytics/dbt/pull/3408))

|

||||

- [@tconbeer](https://github.com/tconbeer) [#3468](https://github.com/fishtown-analytics/dbt/pull/3468))

|

||||

- [@JLDLaughlin](https://github.com/JLDLaughlin) ([#3473](https://github.com/fishtown-analytics/dbt/pull/3473))

|

||||

- [@jmriego](https://github.com/jmriego) ([#3526](https://github.com/dbt-labs/dbt/pull/3526))

|

||||

|

||||

|

||||

## dbt 0.20.1 (Release TBD)

|

||||

|

||||

### Fixes

|

||||

- Fix `store_failures` config when defined as a modifier for `unique` and `not_null` tests ([#3575](https://github.com/fishtown-analytics/dbt/issues/3575), [#3577](https://github.com/fishtown-analytics/dbt/pull/3577))

|

||||

|

||||

|

||||

## dbt 0.20.0 (July 12, 2021)

|

||||

|

||||

### Fixes

|

||||

|

||||

- Avoid slowdown in column-level `persist_docs` on Snowflake, while preserving the error-avoidance from [#3149](https://github.com/fishtown-analytics/dbt/issues/3149) ([#3541](https://github.com/fishtown-analytics/dbt/issues/3541), [#3543](https://github.com/fishtown-analytics/dbt/pull/3543))

|

||||

- Partial parsing: handle already deleted nodes when schema block also deleted ([#3516](http://github.com/fishown-analystics/dbt/issues/3516), [#3522](http://github.com/fishown-analystics/dbt/issues/3522))

|

||||

|

||||

### Docs

|

||||

|

||||

- Update dbt logo and links ([docs#197](https://github.com/fishtown-analytics/dbt-docs/issues/197))

|

||||

|

||||

### Under the hood

|

||||

|

||||

- Add tracking for experimental parser accuracy ([3503](https://github.com/dbt-labs/dbt/pull/3503), [3553](https://github.com/dbt-labs/dbt/pull/3553))

|

||||

|

||||

## dbt 0.20.0rc2 (June 30, 2021)

|

||||

|

||||

### Fixes

|

||||

|

||||

- Handle quoted values within test configs, such as `where` ([#3458](https://github.com/fishtown-analytics/dbt/issues/3458), [#3459](https://github.com/fishtown-analytics/dbt/pull/3459))

|

||||

|

||||

### Docs

|

||||

|

||||

- Display `tags` on exposures ([docs#194](https://github.com/fishtown-analytics/dbt-docs/issues/194), [docs#195](https://github.com/fishtown-analytics/dbt-docs/issues/195))

|

||||

|

||||

### Under the hood

|

||||

|

||||

- Swap experimental parser implementation to use Rust [#3497](https://github.com/fishtown-analytics/dbt/pull/3497)

|

||||

- Dispatch the core SQL statement of the new test materialization, to benefit adapter maintainers ([#3465](https://github.com/fishtown-analytics/dbt/pull/3465), [#3461](https://github.com/fishtown-analytics/dbt/pull/3461))

|

||||

- Minimal validation of yaml dictionaries prior to partial parsing ([#3246](https://github.com/fishtown-analytics/dbt/issues/3246), [#3460](https://github.com/fishtown-analytics/dbt/pull/3460))

|

||||

- Add partial parsing tests and improve partial parsing handling of macros ([#3449](https://github.com/fishtown-analytics/dbt/issues/3449), [#3505](https://github.com/fishtown-analytics/dbt/pull/3505))

|

||||

- Update project loading event data to include experimental parser information. ([#3438](https://github.com/fishtown-analytics/dbt/issues/3438), [#3495](https://github.com/fishtown-analytics/dbt/pull/3495))

|

||||

|

||||

Contributors:

|

||||

- [@swanderz](https://github.com/swanderz) ([#3461](https://github.com/fishtown-analytics/dbt/pull/3461))

|

||||

- [@stkbailey](https://github.com/stkbailey) ([docs#195](https://github.com/fishtown-analytics/dbt-docs/issues/195))

|

||||

|

||||

## dbt 0.20.0rc1 (June 04, 2021)

|

||||

|

||||

@@ -26,7 +95,10 @@

|

||||

- Separate `compiled_path` from `build_path`, and print the former alongside node error messages ([#1985](https://github.com/fishtown-analytics/dbt/issues/1985), [#3327](https://github.com/fishtown-analytics/dbt/pull/3327))

|

||||

- Fix exception caused when running `dbt debug` with BigQuery connections ([#3314](https://github.com/fishtown-analytics/dbt/issues/3314), [#3351](https://github.com/fishtown-analytics/dbt/pull/3351))

|

||||

- Raise better error if snapshot is missing required configurations ([#3381](https://github.com/fishtown-analytics/dbt/issues/3381), [#3385](https://github.com/fishtown-analytics/dbt/pull/3385))

|

||||

- Fix `dbt run` errors caused from receiving non-JSON responses from Snowflake with Oauth ([#3350](https://github.com/fishtown-analytics/dbt/issues/3350))

|

||||

- Fix deserialization of Manifest lock attribute ([#3435](https://github.com/fishtown-analytics/dbt/issues/3435), [#3445](https://github.com/fishtown-analytics/dbt/pull/3445))

|

||||

- Fix `dbt run` errors caused from receiving non-JSON responses from Snowflake with Oauth ([#3350](https://github.com/fishtown-analytics/dbt/issues/3350)

|

||||

- Fix infinite recursion when parsing schema tests due to loops in macro calls ([#3444](https://github.com/fishtown-analytics/dbt/issues/3344), [#3454](https://github.com/fishtown-analytics/dbt/pull/3454))

|

||||

|

||||

### Docs

|

||||

- Reversed the rendering direction of relationship tests so that the test renders in the model it is defined in ([docs#181](https://github.com/fishtown-analytics/dbt-docs/issues/181), [docs#183](https://github.com/fishtown-analytics/dbt-docs/pull/183))

|

||||

|

||||

@@ -186,7 +186,7 @@

|

||||

same "printed page" as the copyright notice for easier

|

||||

identification within third-party archives.

|

||||

|

||||

Copyright {yyyy} {name of copyright owner}

|

||||

Copyright 2021 dbt Labs, Inc.

|

||||

|

||||

Licensed under the Apache License, Version 2.0 (the "License");

|

||||

you may not use this file except in compliance with the License.

|

||||

|

||||

47

README.md

47

README.md

@@ -1,28 +1,21 @@

|

||||

<p align="center">

|

||||

<img src="https://raw.githubusercontent.com/fishtown-analytics/dbt/6c6649f9129d5d108aa3b0526f634cd8f3a9d1ed/etc/dbt-logo-full.svg" alt="dbt logo" width="500"/>

|

||||

<img src="https://raw.githubusercontent.com/dbt-labs/dbt/ec7dee39f793aa4f7dd3dae37282cc87664813e4/etc/dbt-logo-full.svg" alt="dbt logo" width="500"/>

|

||||

</p>

|

||||

<p align="center">

|

||||

<a href="https://codeclimate.com/github/fishtown-analytics/dbt">

|

||||

<img src="https://codeclimate.com/github/fishtown-analytics/dbt/badges/gpa.svg" alt="Code Climate"/>

|

||||

<a href="https://github.com/dbt-labs/dbt/actions/workflows/tests.yml?query=branch%3Adevelop">

|

||||

<img src="https://github.com/dbt-labs/dbt/actions/workflows/tests.yml/badge.svg" alt="GitHub Actions"/>

|

||||

</a>

|

||||

<a href="https://circleci.com/gh/fishtown-analytics/dbt/tree/master">

|

||||

<img src="https://circleci.com/gh/fishtown-analytics/dbt/tree/master.svg?style=svg" alt="CircleCI" />

|

||||

<a href="https://circleci.com/gh/dbt-labs/dbt/tree/develop">

|

||||

<img src="https://circleci.com/gh/dbt-labs/dbt/tree/develop.svg?style=svg" alt="CircleCI" />

|

||||

</a>

|

||||

<a href="https://ci.appveyor.com/project/DrewBanin/dbt/branch/development">

|

||||

<img src="https://ci.appveyor.com/api/projects/status/v01rwd3q91jnwp9m/branch/development?svg=true" alt="AppVeyor" />

|

||||

</a>

|

||||

<a href="https://community.getdbt.com">

|

||||

<img src="https://community.getdbt.com/badge.svg" alt="Slack" />

|

||||

<a href="https://dev.azure.com/fishtown-analytics/dbt/_build?definitionId=1&_a=summary&repositoryFilter=1&branchFilter=789%2C789%2C789%2C789">

|

||||

<img src="https://dev.azure.com/fishtown-analytics/dbt/_apis/build/status/fishtown-analytics.dbt?branchName=develop" alt="Azure Pipelines" />

|

||||

</a>

|

||||

</p>

|

||||

|

||||

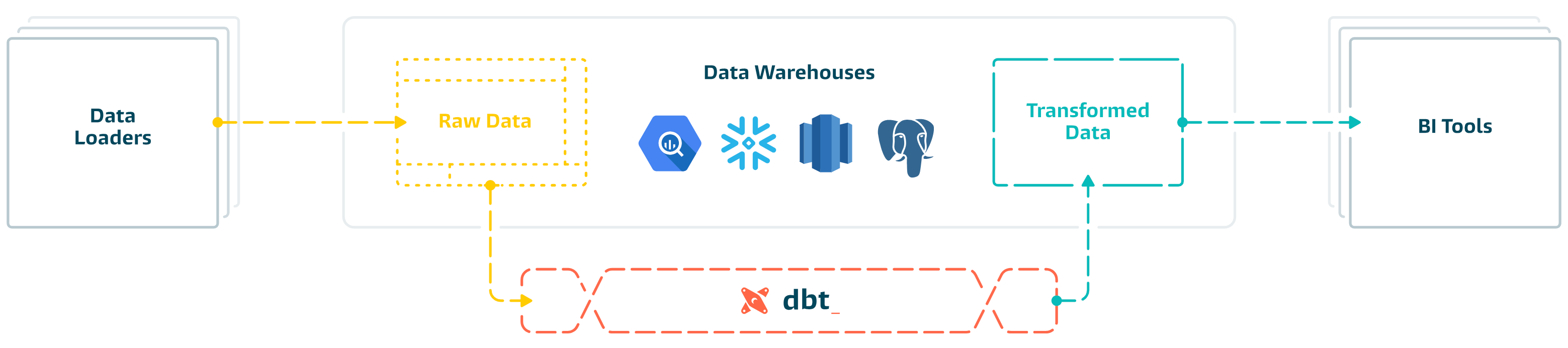

**[dbt](https://www.getdbt.com/)** (data build tool) enables data analysts and engineers to transform their data using the same practices that software engineers use to build applications.

|

||||

**[dbt](https://www.getdbt.com/)** enables data analysts and engineers to transform their data using the same practices that software engineers use to build applications.

|

||||

|

||||

dbt is the T in ELT. Organize, cleanse, denormalize, filter, rename, and pre-aggregate the raw data in your warehouse so that it's ready for analysis.

|

||||

|

||||

|

||||

|

||||

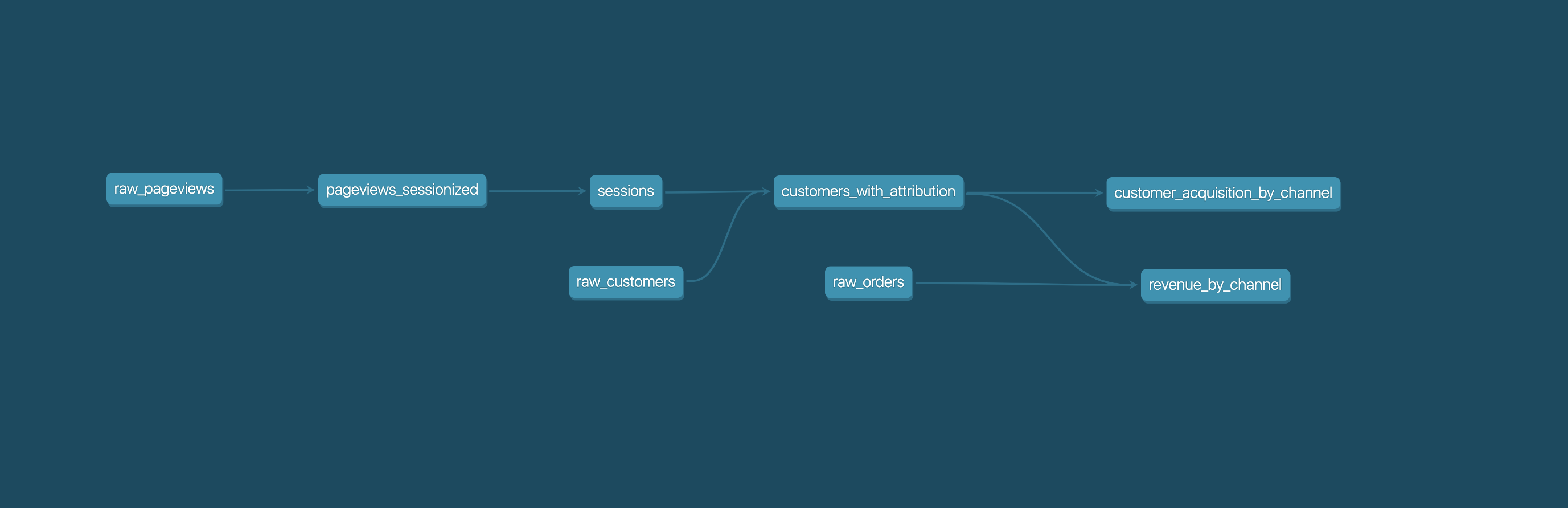

dbt can be used to [aggregate pageviews into sessions](https://github.com/fishtown-analytics/snowplow), calculate [ad spend ROI](https://github.com/fishtown-analytics/facebook-ads), or report on [email campaign performance](https://github.com/fishtown-analytics/mailchimp).

|

||||

|

||||

|

||||

## Understanding dbt

|

||||

|

||||

@@ -30,28 +23,22 @@ Analysts using dbt can transform their data by simply writing select statements,

|

||||

|

||||

These select statements, or "models", form a dbt project. Models frequently build on top of one another – dbt makes it easy to [manage relationships](https://docs.getdbt.com/docs/ref) between models, and [visualize these relationships](https://docs.getdbt.com/docs/documentation), as well as assure the quality of your transformations through [testing](https://docs.getdbt.com/docs/testing).

|

||||

|

||||

|

||||

|

||||

|

||||

## Getting started

|

||||

|

||||

- [Install dbt](https://docs.getdbt.com/docs/installation)

|

||||

- Read the [documentation](https://docs.getdbt.com/).

|

||||

- Productionize your dbt project with [dbt Cloud](https://www.getdbt.com)

|

||||

- [Install dbt](https://docs.getdbt.com/docs/installation)

|

||||

- Read the [introduction](https://docs.getdbt.com/docs/introduction/) and [viewpoint](https://docs.getdbt.com/docs/about/viewpoint/)

|

||||

|

||||

## Find out more

|

||||

## Join the dbt Community

|

||||

|

||||

- Check out the [Introduction to dbt](https://docs.getdbt.com/docs/introduction/).

|

||||

- Read the [dbt Viewpoint](https://docs.getdbt.com/docs/about/viewpoint/).

|

||||

|

||||

## Join thousands of analysts in the dbt community

|

||||

|

||||

- Join the [chat](http://community.getdbt.com/) on Slack.

|

||||

- Find community posts on [dbt Discourse](https://discourse.getdbt.com).

|

||||

- Be part of the conversation in the [dbt Community Slack](http://community.getdbt.com/)

|

||||

- Read more on the [dbt Community Discourse](https://discourse.getdbt.com)

|

||||

|

||||

## Reporting bugs and contributing code

|

||||

|

||||

- Want to report a bug or request a feature? Let us know on [Slack](http://community.getdbt.com/), or open [an issue](https://github.com/fishtown-analytics/dbt/issues/new).

|

||||

- Want to help us build dbt? Check out the [Contributing Getting Started Guide](https://github.com/fishtown-analytics/dbt/blob/HEAD/CONTRIBUTING.md)

|

||||

- Want to report a bug or request a feature? Let us know on [Slack](http://community.getdbt.com/), or open [an issue](https://github.com/dbt-labs/dbt/issues/new)

|

||||

- Want to help us build dbt? Check out the [Contributing Guide](https://github.com/dbt-labs/dbt/blob/HEAD/CONTRIBUTING.md)

|

||||

|

||||

## Code of Conduct

|

||||

|

||||

|

||||

@@ -1 +1 @@

|

||||

recursive-include dbt/include *.py *.sql *.yml *.html *.md

|

||||

recursive-include dbt/include *.py *.sql *.yml *.html *.md .gitkeep .gitignore

|

||||

|

||||

@@ -433,13 +433,14 @@ class SchemaSearchMap(Dict[InformationSchema, Set[Optional[str]]]):

|

||||

for schema in schemas:

|

||||

yield information_schema_name, schema

|

||||

|

||||

def flatten(self):

|

||||

def flatten(self, allow_multiple_databases: bool = False):

|

||||

new = self.__class__()

|

||||

|

||||

# make sure we don't have duplicates

|

||||

seen = {r.database.lower() for r in self if r.database}

|

||||

if len(seen) > 1:

|

||||

dbt.exceptions.raise_compiler_error(str(seen))

|

||||

# make sure we don't have multiple databases if allow_multiple_databases is set to False

|

||||

if not allow_multiple_databases:

|

||||

seen = {r.database.lower() for r in self if r.database}

|

||||

if len(seen) > 1:

|

||||

dbt.exceptions.raise_compiler_error(str(seen))

|

||||

|

||||

for information_schema_name, schema in self.search():

|

||||

path = {

|

||||

|

||||

@@ -35,7 +35,11 @@ class ISODateTime(agate.data_types.DateTime):

|

||||

)

|

||||

|

||||

|

||||

def build_type_tester(text_columns: Iterable[str]) -> agate.TypeTester:

|

||||

def build_type_tester(

|

||||

text_columns: Iterable[str],

|

||||

string_null_values: Optional[Iterable[str]] = ('null', '')

|

||||

) -> agate.TypeTester:

|

||||

|

||||

types = [

|

||||

agate.data_types.Number(null_values=('null', '')),

|

||||

agate.data_types.Date(null_values=('null', ''),

|

||||

@@ -46,10 +50,10 @@ def build_type_tester(text_columns: Iterable[str]) -> agate.TypeTester:

|

||||

agate.data_types.Boolean(true_values=('true',),

|

||||

false_values=('false',),

|

||||

null_values=('null', '')),

|

||||

agate.data_types.Text(null_values=('null', ''))

|

||||

agate.data_types.Text(null_values=string_null_values)

|

||||

]

|

||||

force = {

|

||||

k: agate.data_types.Text(null_values=('null', ''))

|

||||

k: agate.data_types.Text(null_values=string_null_values)

|

||||

for k in text_columns

|

||||

}

|

||||

return agate.TypeTester(force=force, types=types)

|

||||

@@ -66,7 +70,13 @@ def table_from_rows(

|

||||

if text_only_columns is None:

|

||||

column_types = DEFAULT_TYPE_TESTER

|

||||

else:

|

||||

column_types = build_type_tester(text_only_columns)

|

||||

# If text_only_columns are present, prevent coercing empty string or

|

||||

# literal 'null' strings to a None representation.

|

||||

column_types = build_type_tester(

|

||||

text_only_columns,

|

||||

string_null_values=()

|

||||

)

|

||||

|

||||

return agate.Table(rows, column_names, column_types=column_types)

|

||||

|

||||

|

||||

@@ -86,19 +96,34 @@ def table_from_data(data, column_names: Iterable[str]) -> agate.Table:

|

||||

|

||||

|

||||

def table_from_data_flat(data, column_names: Iterable[str]) -> agate.Table:

|

||||

"Convert list of dictionaries into an Agate table"

|

||||

"""

|

||||

Convert a list of dictionaries into an Agate table. This method does not

|

||||

coerce string values into more specific types (eg. '005' will not be

|

||||

coerced to '5'). Additionally, this method does not coerce values to

|

||||

None (eg. '' or 'null' will retain their string literal representations).

|

||||

"""

|

||||

|

||||

rows = []

|

||||

text_only_columns = set()

|

||||

for _row in data:

|

||||

row = []

|

||||

for value in list(_row.values()):

|

||||

for col_name in column_names:

|

||||

value = _row[col_name]

|

||||

if isinstance(value, (dict, list, tuple)):

|

||||

row.append(json.dumps(value, cls=dbt.utils.JSONEncoder))

|

||||

else:

|

||||

row.append(value)

|

||||

# Represent container types as json strings

|

||||

value = json.dumps(value, cls=dbt.utils.JSONEncoder)

|

||||

text_only_columns.add(col_name)

|

||||

elif isinstance(value, str):

|

||||

text_only_columns.add(col_name)

|

||||

row.append(value)

|

||||

|

||||

rows.append(row)

|

||||

|

||||

return table_from_rows(rows=rows, column_names=column_names)

|

||||

return table_from_rows(

|

||||

rows=rows,

|

||||

column_names=column_names,

|

||||

text_only_columns=text_only_columns

|

||||

)

|

||||

|

||||

|

||||

def empty_table():

|

||||

|

||||

@@ -147,7 +147,7 @@ class DbtProjectYamlRenderer(BaseRenderer):

|

||||

|

||||

if first in {'seeds', 'models', 'snapshots', 'tests'}:

|

||||

keypath_parts = {

|

||||

(k.lstrip('+') if isinstance(k, str) else k)

|

||||

(k.lstrip('+ ') if isinstance(k, str) else k)

|

||||

for k in keypath

|

||||

}

|

||||

# model-level hooks

|

||||

|

||||

@@ -97,7 +97,7 @@ class BaseContextConfigGenerator(Generic[T]):

|

||||

result = {}

|

||||

for key, value in level_config.items():

|

||||

if key.startswith('+'):

|

||||

result[key[1:]] = deepcopy(value)

|

||||

result[key[1:].strip()] = deepcopy(value)

|

||||

elif not isinstance(value, dict):

|

||||

result[key] = deepcopy(value)

|

||||

|

||||

|

||||

@@ -169,6 +169,8 @@ class TestMacroNamespace:

|

||||

|

||||

def recursively_get_depends_on_macros(self, depends_on_macros, dep_macros):

|

||||

for macro_unique_id in depends_on_macros:

|

||||

if macro_unique_id in dep_macros:

|

||||

continue

|

||||

dep_macros.append(macro_unique_id)

|

||||

if macro_unique_id in self.macro_resolver.macros:

|

||||

macro = self.macro_resolver.macros[macro_unique_id]

|

||||

|

||||

@@ -156,20 +156,11 @@ class BaseSourceFile(dbtClassMixin, SerializableType):

|

||||

|

||||

def _serialize(self):

|

||||

dct = self.to_dict()

|

||||

if 'pp_files' in dct:

|

||||

del dct['pp_files']

|

||||

if 'pp_test_index' in dct:

|

||||

del dct['pp_test_index']

|

||||

return dct

|

||||

|

||||

@classmethod

|

||||

def _deserialize(cls, dct: Dict[str, int]):

|

||||

if dct['parse_file_type'] == 'schema':

|

||||

# TODO: why are these keys even here

|

||||

if 'pp_files' in dct:

|

||||

del dct['pp_files']

|

||||

if 'pp_test_index' in dct:

|

||||

del dct['pp_test_index']

|

||||

sf = SchemaSourceFile.from_dict(dct)

|

||||

else:

|

||||

sf = SourceFile.from_dict(dct)

|

||||

@@ -223,7 +214,7 @@ class SourceFile(BaseSourceFile):

|

||||

class SchemaSourceFile(BaseSourceFile):

|

||||

dfy: Dict[str, Any] = field(default_factory=dict)

|

||||

# these are in the manifest.nodes dictionary

|

||||

tests: List[str] = field(default_factory=list)

|

||||

tests: Dict[str, Any] = field(default_factory=dict)

|

||||

sources: List[str] = field(default_factory=list)

|

||||

exposures: List[str] = field(default_factory=list)

|

||||

# node patches contain models, seeds, snapshots, analyses

|

||||

@@ -255,14 +246,53 @@ class SchemaSourceFile(BaseSourceFile):

|

||||

|

||||

def __post_serialize__(self, dct):

|

||||

dct = super().__post_serialize__(dct)

|

||||

if 'pp_files' in dct:

|

||||

del dct['pp_files']

|

||||

if 'pp_test_index' in dct:

|

||||

del dct['pp_test_index']

|

||||

# Remove partial parsing specific data

|

||||

for key in ('pp_files', 'pp_test_index', 'pp_dict'):

|

||||

if key in dct:

|

||||

del dct[key]

|

||||

return dct

|

||||

|

||||

def append_patch(self, yaml_key, unique_id):

|

||||

self.node_patches.append(unique_id)

|

||||

|

||||

def add_test(self, node_unique_id, test_from):

|

||||

name = test_from['name']

|

||||

key = test_from['key']

|

||||

if key not in self.tests:

|

||||

self.tests[key] = {}

|

||||

if name not in self.tests[key]:

|

||||

self.tests[key][name] = []

|

||||

self.tests[key][name].append(node_unique_id)

|

||||

|

||||

def remove_tests(self, yaml_key, name):

|

||||

if yaml_key in self.tests:

|

||||

if name in self.tests[yaml_key]:

|

||||

del self.tests[yaml_key][name]

|

||||

|

||||

def get_tests(self, yaml_key, name):

|

||||

if yaml_key in self.tests:

|

||||

if name in self.tests[yaml_key]:

|

||||

return self.tests[yaml_key][name]

|

||||

return []

|

||||

|

||||

def get_key_and_name_for_test(self, test_unique_id):

|

||||

yaml_key = None

|

||||

block_name = None

|

||||

for key in self.tests.keys():

|

||||

for name in self.tests[key]:

|

||||

for unique_id in self.tests[key][name]:

|

||||

if unique_id == test_unique_id:

|

||||

yaml_key = key

|

||||

block_name = name

|

||||

break

|

||||

return (yaml_key, block_name)

|

||||

|

||||

def get_all_test_ids(self):

|

||||

test_ids = []

|

||||

for key in self.tests.keys():

|

||||

for name in self.tests[key]:

|

||||

test_ids.extend(self.tests[key][name])

|

||||

return test_ids

|

||||

|

||||

|

||||

AnySourceFile = Union[SchemaSourceFile, SourceFile]

|

||||

|

||||

@@ -243,7 +243,7 @@ def _sort_values(dct):

|

||||

return {k: sorted(v) for k, v in dct.items()}

|

||||

|

||||

|

||||

def build_edges(nodes: List[ManifestNode]):

|

||||

def build_node_edges(nodes: List[ManifestNode]):

|

||||

"""Build the forward and backward edges on the given list of ParsedNodes

|

||||

and return them as two separate dictionaries, each mapping unique IDs to

|

||||

lists of edges.

|

||||

@@ -259,6 +259,18 @@ def build_edges(nodes: List[ManifestNode]):

|

||||

return _sort_values(forward_edges), _sort_values(backward_edges)

|

||||

|

||||

|

||||

# Build a map of children of macros

|

||||

def build_macro_edges(nodes: List[Any]):

|

||||

forward_edges: Dict[str, List[str]] = {

|

||||

n.unique_id: [] for n in nodes if n.unique_id.startswith('macro') or n.depends_on.macros

|

||||

}

|

||||

for node in nodes:

|

||||

for unique_id in node.depends_on.macros:

|

||||

if unique_id in forward_edges.keys():

|

||||

forward_edges[unique_id].append(node.unique_id)

|

||||

return _sort_values(forward_edges)

|

||||

|

||||

|

||||

def _deepcopy(value):

|

||||

return value.from_dict(value.to_dict(omit_none=True))

|

||||

|

||||

@@ -525,6 +537,12 @@ class MacroMethods:

|

||||

return candidates

|

||||

|

||||

|

||||

@dataclass

|

||||

class ParsingInfo:

|

||||

static_analysis_parsed_path_count: int = 0

|

||||

static_analysis_path_count: int = 0

|

||||

|

||||

|

||||

@dataclass

|

||||

class ManifestStateCheck(dbtClassMixin):

|

||||

vars_hash: FileHash = field(default_factory=FileHash.empty)

|

||||

@@ -566,9 +584,13 @@ class Manifest(MacroMethods, DataClassMessagePackMixin, dbtClassMixin):

|

||||

_analysis_lookup: Optional[AnalysisLookup] = field(

|

||||

default=None, metadata={'serialize': lambda x: None, 'deserialize': lambda x: None}

|

||||

)

|

||||

_parsing_info: ParsingInfo = field(

|

||||

default_factory=ParsingInfo,

|

||||

metadata={'serialize': lambda x: None, 'deserialize': lambda x: None}

|

||||

)

|

||||

_lock: Lock = field(

|

||||

default_factory=flags.MP_CONTEXT.Lock,

|

||||

metadata={'serialize': lambda x: None, 'deserialize': lambda x: flags.MP_CONTEXT.Lock}

|

||||

metadata={'serialize': lambda x: None, 'deserialize': lambda x: None}

|

||||

)

|

||||

|

||||

def __pre_serialize__(self):

|

||||

@@ -577,6 +599,11 @@ class Manifest(MacroMethods, DataClassMessagePackMixin, dbtClassMixin):

|

||||

self.source_patches = {}

|

||||

return self

|

||||

|

||||

@classmethod

|

||||

def __post_deserialize__(cls, obj):

|

||||

obj._lock = flags.MP_CONTEXT.Lock()

|

||||

return obj

|

||||

|

||||

def sync_update_node(

|

||||

self, new_node: NonSourceCompiledNode

|

||||

) -> NonSourceCompiledNode:

|

||||

@@ -779,10 +806,18 @@ class Manifest(MacroMethods, DataClassMessagePackMixin, dbtClassMixin):

|

||||

self.sources.values(),

|

||||

self.exposures.values(),

|

||||

))

|

||||

forward_edges, backward_edges = build_edges(edge_members)

|

||||

forward_edges, backward_edges = build_node_edges(edge_members)

|

||||

self.child_map = forward_edges

|

||||

self.parent_map = backward_edges

|

||||

|

||||

def build_macro_child_map(self):

|

||||

edge_members = list(chain(

|

||||

self.nodes.values(),

|

||||

self.macros.values(),

|

||||

))

|

||||

forward_edges = build_macro_edges(edge_members)

|

||||

return forward_edges

|

||||

|

||||

def writable_manifest(self):

|

||||

self.build_parent_and_child_maps()

|

||||

return WritableManifest(

|

||||

@@ -1016,10 +1051,11 @@ class Manifest(MacroMethods, DataClassMessagePackMixin, dbtClassMixin):

|

||||

_check_duplicates(node, self.nodes)

|

||||

self.nodes[node.unique_id] = node

|

||||

|

||||

def add_node(self, source_file: AnySourceFile, node: ManifestNodes):

|

||||

def add_node(self, source_file: AnySourceFile, node: ManifestNodes, test_from=None):

|

||||

self.add_node_nofile(node)

|

||||

if isinstance(source_file, SchemaSourceFile):

|

||||

source_file.tests.append(node.unique_id)

|

||||

assert test_from

|

||||

source_file.add_test(node.unique_id, test_from)

|

||||

else:

|

||||

source_file.nodes.append(node.unique_id)

|

||||

|

||||

@@ -1034,10 +1070,11 @@ class Manifest(MacroMethods, DataClassMessagePackMixin, dbtClassMixin):

|

||||

else:

|

||||

self._disabled[node.unique_id] = [node]

|

||||

|

||||

def add_disabled(self, source_file: AnySourceFile, node: CompileResultNode):

|

||||

def add_disabled(self, source_file: AnySourceFile, node: CompileResultNode, test_from=None):

|

||||

self.add_disabled_nofile(node)

|

||||

if isinstance(source_file, SchemaSourceFile):

|

||||

source_file.tests.append(node.unique_id)

|

||||

assert test_from

|

||||

source_file.add_test(node.unique_id, test_from)

|

||||

else:

|

||||

source_file.nodes.append(node.unique_id)

|

||||

|

||||

|

||||

@@ -592,7 +592,8 @@ class ParsedSourceDefinition(

|

||||

UnparsedBaseNode,

|

||||

HasUniqueID,

|

||||

HasRelationMetadata,

|

||||

HasFqn

|

||||

HasFqn,

|

||||

|

||||

):

|

||||

name: str

|

||||

source_name: str

|

||||

@@ -689,6 +690,10 @@ class ParsedSourceDefinition(

|

||||

def depends_on_nodes(self):

|

||||

return []

|

||||

|

||||

@property

|

||||

def depends_on(self):

|

||||

return {'nodes': []}

|

||||

|

||||

@property

|

||||

def refs(self):

|

||||

return []

|

||||

|

||||

@@ -78,6 +78,7 @@ class TestStatus(StrEnum):

|

||||

Error = NodeStatus.Error

|

||||

Fail = NodeStatus.Fail

|

||||

Warn = NodeStatus.Warn

|

||||

Skipped = NodeStatus.Skipped

|

||||

|

||||

|

||||

class FreshnessStatus(StrEnum):

|

||||

|

||||

@@ -1,10 +1,8 @@

|

||||

import threading

|

||||

from queue import PriorityQueue

|

||||

from typing import (

|

||||

Dict, Set, Optional

|

||||

)

|

||||

|

||||

import networkx as nx # type: ignore

|

||||

import threading

|

||||

|

||||

from queue import PriorityQueue

|

||||

from typing import Dict, Set, List, Generator, Optional

|

||||

|

||||

from .graph import UniqueId

|

||||

from dbt.contracts.graph.parsed import ParsedSourceDefinition, ParsedExposure

|

||||

@@ -21,9 +19,8 @@ class GraphQueue:

|

||||

that separate threads do not call `.empty()` or `__len__()` and `.get()` at

|

||||

the same time, as there is an unlocked race!

|

||||

"""

|

||||

def __init__(

|

||||

self, graph: nx.DiGraph, manifest: Manifest, selected: Set[UniqueId]

|

||||

):

|

||||

|

||||

def __init__(self, graph: nx.DiGraph, manifest: Manifest, selected: Set[UniqueId]):

|

||||

self.graph = graph

|

||||

self.manifest = manifest

|

||||

self._selected = selected

|

||||

@@ -37,7 +34,7 @@ class GraphQueue:

|

||||

# this lock controls most things

|

||||

self.lock = threading.Lock()

|

||||

# store the 'score' of each node as a number. Lower is higher priority.

|

||||

self._scores = self._calculate_scores()

|

||||

self._scores = self._get_scores(self.graph)

|

||||

# populate the initial queue

|

||||

self._find_new_additions()

|

||||

# awaits after task end

|

||||

@@ -56,30 +53,59 @@ class GraphQueue:

|

||||

return False

|

||||

return True

|

||||

|

||||

def _calculate_scores(self) -> Dict[UniqueId, int]:

|

||||

"""Calculate the 'value' of each node in the graph based on how many

|

||||

blocking descendants it has. We use this score for the internal

|

||||

priority queue's ordering, so the quality of this metric is important.

|

||||

@staticmethod

|

||||

def _grouped_topological_sort(

|

||||

graph: nx.DiGraph,

|

||||

) -> Generator[List[str], None, None]:

|

||||

"""Topological sort of given graph that groups ties.

|

||||

|

||||

The score is stored as a negative number because the internal

|

||||

PriorityQueue picks lowest values first.

|

||||

Adapted from `nx.topological_sort`, this function returns a topo sort of a graph however

|

||||

instead of arbitrarily ordering ties in the sort order, ties are grouped together in

|

||||

lists.

|

||||

|

||||

We could do this in one pass over the graph instead of len(self.graph)

|

||||

passes but this is easy. For large graphs this may hurt performance.

|

||||

Args:

|

||||

graph: The graph to be sorted.

|

||||

|

||||

This operates on the graph, so it would require a lock if called from

|

||||

outside __init__.

|

||||

|

||||

:return Dict[str, int]: The score dict, mapping unique IDs to integer

|

||||

scores. Lower scores are higher priority.

|

||||

Returns:

|

||||

A generator that yields lists of nodes, one list per graph depth level.

|

||||

"""

|

||||

indegree_map = {v: d for v, d in graph.in_degree() if d > 0}

|

||||

zero_indegree = [v for v, d in graph.in_degree() if d == 0]

|

||||

|

||||

while zero_indegree:

|

||||

yield zero_indegree

|

||||

new_zero_indegree = []

|

||||

for v in zero_indegree:

|

||||

for _, child in graph.edges(v):

|

||||

indegree_map[child] -= 1

|

||||

if not indegree_map[child]:

|

||||

new_zero_indegree.append(child)

|

||||

zero_indegree = new_zero_indegree

|

||||

|

||||

def _get_scores(self, graph: nx.DiGraph) -> Dict[str, int]:

|

||||

"""Scoring nodes for processing order.

|

||||

|

||||

Scores are calculated by the graph depth level. Lowest score (0) should be processed first.

|

||||

|

||||

Args:

|

||||

graph: The graph to be scored.

|

||||

|

||||

Returns:

|

||||

A dictionary consisting of `node name`:`score` pairs.

|

||||

"""

|

||||

# split graph by connected subgraphs

|

||||

subgraphs = (

|

||||

graph.subgraph(x) for x in nx.connected_components(nx.Graph(graph))

|

||||

)

|

||||

|

||||

# score all nodes in all subgraphs

|

||||

scores = {}

|

||||

for node in self.graph.nodes():

|

||||

score = -1 * len([

|

||||

d for d in nx.descendants(self.graph, node)

|

||||

if self._include_in_cost(d)

|

||||

])

|

||||

scores[node] = score

|

||||

for subgraph in subgraphs:

|

||||

grouped_nodes = self._grouped_topological_sort(subgraph)

|

||||

for level, group in enumerate(grouped_nodes):

|

||||

for node in group:

|

||||

scores[node] = level

|

||||

|

||||

return scores

|

||||

|

||||

def get(

|

||||

@@ -133,8 +159,6 @@ class GraphQueue:

|

||||

def _find_new_additions(self) -> None:

|

||||

"""Find any nodes in the graph that need to be added to the internal

|

||||

queue and add them.

|

||||

|

||||

Callers must hold the lock.

|

||||

"""

|

||||

for node, in_degree in self.graph.in_degree():

|

||||

if not self._already_known(node) and in_degree == 0:

|

||||

|

||||

@@ -6,6 +6,22 @@

|

||||

{% set target_relation = this.incorporate(type='table') %}

|

||||

{% set existing_relation = load_relation(this) %}

|

||||

|

||||

{% set tmp_identifier = model['name'] + '__dbt_tmp' %}

|

||||

{% set backup_identifier = model['name'] + "__dbt_backup" %}

|

||||

|

||||

-- the intermediate_ and backup_ relations should not already exist in the database; get_relation

|

||||

-- will return None in that case. Otherwise, we get a relation that we can drop

|

||||

-- later, before we try to use this name for the current operation. This has to happen before

|

||||

-- BEGIN, in a separate transaction

|

||||

{% set preexisting_intermediate_relation = adapter.get_relation(identifier=tmp_identifier,

|

||||

schema=schema,

|

||||

database=database) %}

|

||||

{% set preexisting_backup_relation = adapter.get_relation(identifier=backup_identifier,

|

||||

schema=schema,

|

||||

database=database) %}

|

||||

{{ drop_relation_if_exists(preexisting_intermediate_relation) }}

|

||||

{{ drop_relation_if_exists(preexisting_backup_relation) }}

|

||||

|

||||

{{ run_hooks(pre_hooks, inside_transaction=False) }}

|

||||

|

||||

-- `BEGIN` happens here:

|

||||

@@ -15,16 +31,9 @@

|

||||

{% if existing_relation is none %}

|

||||

{% set build_sql = create_table_as(False, target_relation, sql) %}

|

||||

{% elif existing_relation.is_view or should_full_refresh() %}

|

||||

{#-- Make sure the backup doesn't exist so we don't encounter issues with the rename below #}

|

||||

{% set tmp_identifier = model['name'] + '__dbt_tmp' %}

|

||||

{% set backup_identifier = model['name'] + "__dbt_backup" %}

|

||||

|

||||

{% set intermediate_relation = existing_relation.incorporate(path={"identifier": tmp_identifier}) %}

|

||||

{% set backup_relation = existing_relation.incorporate(path={"identifier": backup_identifier}) %}

|

||||

|

||||

{% do adapter.drop_relation(intermediate_relation) %}

|

||||

{% do adapter.drop_relation(backup_relation) %}

|

||||

|

||||

{% set build_sql = create_table_as(False, intermediate_relation, sql) %}

|

||||

{% set need_swap = true %}

|

||||

{% do to_drop.append(backup_relation) %}

|

||||

|

||||

@@ -12,7 +12,12 @@

|

||||

schema=schema,

|

||||

database=database,

|

||||

type='table') -%}

|

||||

|

||||

-- the intermediate_relation should not already exist in the database; get_relation

|

||||

-- will return None in that case. Otherwise, we get a relation that we can drop

|

||||

-- later, before we try to use this name for the current operation

|

||||

{%- set preexisting_intermediate_relation = adapter.get_relation(identifier=tmp_identifier,

|

||||

schema=schema,

|

||||

database=database) -%}

|

||||

/*

|

||||

See ../view/view.sql for more information about this relation.

|

||||

*/

|

||||

@@ -21,14 +26,15 @@

|

||||

schema=schema,

|

||||

database=database,

|

||||

type=backup_relation_type) -%}

|

||||

|

||||

{%- set exists_as_table = (old_relation is not none and old_relation.is_table) -%}

|

||||

{%- set exists_as_view = (old_relation is not none and old_relation.is_view) -%}

|

||||

-- as above, the backup_relation should not already exist

|

||||

{%- set preexisting_backup_relation = adapter.get_relation(identifier=backup_identifier,

|

||||

schema=schema,

|

||||

database=database) -%}

|

||||

|

||||

|

||||

-- drop the temp relations if they exists for some reason

|

||||

{{ adapter.drop_relation(intermediate_relation) }}

|

||||

{{ adapter.drop_relation(backup_relation) }}

|

||||

-- drop the temp relations if they exist already in the database

|

||||

{{ drop_relation_if_exists(preexisting_intermediate_relation) }}

|

||||

{{ drop_relation_if_exists(preexisting_backup_relation) }}

|

||||

|

||||

{{ run_hooks(pre_hooks, inside_transaction=False) }}

|

||||

|

||||

|

||||

@@ -1,3 +1,19 @@

|

||||

{% macro get_test_sql(main_sql, fail_calc, warn_if, error_if, limit) -%}

|

||||

{{ adapter.dispatch('get_test_sql')(main_sql, fail_calc, warn_if, error_if, limit) }}

|

||||

{%- endmacro %}

|

||||

|

||||

|

||||

{% macro default__get_test_sql(main_sql, fail_calc, warn_if, error_if, limit) -%}

|

||||

select

|

||||

{{ fail_calc }} as failures,

|

||||

{{ fail_calc }} {{ warn_if }} as should_warn,

|

||||

{{ fail_calc }} {{ error_if }} as should_error

|

||||

from (

|

||||

{{ main_sql }}

|

||||

{{ "limit " ~ limit if limit != none }}

|

||||

) dbt_internal_test

|

||||

{%- endmacro %}

|

||||

|

||||

{%- materialization test, default -%}

|

||||

|

||||

{% set relations = [] %}

|

||||

@@ -39,14 +55,7 @@

|

||||

|

||||

{% call statement('main', fetch_result=True) -%}

|

||||

|

||||

select

|

||||

{{ fail_calc }} as failures,

|

||||

{{ fail_calc }} {{ warn_if }} as should_warn,

|

||||

{{ fail_calc }} {{ error_if }} as should_error

|

||||

from (

|

||||

{{ main_sql }}

|

||||

{{ "limit " ~ limit if limit != none }}

|

||||

) dbt_internal_test

|

||||

{{ get_test_sql(main_sql, fail_calc, warn_if, error_if, limit)}}

|

||||

|

||||

{%- endcall %}

|

||||

|

||||

|

||||

@@ -9,7 +9,12 @@

|

||||

type='view') -%}

|

||||

{%- set intermediate_relation = api.Relation.create(identifier=tmp_identifier,

|

||||

schema=schema, database=database, type='view') -%}

|

||||

|

||||

-- the intermediate_relation should not already exist in the database; get_relation

|

||||

-- will return None in that case. Otherwise, we get a relation that we can drop

|

||||

-- later, before we try to use this name for the current operation

|

||||

{%- set preexisting_intermediate_relation = adapter.get_relation(identifier=tmp_identifier,

|

||||

schema=schema,

|

||||

database=database) -%}

|

||||

/*

|

||||

This relation (probably) doesn't exist yet. If it does exist, it's a leftover from

|

||||

a previous run, and we're going to try to drop it immediately. At the end of this

|

||||

@@ -27,14 +32,16 @@

|

||||

{%- set backup_relation = api.Relation.create(identifier=backup_identifier,

|

||||

schema=schema, database=database,

|

||||

type=backup_relation_type) -%}

|

||||

|

||||

{%- set exists_as_view = (old_relation is not none and old_relation.is_view) -%}

|

||||

-- as above, the backup_relation should not already exist

|

||||

{%- set preexisting_backup_relation = adapter.get_relation(identifier=backup_identifier,

|

||||

schema=schema,

|

||||

database=database) -%}

|

||||

|

||||

{{ run_hooks(pre_hooks, inside_transaction=False) }}

|

||||

|

||||

-- drop the temp relations if they exists for some reason

|

||||

{{ adapter.drop_relation(intermediate_relation) }}

|

||||

{{ adapter.drop_relation(backup_relation) }}

|

||||

-- drop the temp relations if they exist already in the database

|

||||

{{ drop_relation_if_exists(preexisting_intermediate_relation) }}

|

||||

{{ drop_relation_if_exists(preexisting_backup_relation) }}

|

||||

|

||||

-- `BEGIN` happens here:

|

||||

{{ run_hooks(pre_hooks, inside_transaction=True) }}

|

||||

|

||||

File diff suppressed because one or more lines are too long

4

core/dbt/include/starter_project/.gitignore

vendored

Normal file

4

core/dbt/include/starter_project/.gitignore

vendored

Normal file

@@ -0,0 +1,4 @@

|

||||

|

||||

target/

|

||||

dbt_modules/

|

||||

logs/

|

||||

15

core/dbt/include/starter_project/README.md

Normal file

15

core/dbt/include/starter_project/README.md

Normal file

@@ -0,0 +1,15 @@

|

||||

Welcome to your new dbt project!

|

||||

|

||||

### Using the starter project

|

||||

|

||||

Try running the following commands:

|

||||

- dbt run

|

||||

- dbt test

|

||||

|

||||

|

||||

### Resources:

|

||||

- Learn more about dbt [in the docs](https://docs.getdbt.com/docs/introduction)

|

||||

- Check out [Discourse](https://discourse.getdbt.com/) for commonly asked questions and answers

|

||||

- Join the [chat](https://community.getdbt.com/) on Slack for live discussions and support

|

||||

- Find [dbt events](https://events.getdbt.com) near you

|

||||

- Check out [the blog](https://blog.getdbt.com/) for the latest news on dbt's development and best practices

|

||||

3

core/dbt/include/starter_project/__init__.py

Normal file

3

core/dbt/include/starter_project/__init__.py

Normal file

@@ -0,0 +1,3 @@

|

||||

import os

|

||||

|

||||

PACKAGE_PATH = os.path.dirname(__file__)

|

||||

0

core/dbt/include/starter_project/data/.gitkeep

Normal file

0

core/dbt/include/starter_project/data/.gitkeep

Normal file

38

core/dbt/include/starter_project/dbt_project.yml

Normal file

38

core/dbt/include/starter_project/dbt_project.yml

Normal file

@@ -0,0 +1,38 @@

|

||||

|

||||

# Name your project! Project names should contain only lowercase characters

|

||||

# and underscores. A good package name should reflect your organization's

|

||||

# name or the intended use of these models

|

||||

name: 'my_new_project'

|

||||

version: '1.0.0'

|

||||

config-version: 2

|

||||

|

||||

# This setting configures which "profile" dbt uses for this project.

|

||||

profile: 'default'

|

||||

|

||||

# These configurations specify where dbt should look for different types of files.

|

||||

# The `source-paths` config, for example, states that models in this project can be

|

||||

# found in the "models/" directory. You probably won't need to change these!

|

||||

source-paths: ["models"]

|

||||

analysis-paths: ["analysis"]

|

||||

test-paths: ["tests"]

|

||||

data-paths: ["data"]

|

||||

macro-paths: ["macros"]

|

||||

snapshot-paths: ["snapshots"]

|

||||

|

||||

target-path: "target" # directory which will store compiled SQL files

|

||||

clean-targets: # directories to be removed by `dbt clean`

|

||||

- "target"

|

||||

- "dbt_modules"

|

||||

|

||||

|

||||

# Configuring models

|

||||

# Full documentation: https://docs.getdbt.com/docs/configuring-models

|

||||

|

||||

# In this example config, we tell dbt to build all models in the example/ directory

|

||||

# as tables. These settings can be overridden in the individual model files

|

||||

# using the `{{ config(...) }}` macro.

|

||||

models:

|

||||

my_new_project:

|

||||

# Config indicated by + and applies to all files under models/example/

|

||||

example:

|

||||

+materialized: view

|

||||

0

core/dbt/include/starter_project/macros/.gitkeep

Normal file

0

core/dbt/include/starter_project/macros/.gitkeep

Normal file

@@ -0,0 +1,27 @@

|

||||

|

||||

/*

|

||||

Welcome to your first dbt model!

|

||||

Did you know that you can also configure models directly within SQL files?

|

||||

This will override configurations stated in dbt_project.yml

|

||||

|

||||

Try changing "table" to "view" below

|

||||

*/

|

||||

|

||||

{{ config(materialized='table') }}

|

||||

|

||||

with source_data as (

|

||||

|

||||

select 1 as id

|

||||

union all

|

||||

select null as id

|

||||

|

||||

)

|

||||

|

||||

select *

|

||||

from source_data

|

||||

|

||||

/*

|

||||

Uncomment the line below to remove records with null `id` values

|

||||

*/

|

||||

|

||||

-- where id is not null

|

||||

@@ -0,0 +1,6 @@