mirror of https://github.com/dbt-labs/dbt-core

synced 2025-12-19 05:21:27 +00:00

Compare commits

387 Commits

string_sel ... fix_test_c

| SHA1 |
|---|
| 2516e83028 |
| 081f30ee2d |
| 89e4872c21 |
| 33dc970859 |
| f73202734c |
| 32bacdab4b |
| 6113c3b533 |
| 1c634af489 |
| 428cdea2dc |
| f14b55f839 |
| 5934d263b8 |
| 3860d919e6 |
| fd0b9434ae |
| efb30d0262 |
| cee0bfbfa2 |
| dc684d31d3 |
| bfdf7f01b5 |
| 2cc0579b6e |
| bfc472dc0f |
| ea4e3680ab |
| f02139956d |
| 7ec5c122e1 |
| a10ab99efc |
| 9f4398c557 |
| d60f6bc89b |
| 617eeb4ff7 |
| 5b55825638 |
| 103d524db5 |
| babd084a9b |
| 749f87397e |
| 307d47ebaf |
| 6acd4b91c1 |
| f4a9530894 |
| ab65385a16 |
| ebd761e3dc |
| 3b942ec790 |
| b373486908 |
| c8cd5502f6 |
| d6dd968c4f |
| b8d73d2197 |
| 17e57f1e0b |
| e21bf9fbc7 |
| 12e281f076 |
| a5ce658755 |
| ce30dfa82d |
| c04d1e9d5c |
| 80031d122c |
| 943b090c90 |
| 39fd53d1f9 |
| 777e7b3b6d |
| 2783fe2a9f |
| f5880cb001 |
| 26e501008a |
| 2c67e3f5c7 |
| 033596021d |
| f36c72e085 |
| fefaf7b4be |
| 91431401ad |
| 59d96c08a1 |
| f10447395b |
| c2b6222798 |
| 3a58c49184 |
| 440a5e49e2 |
| 77c10713a3 |
| 48e367ce2f |
| 934c23bf39 |
| e0febcb6c3 |
| 044a6c6ea4 |
| 8ebbc10572 |
| 7435828082 |
| 369b595e8a |
| 9a6d30f03d |
| 6bdd01d52b |
| bae9767498 |
| b0e50dedb8 |
| 96bfb3b259 |
| 909068dfa8 |
| f4c74968be |
| 0e958f3704 |
| a8b2942f93 |
| 564fe62400 |
| 5c5013191b |
| 31989b85d1 |
| 5ed4af2372 |
| 4d18e391aa |
| 2feeb5b927 |
| 2853f07875 |
| 4e6adc07a1 |
| 6a5ed4f418 |
| ef25698d3d |
| 429dcc7000 |
| ab3f994626 |
| 5f8235fcfc |
| db325d0fde |
| 8dc1f49ac7 |
| 9fe2b651ed |
| 24e4b75c35 |
| 34174abf26 |
| af778312cb |
| 280f5614ef |
| 8566a46793 |
| af3c3f4cbe |
| 034a44e625 |
| 84155fdff7 |
| 8255c913a3 |
| 4d4d17669b |
| 540a0422f5 |
| de4d7d6273 |
| 1345d95589 |
| a5bc19dd69 |
| 25b143c8cc |
| 82cca959e4 |
| d52374a0b6 |
| c71a18ca07 |
| 8d73ae2cc0 |
| 7b0c74ca3e |
| 62be9f9064 |
| 2fdc113d93 |
| b70fb543f5 |
| 31c88f9f5a |
| af3a818f12 |
| a07532d4c7 |
| fb449ca4bc |
| 4da65643c0 |
| bf64db474c |
| 344a14416d |
| be47a0c5db |
| 808b980301 |
| 3528480562 |
| 6bd263d23f |
| 2b9aa3864b |
| 81155caf88 |
| c7c057483d |
| 7f5170ae4d |
| 49b8693b11 |
| d7b0a14eb5 |
| 8996cb1e18 |
| 38f278cce0 |
| bb4e475044 |
| 4fbe36a8e9 |
| a1a40b562a |
| 3a4a1bb005 |
| 4f8c10c1aa |
| 4833348769 |
| ad07d59a78 |
| e8aaabd1d3 |
| d7d7396eeb |
| 41538860cd |
| 5c9f8a0cf0 |
| 11c997c3e9 |
| 1b1184a5e1 |
| 4ffcc43ed9 |
| 4ccaac46a6 |
| ba88b84055 |
| 9086634c8f |
| e88f1f1edb |
| 13c7486f0e |
| 8e811ba141 |
| c5d86afed6 |
| 43a0cfbee1 |
| 8567d5f302 |
| 36d1bddc5b |
| bf992680af |
| e064298dfc |
| e01a10ced5 |
| 2aa10fb1ed |
| 66f442ad76 |
| 11f1ecebcf |
| e339cb27f6 |
| bce3232b39 |
| b08970ce39 |
| 533f88ceaf |
| c8f0469a44 |
| a1fc24e532 |
| d80daa48df |
| 92aae2803f |
| 77cbbbfaf2 |
| 6c6649f912 |
| 55fbaabfda |
| 56c2518936 |
| 2b48152da6 |
| e743e23d6b |
| f846f921f2 |
| e52a599be6 |
| 99744bd318 |
| 1060035838 |
| 69cc20013e |
| 3572bfd37d |
| a6b82990f5 |
| 540c1fd9c6 |
| 46d36cd412 |
| a170764fc5 |
| f72873a1ce |
| 82496c30b1 |
| cb3c007acd |
| cb460a797c |
| 1b666d01cf |
| df24c7d2f8 |
| 133c15c0e2 |
| 116e18a19e |
| ec0af7c97b |
| a34a877737 |
| f018794465 |
| d45f5e9791 |
| 04bd0d834c |
| ed4f0c4713 |
| c747068d4a |
| aa0fbdc993 |
| b50bfa7277 |
| e91988f679 |
| 3ed1fce3fb |
| e3ea0b511a |
| c411c663de |
| 1c6f66fc14 |
| 1f927a374c |
| 07c4225aa8 |
| 42a85ac39f |
| 16e6d31ee3 |
| a6db5b436d |
| 47675f2e28 |
| 0642bbefa7 |
| 43da603d52 |
| f9e1f4d111 |
| 1508564e10 |
| c14e6f4dcc |
| 75b6a20134 |
| d82a07c221 |
| c6f7dbcaa5 |
| 82cd099e48 |
| 546c011dd8 |
| 10b33ccaf6 |
| bc01572176 |
| ccd2064722 |
| 0fb42901dd |
| a4280d7457 |
| 6966ede68b |
| 27dd14a5a2 |
| 2494301f1e |
| f13143accb |
| 26d340a917 |
| cc75cd4102 |
| cf8615b231 |
| 30f473a2b1 |
| 4618709baa |
| 16b098ea42 |
| b31c4d407a |
| 28c36cc5e2 |
| 6bfbcb842e |
| a0eade4fdd |
| ee24b7e88a |
| c9baddf9a4 |
| c5c780a685 |
| 421aaabf62 |
| 86788f034f |
| 232d3758cf |
| 71bcf9b31d |
| bf4ee4f064 |
| aa3bdfeb17 |
| ce6967d396 |
| 330065f5e0 |
| 944db82553 |
| c257361f05 |
| ffdbfb018a |
| cfa2bd6b08 |
| 51e90c3ce0 |
| d69149f43e |
| f261663f3d |
| e5948dd1d3 |
| 5f13aab7d8 |
| 292d489592 |
| 0a01f20e35 |
| 2bd08d5c4c |
| adae5126db |
| dddf1bcb76 |
| d23d4b0fd4 |
| 658f7550b3 |
| cfb50ae21e |
| 9b0a365822 |
| 97ab130619 |
| 3578fde290 |
| f382da69b8 |
| 2da3d215c6 |
| 43ed29c14c |
| 9df0283689 |
| 04b82cf4a5 |
| 274c3012b0 |
| 2b24a4934f |
| 692a423072 |
| 148f55335f |
| 2f752842a1 |
| aff72996a1 |
| 08e425bcf6 |
| 454ddc601a |
| b025f208a8 |
| b60e533b9d |
| 37af0e0d59 |
| ac1de5bce9 |
| ef7ff55e07 |
| 608db5b982 |
| 8dd69efd48 |
| 73f7fba793 |
| 867e2402d2 |
| a3b9e61967 |
| cd149b68e8 |
| cd3583c736 |
| 441f86f3ed |
| f62bea65a1 |
| 886b574987 |
| 2888bac275 |
| 35c9206916 |
| c4c5b59312 |
| f25fb4e5ac |
| 868bfec5e6 |
| e7c242213a |
| 862552ead4 |
| 9d90e0c167 |
| a281f227cd |
| 5b981278db |
| c1091ed3d1 |
| 08aed63455 |
| 90a550ee4f |
| 34869fc2a2 |
| 3deb10156d |
| 8c0e84de05 |
| 23be083c39 |
| 217aafce39 |
| 03210c63f4 |
| a90510f6f2 |
| 36d91aded6 |
| 9afe8a1297 |
| 1e6f272034 |
| a1aa2f81ef |
| 62899ef308 |
| 7f3396c002 |
| 453bc18196 |
| dbb6b57b76 |
| d7137db78c |
| 5ac4f2d80b |
| 5ba5271da9 |
| b834e3015a |
| c8721ded62 |
| 1e97372d24 |
| fd4e111784 |
| 75094e7e21 |
| 8db2d674ed |
| ffb140fab3 |
| e93543983c |
| 0d066f80ff |
| ccca1b2016 |
| fec0e31a25 |
| d246aa8f6d |
| 66bfba2258 |
| b53b4373cb |
| 0810f93883 |
| a4e696a252 |
| 0951d08f52 |
| dbf367e070 |
| 6447ba8ec8 |
| 43e260966f |
| b0e301b046 |
| c8a9ea4979 |
| afb7fc05da |
| 14124ccca8 |
| df5022dbc3 |
| 015e798a31 |
| c19125bb02 |
| 0e6ac5baf1 |
| 2c8d1b5b8c |
| f7c0c1c21a |
| 4edd98f7ce |
| df0abb7000 |
| 4f93da307f |
| a8765d54aa |
| ec0f3d22e7 |
| 009b75cab6 |
| d64668df1e |
| 8538bec99e |
| f983900597 |
| 8c71488757 |
| 7aa8c435c9 |
| daeb51253d |
| 1dd4187cd0 |
| 9e36ebdaab |
| aaa0127354 |
| e60280c4d6 |
| aef7866e29 |
| 70694e3bb9 |

@@ -1,5 +1,5 @@
[bumpversion]
current_version = 0.19.0b1
current_version = 0.19.0
parse = (?P<major>\d+)
\.(?P<minor>\d+)
\.(?P<patch>\d+)

@@ -2,12 +2,19 @@ version: 2.1
jobs:
unit:
docker: &test_only
- image: fishtownanalytics/test-container:9
- image: fishtownanalytics/test-container:11
environment:
DBT_INVOCATION_ENV: circle
DOCKER_TEST_DATABASE_HOST: "database"
TOX_PARALLEL_NO_SPINNER: 1
steps:
- checkout
- run: tox -e flake8,mypy,unit-py36,unit-py38
- run: tox -p -e py36,py37,py38
lint:
docker: *test_only
steps:
- checkout
- run: tox -e mypy,flake8 -- -v
build-wheels:
docker: *test_only
steps:
@@ -19,7 +26,7 @@ jobs:
export PYTHON_BIN="${PYTHON_ENV}/bin/python"
$PYTHON_BIN -m pip install -U pip setuptools
$PYTHON_BIN -m pip install -r requirements.txt
$PYTHON_BIN -m pip install -r dev_requirements.txt
$PYTHON_BIN -m pip install -r dev-requirements.txt
/bin/bash ./scripts/build-wheels.sh
$PYTHON_BIN ./scripts/collect-dbt-contexts.py > ./dist/context_metadata.json
$PYTHON_BIN ./scripts/collect-artifact-schema.py > ./dist/artifact_schemas.json
@@ -28,20 +35,22 @@ jobs:
- store_artifacts:
path: ./dist
destination: dist
integration-postgres-py36:
docker: &test_and_postgres
- image: fishtownanalytics/test-container:9
integration-postgres:
docker:
- image: fishtownanalytics/test-container:11
environment:
DBT_INVOCATION_ENV: circle
DOCKER_TEST_DATABASE_HOST: "database"
TOX_PARALLEL_NO_SPINNER: 1
- image: postgres
name: database
environment: &pgenv
environment:
POSTGRES_USER: "root"
POSTGRES_PASSWORD: "password"
POSTGRES_DB: "dbt"
steps:
- checkout
- run: &setupdb
- run:
name: Setup postgres
command: bash test/setup_db.sh
environment:
@@ -50,74 +59,39 @@ jobs:
PGPASSWORD: password
PGDATABASE: postgres
- run:
name: Run tests
command: tox -e integration-postgres-py36
name: Postgres integration tests
command: tox -p -e py36-postgres,py38-postgres -- -v -n4
no_output_timeout: 30m
- store_artifacts:
path: ./logs
integration-snowflake-py36:
integration-snowflake:
docker: *test_only
steps:
- checkout
- run:
name: Run tests
command: tox -e integration-snowflake-py36
no_output_timeout: 1h
name: Snowflake integration tests
command: tox -p -e py36-snowflake,py38-snowflake -- -v -n4
no_output_timeout: 30m
- store_artifacts:
path: ./logs
integration-redshift-py36:
integration-redshift:
docker: *test_only
steps:
- checkout
- run:
name: Run tests
command: tox -e integration-redshift-py36
name: Redshift integration tests
command: tox -p -e py36-redshift,py38-redshift -- -v -n4
no_output_timeout: 30m
- store_artifacts:
path: ./logs
integration-bigquery-py36:
integration-bigquery:
docker: *test_only
steps:
- checkout
- run:
name: Run tests
command: tox -e integration-bigquery-py36
- store_artifacts:
path: ./logs
integration-postgres-py38:
docker: *test_and_postgres
steps:
- checkout
- run: *setupdb
- run:
name: Run tests
command: tox -e integration-postgres-py38
- store_artifacts:
path: ./logs
integration-snowflake-py38:
docker: *test_only
steps:
- checkout
- run:
name: Run tests
command: tox -e integration-snowflake-py38
no_output_timeout: 1h
- store_artifacts:
path: ./logs
integration-redshift-py38:
docker: *test_only
steps:
- checkout
- run:
name: Run tests
command: tox -e integration-redshift-py38
- store_artifacts:
path: ./logs
integration-bigquery-py38:
docker: *test_only
steps:
- checkout
- run:
name: Run tests
command: tox -e integration-bigquery-py38
name: Bigquery integration test
command: tox -p -e py36-bigquery,py38-bigquery -- -v -n4
no_output_timeout: 30m
- store_artifacts:
path: ./logs

@@ -125,39 +99,25 @@ workflows:
version: 2
test-everything:
jobs:
- lint
- unit
- integration-postgres-py36:
- integration-postgres:
requires:
- unit
- integration-redshift-py36:
requires:
- integration-postgres-py36
- integration-bigquery-py36:
requires:
- integration-postgres-py36
- integration-snowflake-py36:
requires:
- integration-postgres-py36
- integration-postgres-py38:
- integration-redshift:
requires:
- unit
- integration-redshift-py38:
- integration-bigquery:
requires:
- integration-postgres-py38
- integration-bigquery-py38:
- unit
- integration-snowflake:
requires:
- integration-postgres-py38
- integration-snowflake-py38:
requires:
- integration-postgres-py38
- unit
- build-wheels:
requires:
- lint
- unit
- integration-postgres-py36
- integration-redshift-py36
- integration-bigquery-py36
- integration-snowflake-py36
- integration-postgres-py38
- integration-redshift-py38
- integration-bigquery-py38
- integration-snowflake-py38
- integration-postgres
- integration-redshift
- integration-bigquery
- integration-snowflake

45 .github/dependabot.yml (vendored, Normal file)

@@ -0,0 +1,45 @@
version: 2
updates:
  # python dependencies
  - package-ecosystem: "pip"
    directory: "/"
    schedule:
      interval: "daily"
    rebase-strategy: "disabled"
  - package-ecosystem: "pip"
    directory: "/core"
    schedule:
      interval: "daily"
    rebase-strategy: "disabled"
  - package-ecosystem: "pip"
    directory: "/plugins/bigquery"
    schedule:
      interval: "daily"
    rebase-strategy: "disabled"
  - package-ecosystem: "pip"
    directory: "/plugins/postgres"
    schedule:
      interval: "daily"
    rebase-strategy: "disabled"
  - package-ecosystem: "pip"
    directory: "/plugins/redshift"
    schedule:
      interval: "daily"
    rebase-strategy: "disabled"
  - package-ecosystem: "pip"
    directory: "/plugins/snowflake"
    schedule:
      interval: "daily"
    rebase-strategy: "disabled"

  # docker dependencies
  - package-ecosystem: "docker"
    directory: "/"
    schedule:
      interval: "weekly"
    rebase-strategy: "disabled"
  - package-ecosystem: "docker"
    directory: "/docker"
    schedule:
      interval: "weekly"
    rebase-strategy: "disabled"

3 .gitignore (vendored)

@@ -8,7 +8,7 @@ __pycache__/

# Distribution / packaging
.Python
env/
env*/
dbt_env/
build/
develop-eggs/
@@ -85,6 +85,7 @@ target/

# pycharm
.idea/
venv/

# AWS credentials
.aws/

49 ARCHITECTURE.md (Normal file)

@@ -0,0 +1,49 @@
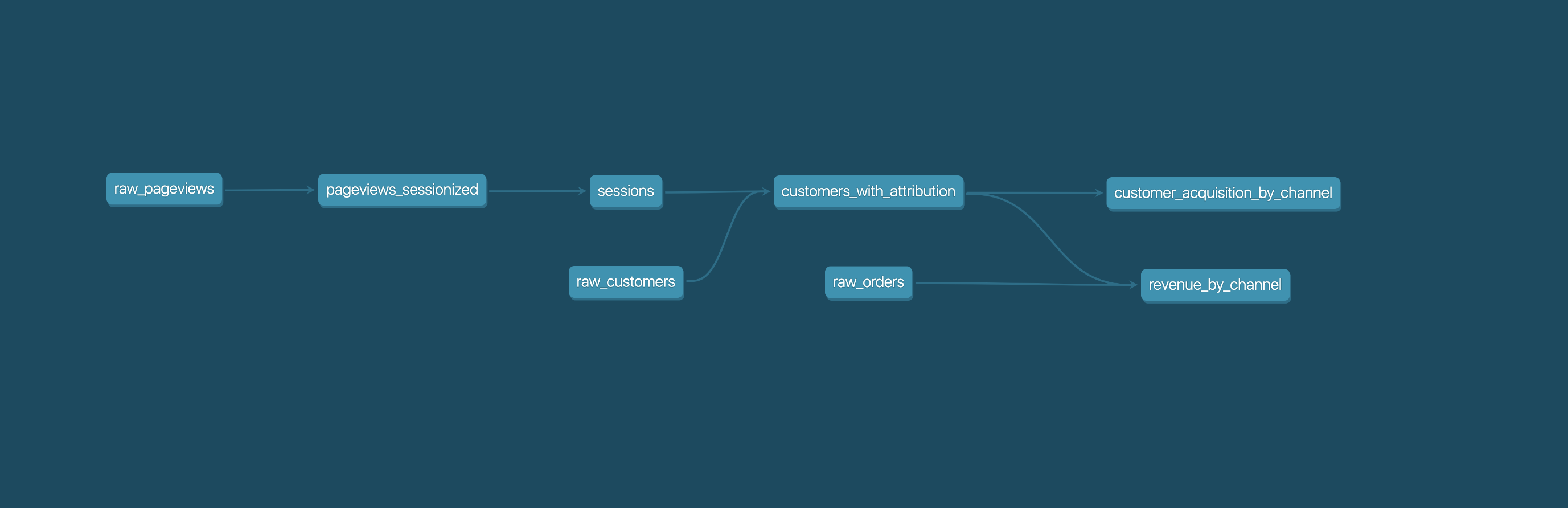

The core function of dbt is SQL compilation and execution. Users create projects of dbt resources (models, tests, seeds, snapshots, ...), defined in SQL and YAML files, and they invoke dbt to create, update, or query associated views and tables. Today, dbt makes heavy use of Jinja2 to enable the templating of SQL, and to construct a DAG (Directed Acyclic Graph) from all of the resources in a project. Users can also extend their projects by installing resources (including Jinja macros) from other projects, called "packages."
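
To make the compile step concrete, here is a minimal Python sketch, not dbt's implementation: each model's SQL is rendered with a `ref()` helper, and every `ref()` call both returns a relation name and records an upstream edge, so rendering doubles as DAG construction. To stay dependency-free it swaps Jinja2 for a single regular expression that only understands `{{ ref('...') }}`, and it orders models with the standard library's `graphlib` (Python 3.9+). The `compile_project` function, the `analytics` schema and the model names are all hypothetical.

```python
import re
from graphlib import TopologicalSorter

# A deliberately tiny stand-in for Jinja2: it only understands
# {{ ref('name') }} and exists to keep this sketch dependency-free.
REF_PATTERN = re.compile(r"\{\{\s*ref\(\s*['\"](\w+)['\"]\s*\)\s*\}\}")


def compile_project(models: dict[str, str], schema: str = "analytics"):
    """Render every model and collect its upstream dependencies.

    Returns (compiled SQL keyed by model name, model names in run order).
    """
    compiled: dict[str, str] = {}
    graph: dict[str, set[str]] = {}
    for name, raw_sql in models.items():
        deps: set[str] = set()

        def ref(match: re.Match) -> str:
            upstream = match.group(1)
            deps.add(upstream)  # rendering ref() also records a DAG edge
            return f"{schema}.{upstream}"

        compiled[name] = REF_PATTERN.sub(ref, raw_sql)
        graph[name] = deps
    # static_order() raises graphlib.CycleError if the refs form a cycle.
    order = list(TopologicalSorter(graph).static_order())
    return compiled, order


models = {
    "stg_orders": "select * from raw.orders",
    "orders": "select * from {{ ref('stg_orders') }}",
    "revenue": "select sum(amount) from {{ ref('orders') }}",
}
compiled, order = compile_project(models)
print(compiled["revenue"])  # select sum(amount) from analytics.orders
print(order)                # ['stg_orders', 'orders', 'revenue']
```

The point of the design is that dependency discovery needs no separate declaration: a model depends on whatever it references.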

## dbt-core

Most of the Python code in the repository is within the `core/dbt` directory. Currently the main subdirectories are:
- [`adapters`](core/dbt/adapters): Define base classes for behavior that is likely to differ across databases
- [`clients`](core/dbt/clients): Interface with dependencies (agate, jinja) or across operating systems
- [`config`](core/dbt/config): Reconcile user-supplied configuration from connection profiles, project files, and Jinja macros
- [`context`](core/dbt/context): Build and expose dbt-specific Jinja functionality
- [`contracts`](core/dbt/contracts): Define Python objects (dataclasses) that dbt expects to create and validate
- [`deps`](core/dbt/deps): Package installation and dependency resolution
- [`graph`](core/dbt/graph): Produce a `networkx` DAG of project resources, and select those resources given user-supplied criteria
- [`include`](core/dbt/include): The dbt "global project," which defines default implementations of Jinja2 macros
- [`parser`](core/dbt/parser): Read project files, validate them, and construct Python objects
- [`rpc`](core/dbt/rpc): Provide a remote procedure call server for invoking dbt, following the JSON-RPC 2.0 spec
- [`task`](core/dbt/task): Set forth the actions that dbt can perform when invoked
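
As a rough illustration of graph selection, the sketch below resolves selectors written with dbt's `+` graph operator (`+orders` for a node and its ancestors, `orders+` for a node and its descendants). It assumes the DAG is given as a plain dict of direct parents and walks it with a breadth-first search instead of calling `networkx` (whose `nx.ancestors` and `nx.descendants` do the same job). The `select` and `_walk` names are hypothetical, and real dbt selection supports many more methods and operators.

```python
from collections import deque


def _walk(start: str, edges: dict[str, set[str]]) -> set[str]:
    """Breadth-first search: every node reachable from start, excluding start."""
    seen: set[str] = set()
    queue = deque([start])
    while queue:
        for nxt in edges.get(queue.popleft(), set()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def select(spec: str, parents: dict[str, set[str]]) -> set[str]:
    """Resolve 'orders', '+orders', 'orders+' or '+orders+' against a DAG.

    `parents` maps every node to the set of nodes it depends on directly.
    """
    # Invert the parent map once so the graph can be walked downstream too.
    children: dict[str, set[str]] = {node: set() for node in parents}
    for node, upstream in parents.items():
        for up in upstream:
            children.setdefault(up, set()).add(node)

    name = spec.strip("+")
    if name not in children:
        raise ValueError(f"selector {spec!r} does not match any node")
    selected = {name}
    if spec.startswith("+"):
        selected |= _walk(name, parents)   # ancestors
    if spec.endswith("+"):
        selected |= _walk(name, children)  # descendants
    return selected


parents = {
    "stg_orders": set(),
    "orders": {"stg_orders"},
    "revenue": {"orders"},
    "customers": set(),
}
print(sorted(select("+orders", parents)))  # ['orders', 'stg_orders']
print(sorted(select("orders+", parents)))  # ['orders', 'revenue']
```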

### Invoking dbt

There are two supported ways of invoking dbt: from the command line and through an RPC server.

The "tasks" map to top-level dbt commands, so `dbt run` maps to `task.run.RunTask`, and so on. Some tasks are more like abstract base classes (`GraphRunnableTask`, for example), but every concrete type outside of `task/rpc` should map to a top-level command. Currently, one task executes at a time. Each task kicks off its "Runners", and those do execute in parallel; the parallelism is managed via a thread pool in `GraphRunnableTask`.
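
The task/runner split can be sketched in a few lines of Python. This is a hypothetical model, not dbt's `GraphRunnableTask`: a command name maps to a task class, the task walks the DAG with `graphlib.TopologicalSorter`, and every node whose parents have finished is handed to a `ThreadPoolExecutor`, so independent nodes run in parallel while dependent ones still run in order. The `Runner`, `TASKS` and `invoke` names are illustrative, and the runner returns a status string instead of executing SQL.

```python
from concurrent.futures import FIRST_COMPLETED, Future, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter


class Runner:
    """Hypothetical per-node runner; a real one would compile and execute SQL."""

    def __init__(self, node: str) -> None:
        self.node = node

    def run(self) -> str:
        return f"OK {self.node}"


class GraphRunnableTask:
    """Walks the DAG and runs every ready node on a shared thread pool."""

    def __init__(self, graph: dict[str, set[str]], threads: int = 4) -> None:
        self.graph = graph  # node -> set of upstream nodes
        self.threads = threads

    def execute(self) -> list[str]:
        sorter = TopologicalSorter(self.graph)
        sorter.prepare()
        results: list[str] = []
        pending: dict[Future, str] = {}
        with ThreadPoolExecutor(max_workers=self.threads) as pool:
            while sorter.is_active():
                # Submit every node whose upstream nodes have all finished.
                for node in sorter.get_ready():
                    pending[pool.submit(Runner(node).run)] = node
                # Block until at least one running node completes.
                done, _ = wait(pending, return_when=FIRST_COMPLETED)
                for future in done:
                    results.append(future.result())
                    sorter.done(pending.pop(future))
        return results


class RunTask(GraphRunnableTask):
    """Concrete task behind the (hypothetical) `run` command."""


# Hypothetical command-to-task table, in the spirit of `dbt run` => RunTask.
TASKS = {"run": RunTask}


def invoke(command: str, graph: dict[str, set[str]]) -> list[str]:
    return TASKS[command](graph).execute()


chain = {"stg_orders": set(), "orders": {"stg_orders"}, "revenue": {"orders"}}
print(invoke("run", chain))  # ['OK stg_orders', 'OK orders', 'OK revenue']
```

With a diamond-shaped DAG (`a` feeding `b` and `c`, which both feed `d`), `b` and `c` may run concurrently, but `d` is only submitted after both have been marked done.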

core/dbt/include/index.html
This is the docs website code. It comes from the dbt-docs repository and is generated when a release is packaged.

## Adapters

dbt uses an adapter-plugin pattern to extend support to different databases, warehouses, query engines, etc. The four core adapters in the main repository, contained within the [`plugins`](plugins) subdirectory, are Postgres, Redshift, Snowflake, and BigQuery. Other warehouses use adapter plugins defined in separate repositories (e.g. [dbt-spark](https://github.com/fishtown-analytics/dbt-spark), [dbt-presto](https://github.com/fishtown-analytics/dbt-presto)).

Each adapter is a mix of Python, Jinja2, and SQL. The adapter code also makes heavy use of Jinja2 to wrap modular chunks of SQL functionality, define default implementations, and allow plugins to override them.
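
Here is a Python model of how default macro implementations and adapter overrides can coexist. It is a sketch under stated assumptions: macros live in a plain dict, the `<adapter>__<macro>` / `default__<macro>` naming follows the convention dbt uses for adapter-dispatched macros, and `search_order` (most specific adapter first) is an illustrative way of letting an adapter such as Redshift fall back to Postgres implementations. The registry contents and SQL strings are made up for the example.

```python
from typing import Callable

Macro = Callable[..., str]

# Hypothetical registry: the global project ships default__ implementations,
# and adapter plugins add <adapter>__ overrides. The SQL is illustrative only.
MACROS: dict[str, Macro] = {
    "default__current_timestamp": lambda: "current_timestamp",
    "snowflake__current_timestamp": lambda: "current_timestamp()",
    "default__concat": lambda a, b: f"concat({a}, {b})",
    "postgres__concat": lambda a, b: f"{a} || {b}",
}


def dispatch(name: str, search_order: list[str]) -> Macro:
    """Return the most specific implementation of the macro `name`.

    `search_order` lists adapter names from most to least specific,
    e.g. ["redshift", "postgres"]; "default" is always tried last.
    """
    for prefix in [*search_order, "default"]:
        macro = MACROS.get(f"{prefix}__{name}")
        if macro is not None:
            return macro
    raise KeyError(f"no implementation found for macro {name!r}")


print(dispatch("current_timestamp", ["snowflake"])())          # current_timestamp()
print(dispatch("current_timestamp", ["bigquery"])())           # current_timestamp
print(dispatch("concat", ["redshift", "postgres"])("a", "b"))  # a || b
```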

Each adapter plugin is a standalone Python package that includes:

- `dbt/include/[name]`: A "sub-global" dbt project, of YAML and SQL files, that reimplements Jinja macros to use the adapter's supported SQL syntax
- `dbt/adapters/[name]`: Python modules that inherit, and optionally reimplement, the base adapter classes defined in dbt-core
- `setup.py`

The Postgres adapter code is the most central, and many of its implementations are used as the defaults defined in the dbt-core global project. The further a data technology is from Postgres, the more its adapter plugin may need to reimplement.
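
The Python side of a plugin can be pictured as ordinary class inheritance. In this hypothetical sketch (the `Sketch*Adapter` classes are not dbt's real adapter classes), the base class carries Postgres-style defaults, the Redshift adapter inherits everything from Postgres, and the BigQuery adapter, being further from Postgres, overrides identifier quoting: Postgres and Redshift quote identifiers with double quotes, while BigQuery uses backticks.

```python
class SketchBaseAdapter:
    """Hypothetical base class whose defaults follow Postgres conventions."""

    type_name = "base"
    quote_char = '"'  # ANSI/Postgres-style identifier quoting

    def quote(self, identifier: str) -> str:
        return f"{self.quote_char}{identifier}{self.quote_char}"

    def render_relation(self, database: str, schema: str, name: str) -> str:
        return ".".join(self.quote(part) for part in (database, schema, name))


class SketchPostgresAdapter(SketchBaseAdapter):
    type_name = "postgres"  # the defaults already fit, so nothing is overridden


class SketchRedshiftAdapter(SketchPostgresAdapter):
    type_name = "redshift"  # close to Postgres, so it inherits everything


class SketchBigQueryAdapter(SketchBaseAdapter):
    type_name = "bigquery"
    quote_char = "`"  # further from Postgres: quoting is reimplemented

    def render_relation(self, database: str, schema: str, name: str) -> str:
        # Illustrative override: quote the whole project.dataset.table path once.
        return self.quote(f"{database}.{schema}.{name}")


print(SketchRedshiftAdapter().render_relation("dev", "analytics", "orders"))
# "dev"."analytics"."orders"
print(SketchBigQueryAdapter().render_relation("my-project", "analytics", "orders"))
# `my-project.analytics.orders`
```

The design mirrors the paragraph above: start from a Postgres-flavoured default and override only what differs.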

## Testing dbt

The [`test/`](test/) subdirectory includes unit and integration tests that run as continuous integration checks against open pull requests. Unit tests call specific Python functions with mock inputs and check their outputs. Integration tests perform end-to-end dbt invocations against real adapters (Postgres, Redshift, Snowflake, BigQuery) and assert that the results match expectations. See [the contributing guide](CONTRIBUTING.md) for a step-by-step walkthrough of setting up a local development and testing environment.
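
A unit test in this style might look like the following. It is a generic `unittest` example, not taken from dbt's test suite: `relation_names` is a hypothetical helper, and `unittest.mock.Mock` stands in for a real adapter so the test runs without a database.

```python
import unittest
from unittest import mock


def relation_names(adapter, schema: str) -> list[str]:
    """Hypothetical helper under test: sorted, lower-cased relation names."""
    return sorted(name.lower() for name in adapter.list_relations(schema))


class RelationNamesTest(unittest.TestCase):
    def test_sorts_and_lowercases(self) -> None:
        # A Mock stands in for the adapter, so no database connection is needed.
        adapter = mock.Mock()
        adapter.list_relations.return_value = ["Orders", "customers"]

        self.assertEqual(relation_names(adapter, "analytics"), ["customers", "orders"])
        # The mock also records how it was called, which the test can assert on.
        adapter.list_relations.assert_called_once_with("analytics")
```

Saved in a file whose name starts with `test`, it can be run with `python -m unittest`.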

## Everything else

- [docker](docker/): All dbt versions are published as Docker images on DockerHub. This subfolder contains the `Dockerfile` (constant) and `requirements.txt` (one for each version).
- [etc](etc/): Images for README
- [scripts](scripts/): Helper scripts for testing, releasing, and producing JSON schemas. These are not included in distributions of dbt, nor are they rigorously tested; they're just handy tools for the dbt maintainers :)

190

CHANGELOG.md

190

CHANGELOG.md

@@ -1,51 +1,194 @@

|

||||

## dbt 0.19.0 (Release TBD)

|

||||

## dbt 0.20.0 (Release TBD)

|

||||

|

||||

### Fixes

|

||||

- Fix exit code from dbt debug not returning a failure when one of the tests fail ([#3017](https://github.com/fishtown-analytics/dbt/issues/3017))

|

||||

- Auto-generated CTEs in tests and ephemeral models have lowercase names to comply with dbt coding conventions ([#3027](https://github.com/fishtown-analytics/dbt/issues/3027), [#3028](https://github.com/fishtown-analytics/dbt/issues/3028))

|

||||

- Fix incorrect error message when a selector does not match any node [#3036](https://github.com/fishtown-analytics/dbt/issues/3036))

|

||||

- Fix variable `_dbt_max_partition` declaration and initialization for BigQuery incremental models ([#2940](https://github.com/fishtown-analytics/dbt/issues/2940), [#2976](https://github.com/fishtown-analytics/dbt/pull/2976))

|

||||

- Moving from 'master' to 'HEAD' default branch in git ([#3057](https://github.com/fishtown-analytics/dbt/issues/3057), [#3104](https://github.com/fishtown-analytics/dbt/issues/3104), [#3117](https://github.com/fishtown-analytics/dbt/issues/3117)))

|

||||

- Requirement on `dataclasses` is relaxed to be between `>=0.6,<0.9` allowing dbt to cohabit with other libraries which required higher versions. ([#3150](https://github.com/fishtown-analytics/dbt/issues/3150), [#3151](https://github.com/fishtown-analytics/dbt/pull/3151))

|

||||

- Add feature to add `_n` alias to same column names in SQL query ([#3147](https://github.com/fishtown-analytics/dbt/issues/3147), [#3158](https://github.com/fishtown-analytics/dbt/pull/3158))

|

||||

- Raise a proper error message if dbt parses a macro twice due to macro duplication or misconfiguration. ([#2449](https://github.com/fishtown-analytics/dbt/issues/2449), [#3165](https://github.com/fishtown-analytics/dbt/pull/3165))

|

||||

- Fix exposures missing in graph context variable. ([#3241](https://github.com/fishtown-analytics/dbt/issues/3241))

|

||||

- Ensure that schema test macros are properly processed ([#3229](https://github.com/fishtown-analytics/dbt/issues/3229), [#3272](https://github.com/fishtown-analytics/dbt/pull/3272))

|

||||

|

||||

### Features

|

||||

- Added macro get_partitions_metadata(table) to return partition metadata for partitioned table [#2596](https://github.com/fishtown-analytics/dbt/pull/2596)

|

||||

- Added native python 're' module for regex in jinja templates [#2851](https://github.com/fishtown-analytics/dbt/pull/2851)

|

||||

- Support commit hashes in dbt deps package revision ([#3268](https://github.com/fishtown-analytics/dbt/issues/3268), [#3270](https://github.com/fishtown-analytics/dbt/pull/3270))

|

||||

- Add optional configs for `require_partition_filter` and `partition_expiration_days` in BigQuery ([#1843](https://github.com/fishtown-analytics/dbt/issues/1843), [#2928](https://github.com/fishtown-analytics/dbt/pull/2928))

|

||||

- Fix for EOL SQL comments prevent entire line execution ([#2731](https://github.com/fishtown-analytics/dbt/issues/2731), [#2974](https://github.com/fishtown-analytics/dbt/pull/2974))

|

||||

- Add optional `merge_update_columns` config to specify columns to update for `merge` statements in BigQuery and Snowflake ([#1862](https://github.com/fishtown-analytics/dbt/issues/1862), [#3100](https://github.com/fishtown-analytics/dbt/pull/3100))

|

||||

- Use query comment JSON as job labels for BigQuery adapter when `query-comment.job-label` is set to `true` ([#2483](https://github.com/fishtown-analytics/dbt/issues/2483)), ([#3145](https://github.com/fishtown-analytics/dbt/pull/3145))

|

||||

- Set application_name for Postgres connections ([#885](https://github.com/fishtown-analytics/dbt/issues/885), [#3182](https://github.com/fishtown-analytics/dbt/pull/3182))

|

||||

- Support disabling schema tests, and configuring tests from `dbt_project.yml` ([#3252](https://github.com/fishtown-analytics/dbt/issues/3252),

|

||||

[#3253](https://github.com/fishtown-analytics/dbt/issues/3253), [#3257](https://github.com/fishtown-analytics/dbt/pull/3257))

|

||||

- Add Jinja tag for tests ([#1173](https://github.com/fishtown-analytics/dbt/issues/1173), [#3261](https://github.com/fishtown-analytics/dbt/pull/3261))

|

||||

|

||||

### Under the hood

|

||||

- Add dependabot configuration for alerting maintainers about keeping dependencies up to date and secure. ([#3061](https://github.com/fishtown-analytics/dbt/issues/3061), [#3062](https://github.com/fishtown-analytics/dbt/pull/3062))

|

||||

- Update script to collect and write json schema for dbt artifacts ([#2870](https://github.com/fishtown-analytics/dbt/issues/2870), [#3065](https://github.com/fishtown-analytics/dbt/pull/3065))

|

||||

- Relax Google Cloud dependency pins to major versions. ([#3156](https://github.com/fishtown-analytics/dbt/pull/3156)

|

||||

- Bump `snowflake-connector-python` and releated dependencies, support Python 3.9 ([#2985](https://github.com/fishtown-analytics/dbt/issues/2985), [#3148](https://github.com/fishtown-analytics/dbt/pull/3148))

|

||||

- General development environment clean up and improve experience running tests locally ([#3194](https://github.com/fishtown-analytics/dbt/issues/3194), [#3204](https://github.com/fishtown-analytics/dbt/pull/3204), [#3228](https://github.com/fishtown-analytics/dbt/pull/3228))

|

||||

- Add a new materialization for tests, update data tests to use test materialization when executing. ([#3154](https://github.com/fishtown-analytics/dbt/issues/3154), [#3181](https://github.com/fishtown-analytics/dbt/pull/3181))

|

||||

- Switch from externally storing parsing state in ParseResult object to using Manifest ([#3163](http://github.com/fishtown-analytics/dbt/issues/3163), [#3219](https://github.com/fishtown-analytics/dbt/pull/3219))

|

||||

- Switch from loading project files in separate parsers to loading in one place([#3244](http://github.com/fishtown-analytics/dbt/issues/3244), [#3248](https://github.com/fishtown-analytics/dbt/pull/3248))

|

||||

|

||||

Contributors:

|

||||

- [@yu-iskw](https://github.com/yu-iskw) ([#2928](https://github.com/fishtown-analytics/dbt/pull/2928))

|

||||

- [@sdebruyn](https://github.com/sdebruyn) / [@lynxcare](https://github.com/lynxcare) ([#3018](https://github.com/fishtown-analytics/dbt/pull/3018))

|

||||

- [@rvacaru](https://github.com/rvacaru) ([#2974](https://github.com/fishtown-analytics/dbt/pull/2974))

|

||||

- [@NiallRees](https://github.com/NiallRees) ([#3028](https://github.com/fishtown-analytics/dbt/pull/3028))

|

||||

- [ran-eh](https://github.com/ran-eh) ([#3036](https://github.com/fishtown-analytics/dbt/pull/3036))

|

||||

- [@pcasteran](https://github.com/pcasteran) ([#2976](https://github.com/fishtown-analytics/dbt/pull/2976))

|

||||

- [@VasiliiSurov](https://github.com/VasiliiSurov) ([#3104](https://github.com/fishtown-analytics/dbt/pull/3104))

|

||||

- [@jmcarp](https://github.com/jmcarp) ([#3145](https://github.com/fishtown-analytics/dbt/pull/3145))

|

||||

- [@bastienboutonnet](https://github.com/bastienboutonnet) ([#3151](https://github.com/fishtown-analytics/dbt/pull/3151))

|

||||

- [@max-sixty](https://github.com/max-sixty) ([#3156](https://github.com/fishtown-analytics/dbt/pull/3156)

|

||||

- [@prratek](https://github.com/prratek) ([#3100](https://github.com/fishtown-analytics/dbt/pull/3100))

|

||||

- [@techytushar](https://github.com/techytushar) ([#3158](https://github.com/fishtown-analytics/dbt/pull/3158))

|

||||

- [@cgopalan](https://github.com/cgopalan) ([#3165](https://github.com/fishtown-analytics/dbt/pull/3165), [#3182](https://github.com/fishtown-analytics/dbt/pull/3182))

|

||||

- [@fux](https://github.com/fuchsst) ([#3241](https://github.com/fishtown-analytics/dbt/issues/3241))

|

||||

- [@dmateusp](https://github.com/dmateusp) ([#3270](https://github.com/fishtown-analytics/dbt/pull/3270))

|

||||

|

||||

## dbt 0.19.1 (March 31, 2021)

|

||||

|

||||

## dbt 0.19.1rc2 (March 25, 2021)

|

||||

|

||||

|

||||

### Fixes

|

||||

- Pass service-account scopes to gcloud-based oauth ([#3040](https://github.com/fishtown-analytics/dbt/issues/3040), [#3041](https://github.com/fishtown-analytics/dbt/pull/3041))

|

||||

|

||||

Contributors:

|

||||

- [@yu-iskw](https://github.com/yu-iskw) ([#3041](https://github.com/fishtown-analytics/dbt/pull/3041))

|

||||

|

||||

## dbt 0.19.1rc1 (March 15, 2021)

|

||||

|

||||

### Under the hood

- Update code to use Mashumaro 2.0 ([#3138](https://github.com/fishtown-analytics/dbt/pull/3138))
- Pin `agate<1.6.2` to avoid installation errors relating to its new dependency `PyICU` ([#3160](https://github.com/fishtown-analytics/dbt/issues/3160), [#3161](https://github.com/fishtown-analytics/dbt/pull/3161))
- Add an event to track resource counts ([#3050](https://github.com/fishtown-analytics/dbt/issues/3050), [#3157](https://github.com/fishtown-analytics/dbt/pull/3157))

### Fixes

- Fix compiled sql for ephemeral models ([#3139](https://github.com/fishtown-analytics/dbt/pull/3139), [#3056](https://github.com/fishtown-analytics/dbt/pull/3056))

## dbt 0.19.1b2 (February 15, 2021)

## dbt 0.19.1b1 (February 12, 2021)

### Fixes

- On BigQuery, fix regressions for `insert_overwrite` incremental strategy with `int64` and `timestamp` partition columns ([#3063](https://github.com/fishtown-analytics/dbt/issues/3063), [#3095](https://github.com/fishtown-analytics/dbt/issues/3095), [#3098](https://github.com/fishtown-analytics/dbt/issues/3098))

### Under the hood

- Bump werkzeug upper bound dependency to `<v2.0` ([#3011](https://github.com/fishtown-analytics/dbt/pull/3011))
- Performance fixes for many different things ([#2862](https://github.com/fishtown-analytics/dbt/issues/2862), [#3034](https://github.com/fishtown-analytics/dbt/pull/3034))

Contributors:

- [@Bl3f](https://github.com/Bl3f) ([#3011](https://github.com/fishtown-analytics/dbt/pull/3011))

## dbt 0.19.0 (January 27, 2021)

## dbt 0.19.0rc3 (January 27, 2021)

### Under the hood

- Clean up Docker resources; use a single `docker/Dockerfile` for publishing dbt as a Docker image ([dbt-release#3](https://github.com/fishtown-analytics/dbt-release/issues/3), [#3019](https://github.com/fishtown-analytics/dbt/pull/3019))

## dbt 0.19.0rc2 (January 14, 2021)

### Fixes

- Fix regression with defining exposures and other resources with the same name ([#2969](https://github.com/fishtown-analytics/dbt/issues/2969), [#3009](https://github.com/fishtown-analytics/dbt/pull/3009))
- Remove ellipses printed while parsing ([#2971](https://github.com/fishtown-analytics/dbt/issues/2971), [#2996](https://github.com/fishtown-analytics/dbt/pull/2996))

### Under the hood

- Rewrite the `snapshot_merge_sql` macro to make it compatible with other SQL dialects ([#3003](https://github.com/fishtown-analytics/dbt/pull/3003))
- Rewrite logic in `snapshot_check_strategy()` to make it compatible with other SQL dialects ([#3000](https://github.com/fishtown-analytics/dbt/pull/3000), [#3001](https://github.com/fishtown-analytics/dbt/pull/3001))
- Remove version restrictions on `botocore` ([#3006](https://github.com/fishtown-analytics/dbt/pull/3006))
- Include `exposures` in start-of-invocation stdout summary: `Found ...` ([#3007](https://github.com/fishtown-analytics/dbt/pull/3007), [#3008](https://github.com/fishtown-analytics/dbt/pull/3008))

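The `snapshot_check_strategy()` rewrite above is about emitting change-detection SQL that works across dialects. The Python sketch below illustrates the general idea only; it is not dbt's Jinja macro, and the `check_cols_changed_predicate` helper and the `snapshotted_data`/`current_data` aliases are hypothetical. It builds a NULL-safe predicate from plain `!=` and `IS NULL` tests rather than relying on `IS DISTINCT FROM`, which not every warehouse supports.

```python
from typing import List


def check_cols_changed_predicate(
    check_cols: List[str],
    snapshotted: str = "snapshotted_data",
    current: str = "current_data",
) -> str:
    """Build a SQL predicate that is true when any of `check_cols` changed.

    Plain `a != b` yields NULL (not true) when either side is NULL, so each
    column also gets explicit IS NULL checks to catch NULL <-> value changes
    without dialect-specific operators such as `IS DISTINCT FROM`.
    """
    if not check_cols:
        raise ValueError("check_cols must name at least one column")
    clauses = []
    for col in check_cols:
        old, new = f"{snapshotted}.{col}", f"{current}.{col}"
        clauses.append(
            f"({old} != {new}"
            f" or ({old} is null and {new} is not null)"
            f" or ({old} is not null and {new} is null))"
        )
    # Any single changed column marks the row as changed.
    return "(" + " or ".join(clauses) + ")"


print(check_cols_changed_predicate(["status", "amount"]))
```

Spelling out the NULL cases makes the generated SQL longer, but it only uses operators that any ANSI-ish dialect understands.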
Contributors:

- [@mikaelene](https://github.com/mikaelene) ([#3003](https://github.com/fishtown-analytics/dbt/pull/3003))
- [@dbeatty10](https://github.com/dbeatty10) ([dbt-adapter-tests#10](https://github.com/fishtown-analytics/dbt-adapter-tests/pull/10))
- [@swanderz](https://github.com/swanderz) ([#3000](https://github.com/fishtown-analytics/dbt/pull/3000))
- [@stpierre](https://github.com/stpierre) ([#3006](https://github.com/fishtown-analytics/dbt/pull/3006))

## dbt 0.19.0rc1 (December 29, 2020)

### Breaking changes

- Defer if and only if the upstream reference does not exist in the current environment namespace ([#2909](https://github.com/fishtown-analytics/dbt/issues/2909), [#2946](https://github.com/fishtown-analytics/dbt/pull/2946))
- Rationalize run result status reporting and clean up artifact schema ([#2493](https://github.com/fishtown-analytics/dbt/issues/2493), [#2943](https://github.com/fishtown-analytics/dbt/pull/2943))
- Add adapter-specific query execution info to run results and source freshness results artifacts. Statement call blocks return `response` instead of `status`, and the adapter method `get_status` is now `get_response` ([#2747](https://github.com/fishtown-analytics/dbt/issues/2747), [#2961](https://github.com/fishtown-analytics/dbt/pull/2961))

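To make the new deferral rule concrete, here is a simplified Python sketch, assuming the decision is made per referenced node by checking whether the current environment's relation already exists in the warehouse. `Relation`, `resolve_deferred_ref`, and the manifest dictionaries are hypothetical stand-ins, not dbt internals.

```python
from dataclasses import dataclass
from typing import Dict, Set


@dataclass(frozen=True)
class Relation:
    """Hypothetical stand-in for a fully qualified warehouse relation."""
    database: str
    schema: str
    identifier: str


def resolve_deferred_ref(
    unique_id: str,
    current: Dict[str, Relation],
    deferred: Dict[str, Relation],
    existing: Set[Relation],
) -> Relation:
    """Choose the relation a ref() resolves to when deferral is enabled.

    The current environment's relation wins whenever it already exists; only
    a missing relation falls back to the deferred environment (e.g. production).
    """
    own = current[unique_id]
    if own in existing or unique_id not in deferred:
        return own
    return deferred[unique_id]


dev = {
    "model.proj.orders": Relation("analytics", "dbt_dev", "orders"),
    "model.proj.customers": Relation("analytics", "dbt_dev", "customers"),
}
prod = {
    "model.proj.orders": Relation("analytics", "prod", "orders"),
    "model.proj.customers": Relation("analytics", "prod", "customers"),
}
built_in_dev = {Relation("analytics", "dbt_dev", "orders")}
print(resolve_deferred_ref("model.proj.customers", dev, prod, built_in_dev))
```

In this example `orders` resolves to the development relation because it has been built there, while `customers` falls back to production.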
### Features

- Added macro `get_partitions_metadata(table)` to return partition metadata for BigQuery partitioned tables ([#2552](https://github.com/fishtown-analytics/dbt/pull/2552), [#2596](https://github.com/fishtown-analytics/dbt/pull/2596))
- Added `--defer` flag for `dbt test` as well ([#2701](https://github.com/fishtown-analytics/dbt/issues/2701), [#2954](https://github.com/fishtown-analytics/dbt/pull/2954))
- Added Python's native `re` module for regex in Jinja templates ([#1755](https://github.com/fishtown-analytics/dbt/issues/1755), [#2851](https://github.com/fishtown-analytics/dbt/pull/2851))
- Store resolved node names in manifest ([#2647](https://github.com/fishtown-analytics/dbt/issues/2647), [#2837](https://github.com/fishtown-analytics/dbt/pull/2837))
- Save selectors dictionary to manifest, allow descriptions ([#2693](https://github.com/fishtown-analytics/dbt/issues/2693), [#2866](https://github.com/fishtown-analytics/dbt/pull/2866))
- Normalize cli-style-strings in manifest selectors dictionary ([#2879](https://github.com/fishtown-analytics/dbt/issues/2879), [#2895](https://github.com/fishtown-analytics/dbt/pull/2895))
- Hourly, monthly and yearly partitions available in BigQuery ([#2476](https://github.com/fishtown-analytics/dbt/issues/2476), [#2903](https://github.com/fishtown-analytics/dbt/pull/2903))
- Allow BigQuery to default to the environment's default project ([#2828](https://github.com/fishtown-analytics/dbt/pull/2828), [#2908](https://github.com/fishtown-analytics/dbt/pull/2908))
- Rationalize run result status reporting and clean up artifact schema ([#2493](https://github.com/fishtown-analytics/dbt/issues/2493), [#2943](https://github.com/fishtown-analytics/dbt/pull/2943))

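The hourly, monthly, and yearly partitioning entry corresponds to BigQuery's time-unit `PARTITION BY` DDL. Below is a minimal Python sketch of that mapping, assuming a partition config with `field`, `data_type`, and `granularity` keys; `render_partition_by` is hypothetical and is not the dbt-bigquery macro.

```python
# Granularities each column type can be truncated to when used for
# time-unit column partitioning in a BigQuery PARTITION BY clause.
ALLOWED_GRANULARITIES = {
    "timestamp": {"hour", "day", "month", "year"},
    "datetime": {"hour", "day", "month", "year"},
    "date": {"day", "month", "year"},
}
TRUNC_FUNCTIONS = {
    "timestamp": "timestamp_trunc",
    "datetime": "datetime_trunc",
    "date": "date_trunc",
}


def render_partition_by(field: str, data_type: str = "date", granularity: str = "day") -> str:
    """Render a PARTITION BY clause for a time-unit partitioned table."""
    data_type, granularity = data_type.lower(), granularity.lower()
    if data_type not in ALLOWED_GRANULARITIES:
        raise ValueError(f"unsupported partition data_type: {data_type!r}")
    if granularity not in ALLOWED_GRANULARITIES[data_type]:
        raise ValueError(f"{data_type} columns cannot be partitioned by {granularity!r}")
    if data_type == "date" and granularity == "day":
        # A DATE column is already daily, so it can be used as-is.
        return f"partition by {field}"
    return f"partition by {TRUNC_FUNCTIONS[data_type]}({field}, {granularity})"


print(render_partition_by("created_at", data_type="timestamp", granularity="hour"))
```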
### Fixes

- Respect --project-dir in dbt clean command ([#2840](https://github.com/fishtown-analytics/dbt/issues/2840), [#2841](https://github.com/fishtown-analytics/dbt/pull/2841))
- Fix Redshift adapter `get_columns_in_relation` macro to push schema filter down to the `svv_external_columns` view ([#2855](https://github.com/fishtown-analytics/dbt/issues/2854))
- Add `unixodbc-dev` package to testing docker image ([#2859](https://github.com/fishtown-analytics/dbt/pull/2859))
- Respect `--project-dir` in `dbt clean` command ([#2840](https://github.com/fishtown-analytics/dbt/issues/2840), [#2841](https://github.com/fishtown-analytics/dbt/pull/2841))
- Fix Redshift adapter `get_columns_in_relation` macro to push schema filter down to the `svv_external_columns` view ([#2854](https://github.com/fishtown-analytics/dbt/issues/2854), [#2855](https://github.com/fishtown-analytics/dbt/pull/2855))
- Increased the supported relation name length in Postgres from 29 to 51 ([#2850](https://github.com/fishtown-analytics/dbt/pull/2850))
- Fix `dbt list` command always returning `0` as its exit code ([#2886](https://github.com/fishtown-analytics/dbt/issues/2886), [#2892](https://github.com/fishtown-analytics/dbt/issues/2892))
- Set default `materialized` for test node configs to `test` ([#2806](https://github.com/fishtown-analytics/dbt/issues/2806), [#2902](https://github.com/fishtown-analytics/dbt/pull/2902))
- Allow `docs` blocks in `exposure` descriptions ([#2913](https://github.com/fishtown-analytics/dbt/issues/2913), [#2920](https://github.com/fishtown-analytics/dbt/pull/2920))
- Use original file path instead of absolute path as checksum for big seeds ([#2927](https://github.com/fishtown-analytics/dbt/issues/2927), [#2939](https://github.com/fishtown-analytics/dbt/pull/2939))
- Fix KeyError if deferring to a manifest with a since-deleted source, ephemeral model, or test ([#2875](https://github.com/fishtown-analytics/dbt/issues/2875), [#2958](https://github.com/fishtown-analytics/dbt/pull/2958))

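Here is a sketch of why a project-relative path makes a more stable checksum than an absolute one for large seeds: the same project checked out in two different directories yields the same value. The size cutoff `MAX_HASHED_SEED_BYTES`, the `seed_checksum` helper, and the `(name, checksum)` tuple shape are assumptions for illustration, not dbt's actual values.

```python
import hashlib
from pathlib import Path
from typing import Tuple

# Hypothetical cutoff above which hashing the file contents is skipped.
MAX_HASHED_SEED_BYTES = 1024 * 1024


def seed_checksum(project_root: Path, original_file_path: str) -> Tuple[str, str]:
    """Return a (name, checksum) pair used to detect whether a seed changed.

    Small seeds are hashed by content. Large seeds fall back to their path
    relative to the project, which stays the same across checkouts, unlike
    an absolute path.
    """
    full_path = project_root / original_file_path
    if full_path.stat().st_size > MAX_HASHED_SEED_BYTES:
        return ("path", original_file_path)
    return ("sha256", hashlib.sha256(full_path.read_bytes()).hexdigest())
```

The trade-off is that edits to a large seed are no longer detected by its checksum, in exchange for not re-reading big files on every parse.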
### Under the hood

- Bump hologram version to 0.0.11. Add scripts/dtr.py ([#2888](https://github.com/fishtown-analytics/dbt/issues/2888), [#2889](https://github.com/fishtown-analytics/dbt/pull/2889))
- Add `unixodbc-dev` package to testing docker image ([#2859](https://github.com/fishtown-analytics/dbt/pull/2859))
- Add event tracking for project parser/load times ([#2823](https://github.com/fishtown-analytics/dbt/issues/2823), [#2893](https://github.com/fishtown-analytics/dbt/pull/2893))
- Bump `cryptography` version to `>= 3.2` and bump snowflake connector to `2.3.6` ([#2896](https://github.com/fishtown-analytics/dbt/issues/2896), [#2922](https://github.com/fishtown-analytics/dbt/issues/2922))
- Widen supported Google Cloud library dependencies ([#2794](https://github.com/fishtown-analytics/dbt/pull/2794), [#2877](https://github.com/fishtown-analytics/dbt/pull/2877))
- Bump `hologram` version to `0.0.11`. Add `scripts/dtr.py` ([#2888](https://github.com/fishtown-analytics/dbt/issues/2888), [#2889](https://github.com/fishtown-analytics/dbt/pull/2889))
- Bump `hologram` version to `0.0.12`. Add testing support for Python 3.9 ([#2822](https://github.com/fishtown-analytics/dbt/issues/2822), [#2960](https://github.com/fishtown-analytics/dbt/pull/2960))
- Bump the version requirements for `boto3` in dbt-redshift to the upper limit `1.16` to match dbt-redshift and the `snowflake-python-connector` as of version `2.3.6` ([#2931](https://github.com/fishtown-analytics/dbt/issues/2931), [#2963](https://github.com/fishtown-analytics/dbt/issues/2963))

### Docs

- Fixed issue where data tests with tags were not showing up in graph viz ([docs#147](https://github.com/fishtown-analytics/dbt-docs/issues/147), [docs#157](https://github.com/fishtown-analytics/dbt-docs/pull/157))

Contributors:

- [@feluelle](https://github.com/feluelle) ([#2841](https://github.com/fishtown-analytics/dbt/pull/2841))
- [ran-eh](https://github.com/ran-eh) [#2596](https://github.com/fishtown-analytics/dbt/pull/2596)
- [@hochoy](https://github.com/hochoy) [#2851](https://github.com/fishtown-analytics/dbt/pull/2851)
- [@brangisom](https://github.com/brangisom) [#2855](https://github.com/fishtown-analytics/dbt/pull/2855)
- [@ran-eh](https://github.com/ran-eh) ([#2596](https://github.com/fishtown-analytics/dbt/pull/2596))
- [@hochoy](https://github.com/hochoy) ([#2851](https://github.com/fishtown-analytics/dbt/pull/2851))
- [@brangisom](https://github.com/brangisom) ([#2855](https://github.com/fishtown-analytics/dbt/pull/2855))
- [@elexisvenator](https://github.com/elexisvenator) ([#2850](https://github.com/fishtown-analytics/dbt/pull/2850))
- [@franloza](https://github.com/franloza) ([#2837](https://github.com/fishtown-analytics/dbt/pull/2837))
- [@max-sixty](https://github.com/max-sixty) ([#2877](https://github.com/fishtown-analytics/dbt/pull/2877), [#2908](https://github.com/fishtown-analytics/dbt/pull/2908))
- [@rsella](https://github.com/rsella) ([#2892](https://github.com/fishtown-analytics/dbt/issues/2892))
- [@joellabes](https://github.com/joellabes) ([#2913](https://github.com/fishtown-analytics/dbt/issues/2913))
- [@plotneishestvo](https://github.com/plotneishestvo) ([#2896](https://github.com/fishtown-analytics/dbt/issues/2896))
- [@db-magnus](https://github.com/db-magnus) ([#2892](https://github.com/fishtown-analytics/dbt/issues/2892))
- [@tyang209](https://github.com/tyang209) ([#2931](https://github.com/fishtown-analytics/dbt/issues/2931))

## dbt 0.19.0b1 (October 21, 2020)

### Breaking changes

- The format for sources.json, run_results.json, manifest.json, and catalog.json has changed to include a common metadata field ([#2761](https://github.com/fishtown-analytics/dbt/issues/2761), [#2778](https://github.com/fishtown-analytics/dbt/pull/2778), [#2763](https://github.com/fishtown-analytics/dbt/issues/2763), [#2784](https://github.com/fishtown-analytics/dbt/pull/2784), [#2764](https://github.com/fishtown-analytics/dbt/issues/2764), [#2785](https://github.com/fishtown-analytics/dbt/pull/2785))
- The format for `sources.json`, `run_results.json`, `manifest.json`, and `catalog.json` has changed:
  - Each now has a common metadata dictionary ([#2761](https://github.com/fishtown-analytics/dbt/issues/2761), [#2778](https://github.com/fishtown-analytics/dbt/pull/2778)). The contents include: schema and dbt versions ([#2670](https://github.com/fishtown-analytics/dbt/issues/2670), [#2767](https://github.com/fishtown-analytics/dbt/pull/2767)); `invocation_id` ([#2763](https://github.com/fishtown-analytics/dbt/issues/2763), [#2784](https://github.com/fishtown-analytics/dbt/pull/2784)); custom environment variables prefixed with `DBT_ENV_CUSTOM_ENV_` ([#2764](https://github.com/fishtown-analytics/dbt/issues/2764), [#2785](https://github.com/fishtown-analytics/dbt/pull/2785)); CLI and RPC arguments in `run_results.json` ([#2510](https://github.com/fishtown-analytics/dbt/issues/2510), [#2813](https://github.com/fishtown-analytics/dbt/pull/2813)).
- Remove `injected_sql` from manifest nodes, use `compiled_sql` instead ([#2762](https://github.com/fishtown-analytics/dbt/issues/2762), [#2834](https://github.com/fishtown-analytics/dbt/pull/2834))

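The shared metadata block can be read back from any of these artifacts. Below is a minimal Python sketch, assuming the metadata keys are named `dbt_schema_version`, `dbt_version`, `invocation_id`, and `env`, and that the `DBT_ENV_CUSTOM_ENV_` prefix is stripped from variable names stored under `env`; check a real artifact before relying on either assumption. Both helper functions are hypothetical.

```python
import json
import os
from typing import Any, Dict, Mapping

CUSTOM_ENV_PREFIX = "DBT_ENV_CUSTOM_ENV_"


def custom_env_metadata(environ: Mapping[str, str]) -> Dict[str, str]:
    """Keep only variables with the custom prefix, stripping the prefix from each key."""
    return {
        key[len(CUSTOM_ENV_PREFIX):]: value
        for key, value in environ.items()
        if key.startswith(CUSTOM_ENV_PREFIX)
    }


def artifact_metadata(artifact: Dict[str, Any]) -> Dict[str, Any]:
    """Extract the shared metadata block from a parsed artifact such as run_results.json."""
    meta = artifact.get("metadata", {})
    return {
        "schema_version": meta.get("dbt_schema_version"),
        "dbt_version": meta.get("dbt_version"),
        "invocation_id": meta.get("invocation_id"),
        "env": meta.get("env", {}),
    }


# Usage: inspect the artifact left behind by the last invocation, if any.
path = os.path.join("target", "run_results.json")
if os.path.exists(path):
    with open(path) as fh:
        print(artifact_metadata(json.load(fh)))
```

Because `invocation_id` appears in every artifact written by the same run, it can serve as a join key when loading several artifacts into a warehouse.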
### Features

- dbt will compare configurations using the un-rendered form of the config block in dbt_project.yml ([#2713](https://github.com/fishtown-analytics/dbt/issues/2713), [#2735](https://github.com/fishtown-analytics/dbt/pull/2735))
- dbt will compare configurations using the un-rendered form of the config block in `dbt_project.yml` ([#2713](https://github.com/fishtown-analytics/dbt/issues/2713), [#2735](https://github.com/fishtown-analytics/dbt/pull/2735))
- Added state and defer arguments to the RPC client, matching the CLI ([#2678](https://github.com/fishtown-analytics/dbt/issues/2678), [#2736](https://github.com/fishtown-analytics/dbt/pull/2736))
- Added schema and dbt versions to JSON artifacts ([#2670](https://github.com/fishtown-analytics/dbt/issues/2670), [#2767](https://github.com/fishtown-analytics/dbt/pull/2767))
- Added ability to snapshot hard-deleted records (opt-in with `invalidate_hard_deletes` config option) ([#249](https://github.com/fishtown-analytics/dbt/issues/249), [#2749](https://github.com/fishtown-analytics/dbt/pull/2749))
- Added revival of previously hard-deleted records in snapshots ([#2819](https://github.com/fishtown-analytics/dbt/issues/2819), [#2821](https://github.com/fishtown-analytics/dbt/pull/2821))
- Improved error messages for YAML selectors ([#2700](https://github.com/fishtown-analytics/dbt/issues/2700), [#2781](https://github.com/fishtown-analytics/dbt/pull/2781))
- Save manifest at the same time we save the run_results at the end of a run ([#2765](https://github.com/fishtown-analytics/dbt/issues/2765), [#2799](https://github.com/fishtown-analytics/dbt/pull/2799))
- Added dbt_invocation_id for each BigQuery job to enable performance analysis ([#2808](https://github.com/fishtown-analytics/dbt/issues/2808), [#2809](https://github.com/fishtown-analytics/dbt/pull/2809))
- Save cli and rpc arguments in run_results.json ([#2510](https://github.com/fishtown-analytics/dbt/issues/2510), [#2813](https://github.com/fishtown-analytics/dbt/pull/2813))
- Added `dbt_invocation_id` for each BigQuery job to enable performance analysis ([#2808](https://github.com/fishtown-analytics/dbt/issues/2808), [#2809](https://github.com/fishtown-analytics/dbt/pull/2809))
- Added support for BigQuery connections using refresh tokens ([#2344](https://github.com/fishtown-analytics/dbt/issues/2344), [#2805](https://github.com/fishtown-analytics/dbt/pull/2805))
- Remove injected_sql from manifest nodes ([#2762](https://github.com/fishtown-analytics/dbt/issues/2762), [#2834](https://github.com/fishtown-analytics/dbt/pull/2834))

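The two hard-delete entries can be illustrated with an in-memory Python sketch, assuming snapshot rows are dictionaries whose open (current) version has `dbt_valid_to` set to `None`. This is not dbt's snapshot SQL; `invalidate_hard_deletes` (named after the config option) and `revived_keys` are hypothetical helpers.

```python
from typing import Any, Dict, List, Set

Row = Dict[str, Any]


def invalidate_hard_deletes(
    snapshot_rows: List[Row], source_keys: Set[str], now: str, unique_key: str = "id"
) -> List[Row]:
    """Close current snapshot rows whose key has disappeared from the source.

    Closing a row records when the record vanished instead of leaving it
    open forever.
    """
    result = []
    for row in snapshot_rows:
        if row["dbt_valid_to"] is None and row[unique_key] not in source_keys:
            row = {**row, "dbt_valid_to": now}  # copy; the input stays untouched
        result.append(row)
    return result


def revived_keys(snapshot_rows: List[Row], source_keys: Set[str], unique_key: str = "id") -> Set[str]:
    """Keys that were previously closed out but have reappeared in the source."""
    seen = {row[unique_key] for row in snapshot_rows}
    current = {row[unique_key] for row in snapshot_rows if row["dbt_valid_to"] is None}
    return (source_keys & seen) - current


rows = [{"id": "a", "dbt_valid_to": None}, {"id": "b", "dbt_valid_to": None}]
rows = invalidate_hard_deletes(rows, source_keys={"a"}, now="2020-10-21 00:00:00")
print(rows)
print(revived_keys(rows, source_keys={"a", "b"}))
```

In the revival case, a snapshot would then insert a fresh current row for each returned key, so the history shows the gap during which the record was deleted.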
### Under the hood

- Save `manifest.json` at the same time we save the `run_results.json` at the end of a run ([#2765](https://github.com/fishtown-analytics/dbt/issues/2765), [#2799](https://github.com/fishtown-analytics/dbt/pull/2799))
- Added strategy-specific validation to improve the relevance of compilation errors for the `timestamp` and `check` snapshot strategies ([#2787](https://github.com/fishtown-analytics/dbt/issues/2787), [#2791](https://github.com/fishtown-analytics/dbt/pull/2791))
- Changed rpc test timeouts to avoid locally run test failures ([#2803](https://github.com/fishtown-analytics/dbt/issues/2803), [#2804](https://github.com/fishtown-analytics/dbt/pull/2804))
- Added a debug_query on the base adapter that will allow plugin authors to create custom debug queries ([#2751](https://github.com/fishtown-analytics/dbt/issues/2751), [#2871](https://github.com/fishtown-analytics/dbt/pull/2817))
- Added a `debug_query` on the base adapter that will allow plugin authors to create custom debug queries ([#2751](https://github.com/fishtown-analytics/dbt/issues/2751), [#2871](https://github.com/fishtown-analytics/dbt/pull/2817))

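The strategy-specific validation entry can be sketched as a Python function that checks the keys each snapshot strategy needs: `updated_at` for `timestamp`, and `check_cols` (either `'all'` or a list of columns) for `check`. The function name and error messages are hypothetical, not dbt's actual compilation errors.

```python
from typing import Any, Dict, List


def validate_snapshot_config(config: Dict[str, Any]) -> List[str]:
    """Return strategy-specific configuration errors (an empty list means valid)."""
    errors: List[str] = []
    if not config.get("unique_key"):
        errors.append("snapshots require a 'unique_key'")
    strategy = config.get("strategy")
    if strategy == "timestamp":
        if not config.get("updated_at"):
            errors.append("the 'timestamp' strategy requires 'updated_at'")
    elif strategy == "check":
        check_cols = config.get("check_cols")
        # 'all' is accepted as-is; otherwise a non-empty list of column names is required.
        if check_cols != "all" and not (isinstance(check_cols, list) and check_cols):
            errors.append("the 'check' strategy requires 'check_cols' to be 'all' or a non-empty list")
    else:
        errors.append(f"unknown snapshot strategy: {strategy!r}")
    return errors


print(validate_snapshot_config({"strategy": "check", "unique_key": "id"}))
```

Reporting the missing key for the chosen strategy points users at the actual misconfiguration, rather than at a SQL error raised later in the snapshot.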
### Docs

- Add select/deselect option in DAG view dropups. ([docs#98](https://github.com/fishtown-analytics/dbt-docs/issues/98), [docs#138](https://github.com/fishtown-analytics/dbt-docs/pull/138))

@@ -59,6 +202,15 @@ Contributors:

- [@Mr-Nobody99](https://github.com/Mr-Nobody99) ([docs#138](https://github.com/fishtown-analytics/dbt-docs/pull/138))
- [@jplynch77](https://github.com/jplynch77) ([docs#139](https://github.com/fishtown-analytics/dbt-docs/pull/139))

## dbt 0.18.2 (March 22, 2021)

## dbt 0.18.2rc1 (March 12, 2021)

### Under the hood

- Pin `agate<1.6.2` to avoid installation errors relating to its new dependency `PyICU` ([#3160](https://github.com/fishtown-analytics/dbt/issues/3160), [#3161](https://github.com/fishtown-analytics/dbt/pull/3161))

## dbt 0.18.1 (October 13, 2020)

## dbt 0.18.1rc1 (October 01, 2020)

@@ -160,7 +312,6 @@ Contributors:

- Add relevance criteria to site search ([docs#113](https://github.com/fishtown-analytics/dbt-docs/pull/113))
- Support new selector methods, intersection, and arbitrary parent/child depth in DAG selection syntax ([docs#118](https://github.com/fishtown-analytics/dbt-docs/pull/118))
- Revise anonymous event tracking: simpler URL fuzzing; differentiate between Cloud-hosted and non-Cloud docs ([docs#121](https://github.com/fishtown-analytics/dbt-docs/pull/121))

Contributors:

- [@bbhoss](https://github.com/bbhoss) ([#2677](https://github.com/fishtown-analytics/dbt/pull/2677))
- [@kconvey](https://github.com/kconvey) ([#2694](https://github.com/fishtown-analytics/dbt/pull/2694), [#2709](https://github.com/fishtown-analytics/dbt/pull/2709), [#2711](https://github.com/fishtown-analytics/dbt/pull/2711))

@@ -880,7 +1031,6 @@ Thanks for your contributions to dbt!

- [@bastienboutonnet](https://github.com/bastienboutonnet) ([#1591](https://github.com/fishtown-analytics/dbt/pull/1591), [#1689](https://github.com/fishtown-analytics/dbt/pull/1689))

## dbt 0.14.0 - Wilt Chamberlain (July 10, 2019)

### Overview

207

CONTRIBUTING.md

@@ -1,79 +1,86 @@

# Contributing to dbt

# Contributing to `dbt`

1. [About this document](#about-this-document)
2. [Proposing a change](#proposing-a-change)
3. [Getting the code](#getting-the-code)
4. [Setting up an environment](#setting-up-an-environment)
5. [Running dbt in development](#running-dbt-in-development)
5. [Running `dbt` in development](#running-dbt-in-development)
6. [Testing](#testing)
7. [Submitting a Pull Request](#submitting-a-pull-request)

## About this document

This document is a guide intended for folks interested in contributing to dbt. Below, we document the process by which members of the community should create issues and submit pull requests (PRs) in this repository. It is not intended as a guide for using dbt, and it assumes a certain level of familiarity with Python concepts such as virtualenvs, `pip`, python modules, filesystems, and so on. This guide assumes you are using macOS or Linux and are comfortable with the command line.

This document is a guide intended for folks interested in contributing to `dbt`. Below, we document the process by which members of the community should create issues and submit pull requests (PRs) in this repository. It is not intended as a guide for using `dbt`, and it assumes a certain level of familiarity with Python concepts such as virtualenvs, `pip`, python modules, filesystems, and so on. This guide assumes you are using macOS or Linux and are comfortable with the command line.

If you're new to python development or contributing to open-source software, we encourage you to read this document from start to finish. If you get stuck, drop us a line in the #development channel on [slack](https://community.getdbt.com).

If you're new to python development or contributing to open-source software, we encourage you to read this document from start to finish. If you get stuck, drop us a line in the `#dbt-core-development` channel on [slack](https://community.getdbt.com).

### Signing the CLA

Please note that all contributors to dbt must sign the [Contributor License Agreement](https://docs.getdbt.com/docs/contributor-license-agreements) to have their Pull Request merged into the dbt codebase. If you are unable to sign the CLA, then the dbt maintainers will unfortunately be unable to merge your Pull Request. You are, however, welcome to open issues and comment on existing ones.

Please note that all contributors to `dbt` must sign the [Contributor License Agreement](https://docs.getdbt.com/docs/contributor-license-agreements) to have their Pull Request merged into the `dbt` codebase. If you are unable to sign the CLA, then the `dbt` maintainers will unfortunately be unable to merge your Pull Request. You are, however, welcome to open issues and comment on existing ones.

## Proposing a change

dbt is Apache 2.0-licensed open source software. dbt is what it is today because community members like you have opened issues, provided feedback, and contributed to the knowledge loop for the entire community. Whether you are a seasoned open source contributor or a first-time committer, we welcome and encourage you to contribute code, documentation, ideas, or problem statements to this project.

`dbt` is Apache 2.0-licensed open source software. `dbt` is what it is today because community members like you have opened issues, provided feedback, and contributed to the knowledge loop for the entire community. Whether you are a seasoned open source contributor or a first-time committer, we welcome and encourage you to contribute code, documentation, ideas, or problem statements to this project.

### Defining the problem

If you have an idea for a new feature or if you've discovered a bug in dbt, the first step is to open an issue. Please check the list of [open issues](https://github.com/fishtown-analytics/dbt/issues) before creating a new one. If you find a relevant issue, please add a comment to the open issue instead of creating a new one. There are hundreds of open issues in this repository and it can be hard to know where to look for a relevant open issue. **The dbt maintainers are always happy to point contributors in the right direction**, so please err on the side of documenting your idea in a new issue if you are unsure where a problem statement belongs.

If you have an idea for a new feature or if you've discovered a bug in `dbt`, the first step is to open an issue. Please check the list of [open issues](https://github.com/fishtown-analytics/dbt/issues) before creating a new one. If you find a relevant issue, please add a comment to the open issue instead of creating a new one. There are hundreds of open issues in this repository and it can be hard to know where to look for a relevant open issue. **The `dbt` maintainers are always happy to point contributors in the right direction**, so please err on the side of documenting your idea in a new issue if you are unsure where a problem statement belongs.

**Note:** All community-contributed Pull Requests _must_ be associated with an open issue. If you submit a Pull Request that does not pertain to an open issue, you will be asked to create an issue describing the problem before the Pull Request can be reviewed.

> **Note:** All community-contributed Pull Requests _must_ be associated with an open issue. If you submit a Pull Request that does not pertain to an open issue, you will be asked to create an issue describing the problem before the Pull Request can be reviewed.

### Discussing the idea

After you open an issue, a dbt maintainer will follow up by commenting on your issue (usually within 1-3 days) to explore your idea further and advise on how to implement the suggested changes. In many cases, community members will chime in with their own thoughts on the problem statement. If you as the issue creator are interested in submitting a Pull Request to address the issue, you should indicate this in the body of the issue. The dbt maintainers are _always_ happy to help contributors with the implementation of fixes and features, so please also indicate in the issue if there's anything you're unsure about or could use guidance on.

After you open an issue, a `dbt` maintainer will follow up by commenting on your issue (usually within 1-3 days) to explore your idea further and advise on how to implement the suggested changes. In many cases, community members will chime in with their own thoughts on the problem statement. If you as the issue creator are interested in submitting a Pull Request to address the issue, you should indicate this in the body of the issue. The `dbt` maintainers are _always_ happy to help contributors with the implementation of fixes and features, so please also indicate in the issue if there's anything you're unsure about or could use guidance on.

### Submitting a change

If an issue is appropriately well scoped and describes a beneficial change to the dbt codebase, then anyone may submit a Pull Request to implement the functionality described in the issue. See the sections below on how to do this.

If an issue is appropriately well scoped and describes a beneficial change to the `dbt` codebase, then anyone may submit a Pull Request to implement the functionality described in the issue. See the sections below on how to do this.

The dbt maintainers will add a `good first issue` label if an issue is suitable for a first-time contributor. This label often means that the required code change is small, limited to one database adapter, or a net-new addition that does not impact existing functionality. You can see the list of currently open issues on the [Contribute](https://github.com/fishtown-analytics/dbt/contribute) page.

The `dbt` maintainers will add a `good first issue` label if an issue is suitable for a first-time contributor. This label often means that the required code change is small, limited to one database adapter, or a net-new addition that does not impact existing functionality. You can see the list of currently open issues on the [Contribute](https://github.com/fishtown-analytics/dbt/contribute) page.

Here's a good workflow:

- Comment on the open issue, expressing your interest in contributing the required code change
- Outline your planned implementation. If you want help getting started, ask!
- Follow the steps outlined below to develop locally. Once you have opened a PR, one of the dbt maintainers will work with you to review your code.
- Add a test! Tests are crucial for fixes and new features alike. We want to make sure that code works as intended, and that it avoids any bugs previously encountered. Currently, the best resource for understanding dbt's [unit](test/unit) and [integration](test/integration) tests is the tests themselves. One of the maintainers can help by pointing out relevant examples.
- Follow the steps outlined below to develop locally. Once you have opened a PR, one of the `dbt` maintainers will work with you to review your code.
- Add a test! Tests are crucial for fixes and new features alike. We want to make sure that code works as intended, and that it avoids any bugs previously encountered. Currently, the best resource for understanding `dbt`'s [unit](test/unit) and [integration](test/integration) tests is the tests themselves. One of the maintainers can help by pointing out relevant examples.

In some cases, the right resolution to an open issue might be tangential to the dbt codebase. The right path forward might be a documentation update or a change that can be made in user-space. In other cases, the issue might describe functionality that the dbt maintainers are unwilling or unable to incorporate into the dbt codebase. When it is determined that an open issue describes functionality that will not translate to a code change in the dbt repository, the issue will be tagged with the `wontfix` label (see below) and closed.
