Mirror of https://github.com/dbt-labs/dbt-core (synced 2025-12-17 19:31:34 +00:00)

Compare commits: add-except...v1.0.3 (165 commits)

| SHA1 |
|---|
| 27ed2f961b |
| f2dcb6f23c |
| 77afe63c7c |
| ca7c4c147a |
| 4145834c5b |
| aaeb94d683 |
| a2662b2f83 |
| 056db408cf |
| bec6becd18 |
| 3be057b6a4 |
| e2a6c25a6d |
| 92b3fc470d |
| 1e9fe67393 |
| d9361259f4 |
| 7990974bd8 |
| 544d3e7a3a |
| 31962beb14 |
| f6a0853901 |
| 336a3d4987 |
| 74dc5c49ae |
| 29fa687349 |
| 39d4e729c9 |
| 406bdcc89c |
| 9702aa733f |
| 44265716f9 |
| 20b27fd3b6 |
| 76c2e182ba |
| 791625ddf5 |
| 1baa05a764 |
| 1b47b53aff |
| ec1f609f3e |
| b4ea003559 |
| 23e1a9aa4f |
| 9882d08a24 |
| 79cc811a68 |
| c82572f745 |
| 42a38e4deb |
| ecf0ffe68c |
| e9f26ef494 |
| c77dc59af8 |
| a5ebe4ff59 |
| 5c01f9006c |
| c92e1ed9f2 |
| 85dee41a9f |
| a4456feff0 |
| 8d27764b0f |
| e56256d968 |
| 86cb3ba6fa |
| 4d0d2d0d6f |
| f8a3c27fb8 |
| 30f05b0213 |
| f1bebb3629 |
| e7a40345ad |
| ba94b8212c |
| 284ac9b138 |
| 7448ec5adb |
| caa6269bc7 |
| 31691c3b88 |
| 3a904a811f |
| b927a31a53 |
| d8dd75320c |
| a613556246 |
| 8d2351d541 |
| f72b603196 |
| 4eb17b57fb |
| 85a4b87267 |
| 0d320c58da |
| ed1ff2caac |
| d80646c258 |
| a9b4316346 |
| 36776b96e7 |
| 7f2d3cd24f |
| d046ae0606 |
| e8c267275e |
| a4951749a8 |
| e1a2e8d9f5 |
| f80c78e3a5 |
| c541eca592 |
| 726aba0586 |
| d300addee1 |
| d5d16f01f4 |
| 2cb26e2699 |
| b4793b4f9b |
| 045e70ccf1 |
| ba23395c8e |
| 0aacd99168 |
| e4b5d73dc4 |
| bd950f687a |
| aea23a488b |
| 22731df07b |
| c55be164e6 |
| 9d73304c1a |
| 719b2026ab |
| 22416980d1 |
| 3d28b6704c |
| 5d1b104e1f |
| 4a8a68049d |
| 4b7fd1d46a |
| 0722922c03 |
| 40321d7966 |
| 434f3d2678 |
| 6dd9c2c5ba |
| 5e6be1660e |
| 31acb95d7a |
| 683190b711 |
| ebb84c404f |
| 2ca6ce688b |
| a40550b89d |
| b2aea11cdb |
| 43b39fd1aa |
| 5cc8626e96 |
| f95e9efbc0 |
| 25c974af8c |
| b5c6f09a9e |
| bd3e623240 |
| 63343653a9 |
| d8b97c1077 |
| e0b0edaeed |
| 3cafc9e13f |
| 13f31aed90 |
| d513491046 |
| 281d2491a5 |
| 9857e1dd83 |
| 6b36b18029 |
| d8868c5197 |
| b141620125 |
| 51d8440dd4 |
| 5b2562a919 |
| 44a9da621e |
| 69aa6bf964 |
| f9ef9da110 |
| 57ae9180c2 |
| efe926d20c |
| 1081b8e720 |
| 8205921c4b |
| da6c211611 |
| 354c1e0d4d |
| 855419d698 |
| e94fd61b24 |
| 4cf9b73c3d |
| 8442fb66a5 |
| f8cefa3eff |
| d83e0fb8d8 |
| 3e9da06365 |
| bda70c988e |
| 229e897070 |
| f20e83a32b |
| dd84f9a896 |
| e6df4266f6 |
| b591e1a2b7 |
| 3dab058c73 |
| c7bc6eb812 |
| c690ecc1fd |
| 73e272f06e |
| 95d087b51b |
| 40ae6b6bc8 |
| fe20534a98 |
| dd7af477ac |
| 178f74b753 |
| a14f563ec8 |
| ff109e1806 |
| 5e46694b68 |
| 73af9a56e5 |
| d2aa920275 |
| c34f3530c8 |

@@ -1,5 +1,5 @@
 [bumpversion]
-current_version = 1.0.0b2
+current_version = 1.0.3
 parse = (?P<major>\d+)
 \.(?P<minor>\d+)
 \.(?P<patch>\d+)
@@ -35,3 +35,5 @@ first_value = 1
 [bumpversion:file:plugins/postgres/setup.py]

 [bumpversion:file:plugins/postgres/dbt/adapters/postgres/__version__.py]
+
+[bumpversion:file:docker/requirements/requirements.txt]
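The `parse` setting in this hunk is a regular expression with named groups. As a rough illustration of how such a pattern splits a version string, here is a minimal Python sketch. It uses only the three groups visible in the hunk (`major`, `minor`, `patch`); the hunk is cut off after five lines, so the real pattern may continue, and the helper name `split_version` is hypothetical.

```python
import re

# Only the three groups visible in the diff hunk; the real `parse`
# setting may continue past this point, so this is a partial pattern.
VERSION_RE = re.compile(r"(?P<major>\d+)\.(?P<minor>\d+)\.(?P<patch>\d+)")


def split_version(version: str) -> dict:
    """Return the major/minor/patch parts of a version string as ints.

    Hypothetical helper for illustration; not part of bumpversion.
    """
    match = VERSION_RE.match(version)
    if match is None:
        raise ValueError(f"not a recognizable version: {version!r}")
    return {name: int(value) for name, value in match.groupdict().items()}


print(split_version("1.0.3"))
```

Because the sketch anchors only at the start of the string, a prerelease suffix such as the `b2` in `1.0.0b2` is ignored rather than parsed.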

.github/scripts/integration-test-matrix.js (vendored, 2 changed lines)

@@ -1,6 +1,6 @@
 module.exports = ({ context }) => {
   const defaultPythonVersion = "3.8";
-  const supportedPythonVersions = ["3.6", "3.7", "3.8", "3.9"];
+  const supportedPythonVersions = ["3.7", "3.8", "3.9"];
   const supportedAdapters = ["postgres"];

   // if PR, generate matrix based on files changed and PR labels
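The script above builds a test matrix from the supported Python versions and adapters. The hunk shows only the constants, so the following Python sketch is an assumption about the simplest case (every version paired with every adapter); the function name `full_matrix` and the dictionary keys are hypothetical and not taken from the script.

```python
from itertools import product

# Values copied from the new side of the diff.
SUPPORTED_PYTHON_VERSIONS = ["3.7", "3.8", "3.9"]
SUPPORTED_ADAPTERS = ["postgres"]


def full_matrix(python_versions, adapters):
    """Pair every Python version with every adapter (hypothetical helper)."""
    return [
        {"python-version": version, "adapter": adapter}
        for version, adapter in product(python_versions, adapters)
    ]


for entry in full_matrix(SUPPORTED_PYTHON_VERSIONS, SUPPORTED_ADAPTERS):
    print(entry)
```

Dropping `"3.6"` from the version list removes one matrix entry per adapter.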

.github/workflows/main.yml (vendored, 7 changed lines)

@@ -77,7 +77,7 @@ jobs:
     strategy:
       fail-fast: false
       matrix:
-        python-version: [3.6, 3.7, 3.8] # TODO: support unit testing for python 3.9 (https://github.com/dbt-labs/dbt/issues/3689)
+        python-version: [3.7, 3.8, 3.9]

     env:
       TOXENV: "unit"
@@ -167,7 +167,7 @@ jobs:
       fail-fast: false
       matrix:
         os: [ubuntu-latest, macos-latest, windows-latest]
-        python-version: [3.6, 3.7, 3.8, 3.9]
+        python-version: [3.7, 3.8, 3.9]

     steps:
       - name: Set up Python ${{ matrix.python-version }}
@@ -198,8 +198,9 @@ jobs:
           dbt --version

       - name: Install source distributions
+        # ignore dbt-1.0.0, which intentionally raises an error when installed from source
         run: |
-          find ./dist/*.gz -maxdepth 1 -type f | xargs pip install --force-reinstall --find-links=dist/
+          find ./dist/dbt-[a-z]*.gz -maxdepth 1 -type f | xargs pip install --force-reinstall --find-links=dist/

       - name: Check source distributions
         run: |
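The new glob `dbt-[a-z]*.gz` requires a lowercase letter right after `dbt-`, which is how the bare `dbt` source distribution gets skipped. A small Python sketch shows the effect with `fnmatch`, whose bracket and `*` rules match the shell's for this pattern. The file names are assumed examples built from the version in this comparison, not a listing of the real `dist/` directory.

```python
from fnmatch import fnmatchcase

# Pattern from the workflow, without the ./dist/ directory prefix.
PATTERN = "dbt-[a-z]*.gz"

# Assumed example file names; the real dist/ contents may differ.
candidates = [
    "dbt-1.0.3.tar.gz",           # bare `dbt` package: digit follows "dbt-"
    "dbt-core-1.0.3.tar.gz",
    "dbt-postgres-1.0.3.tar.gz",
]

# Keep only names where a lowercase letter follows "dbt-".
selected = [name for name in candidates if fnmatchcase(name, PATTERN)]
print(selected)
```

The bare `dbt-1.0.3.tar.gz` is filtered out because `1` is not in `[a-z]`, while the `dbt-core` and `dbt-postgres` archives still match.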

200

.github/workflows/release.yml

vendored

Normal file


@@ -0,0 +1,200 @@

|

||||

# **what?**

|

||||

# Take the given commit, run unit tests specifically on that sha, build and

|

||||

# package it, and then release to GitHub and PyPi with that specific build

|

||||

|

||||

# **why?**

|

||||

# Ensure an automated and tested release process

|

||||

|

||||

# **when?**

|

||||

# This will only run manually with a given sha and version

|

||||

|

||||

name: Release to GitHub and PyPi

|

||||

|

||||

on:

|

||||

workflow_dispatch:

|

||||

inputs:

|

||||

sha:

|

||||

description: 'The last commit sha in the release'

|

||||

required: true

|

||||

version_number:

|

||||

description: 'The release version number (e.g. 1.0.0b1)'

|

||||

required: true

|

||||

|

||||

defaults:

|

||||

run:

|

||||

shell: bash

|

||||

|

||||

jobs:

|

||||

unit:

|

||||

name: Unit test

|

||||

|

||||

runs-on: ubuntu-latest

|

||||

|

||||

env:

|

||||

TOXENV: "unit"

|

||||

|

||||

steps:

|

||||

- name: Check out the repository

|

||||

uses: actions/checkout@v2

|

||||

with:

|

||||

persist-credentials: false

|

||||

ref: ${{ github.event.inputs.sha }}

|

||||

|

||||

- name: Set up Python

|

||||

uses: actions/setup-python@v2

|

||||

with:

|

||||

python-version: 3.8

|

||||

|

||||

- name: Install python dependencies

|

||||

run: |

|

||||

pip install --user --upgrade pip

|

||||

pip install tox

|

||||

pip --version

|

||||

tox --version

|

||||

|

||||

- name: Run tox

|

||||

run: tox

|

||||

|

||||

build:

|

||||

name: build packages

|

||||

|

||||

runs-on: ubuntu-latest

|

||||

|

||||

steps:

|

||||

- name: Check out the repository

|

||||

uses: actions/checkout@v2

|

||||

with:

|

||||

persist-credentials: false

|

||||

ref: ${{ github.event.inputs.sha }}

|

||||

|

||||

- name: Set up Python

|

||||

uses: actions/setup-python@v2

|

||||

with:

|

||||

python-version: 3.8

|

||||

|

||||

- name: Install python dependencies

|

||||

run: |

|

||||

pip install --user --upgrade pip

|

||||

pip install --upgrade setuptools wheel twine check-wheel-contents

|

||||

pip --version

|

||||

|

||||

- name: Build distributions

|

||||

run: ./scripts/build-dist.sh

|

||||

|

||||

- name: Show distributions

|

||||

run: ls -lh dist/

|

||||

|

||||

- name: Check distribution descriptions

|

||||

run: |

|

||||

twine check dist/*

|

||||

|

||||

- name: Check wheel contents

|

||||

run: |

|

||||

check-wheel-contents dist/*.whl --ignore W007,W008

|

||||

|

||||

- uses: actions/upload-artifact@v2

|

||||

with:

|

||||

name: dist

|

||||

path: |

|

||||

dist/

|

||||

!dist/dbt-${{github.event.inputs.version_number}}.tar.gz

|

||||

|

||||

test-build:

|

||||

name: verify packages

|

||||

|

||||

needs: [build, unit]

|

||||

|

||||

runs-on: ubuntu-latest

|

||||

|

||||

steps:

|

||||

- name: Set up Python

|

||||

uses: actions/setup-python@v2

|

||||

with:

|

||||

python-version: 3.8

|

||||

|

||||

- name: Install python dependencies

|

||||

run: |

|

||||

pip install --user --upgrade pip

|

||||

pip install --upgrade wheel

|

||||

pip --version

|

||||

|

||||

- uses: actions/download-artifact@v2

|

||||

with:

|

||||

name: dist

|

||||

path: dist/

|

||||

|

||||

- name: Show distributions

|

||||

run: ls -lh dist/

|

||||

|

||||

- name: Install wheel distributions

|

||||

run: |

|

||||

find ./dist/*.whl -maxdepth 1 -type f | xargs pip install --force-reinstall --find-links=dist/

|

||||

|

||||

- name: Check wheel distributions

|

||||

run: |

|

||||

dbt --version

|

||||

|

||||

- name: Install source distributions

|

||||

run: |

|

||||

find ./dist/*.gz -maxdepth 1 -type f | xargs pip install --force-reinstall --find-links=dist/

|

||||

|

||||

- name: Check source distributions

|

||||

run: |

|

||||

dbt --version

|

||||

|

||||

|

||||

github-release:

|

||||

name: GitHub Release

|

||||

|

||||

needs: test-build

|

||||

|

||||

runs-on: ubuntu-latest

|

||||

|

||||

steps:

|

||||

- uses: actions/download-artifact@v2

|

||||

with:

|

||||

name: dist

|

||||

path: '.'

|

||||

|

||||

# Need to set an output variable because env variables can't be taken as input

|

||||

# This is needed for the next step with releasing to GitHub

|

||||

- name: Find release type

|

||||

id: release_type

|

||||

env:

|

||||

IS_PRERELEASE: ${{ contains(github.event.inputs.version_number, 'rc') || contains(github.event.inputs.version_number, 'b') }}

|

||||

run: |

|

||||

echo ::set-output name=isPrerelease::$IS_PRERELEASE

|

||||

|

||||

- name: Creating GitHub Release

|

||||

uses: softprops/action-gh-release@v1

|

||||

with:

|

||||

name: dbt-core v${{github.event.inputs.version_number}}

|

||||

tag_name: v${{github.event.inputs.version_number}}

|

||||

prerelease: ${{ steps.release_type.outputs.isPrerelease }}

|

||||

target_commitish: ${{github.event.inputs.sha}}

|

||||

body: |

|

||||

[Release notes](https://github.com/dbt-labs/dbt-core/blob/main/CHANGELOG.md)

|

||||

files: |

|

||||

dbt_postgres-${{github.event.inputs.version_number}}-py3-none-any.whl

|

||||

dbt_core-${{github.event.inputs.version_number}}-py3-none-any.whl

|

||||

dbt-postgres-${{github.event.inputs.version_number}}.tar.gz

|

||||

dbt-core-${{github.event.inputs.version_number}}.tar.gz

|

||||

|

||||

pypi-release:

|

||||

name: Pypi release

|

||||

|

||||

runs-on: ubuntu-latest

|

||||

|

||||

needs: github-release

|

||||

|

||||

environment: PypiProd

|

||||

steps:

|

||||

- uses: actions/download-artifact@v2

|

||||

with:

|

||||

name: dist

|

||||

path: 'dist'

|

||||

|

||||

- name: Publish distribution to PyPI

|

||||

uses: pypa/gh-action-pypi-publish@v1.4.2

|

||||

with:

|

||||

password: ${{ secrets.PYPI_API_TOKEN }}
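The "Find release type" step in this workflow decides whether a release is a prerelease with a substring test on the version number. A minimal Python sketch of the same rule follows; the function name `is_prerelease` is hypothetical, and the lowercasing reflects the documented case-insensitivity of the GitHub Actions `contains` function.

```python
def is_prerelease(version_number: str) -> bool:
    """Mirror the workflow rule: the version contains 'rc' or 'b'.

    Hypothetical helper; the workflow itself uses a GitHub Actions
    expression, not Python.
    """
    lowered = version_number.lower()  # `contains` is not case sensitive
    return "rc" in lowered or "b" in lowered


for version in ("1.0.0b2", "1.0.2rc1", "1.0.3"):
    print(version, is_prerelease(version))
```

The check is a plain substring match, so it relies on final version numbers never containing the letter `b`.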

|

||||

71

.github/workflows/structured-logging-schema-check.yml

vendored

Normal file


@@ -0,0 +1,71 @@

|

||||

# This Action makes a dbt run to sample json structured logs

|

||||

# and checks that they conform to the currently documented schema.

|

||||

#

|

||||

# If this action fails it either means we have unintentionally deviated

|

||||

# from our documented structured logging schema, or we need to bump the

|

||||

# version of our structured logging and add new documentation to

|

||||

# communicate these changes.

|

||||

|

||||

|

||||

name: Structured Logging Schema Check

|

||||

on:

|

||||

push:

|

||||

branches:

|

||||

- "main"

|

||||

- "*.latest"

|

||||

- "releases/*"

|

||||

pull_request:

|

||||

workflow_dispatch:

|

||||

|

||||

permissions: read-all

|

||||

|

||||

jobs:

|

||||

# run the performance measurements on the current or default branch

|

||||

test-schema:

|

||||

name: Test Log Schema

|

||||

runs-on: ubuntu-latest

|

||||

env:

|

||||

# turns warnings into errors

|

||||

RUSTFLAGS: "-D warnings"

|

||||

# points tests to the log file

|

||||

LOG_DIR: "/home/runner/work/dbt-core/dbt-core/logs"

|

||||

# tells integration tests to output into json format

|

||||

DBT_LOG_FORMAT: 'json'

|

||||

steps:

|

||||

|

||||

- name: checkout dev

|

||||

uses: actions/checkout@v2

|

||||

with:

|

||||

persist-credentials: false

|

||||

|

||||

- name: Setup Python

|

||||

uses: actions/setup-python@v2.2.2

|

||||

with:

|

||||

python-version: "3.8"

|

||||

|

||||

- uses: actions-rs/toolchain@v1

|

||||

with:

|

||||

profile: minimal

|

||||

toolchain: stable

|

||||

override: true

|

||||

|

||||

- name: install dbt

|

||||

run: pip install -r dev-requirements.txt -r editable-requirements.txt

|

||||

|

||||

- name: Set up postgres

|

||||

uses: ./.github/actions/setup-postgres-linux

|

||||

|

||||

- name: ls

|

||||

run: ls

|

||||

|

||||

# integration tests generate a ton of logs in different files. the next step will find them all.

|

||||

# we actually care if these pass, because the normal test run doesn't usually include many json log outputs

|

||||

- name: Run integration tests

|

||||

run: tox -e py38-postgres -- -nauto

|

||||

|

||||

# apply our schema tests to every log event from the previous step

|

||||

# skips any output that isn't valid json

|

||||

- uses: actions-rs/cargo@v1

|

||||

with:

|

||||

command: run

|

||||

args: --manifest-path test/interop/log_parsing/Cargo.toml
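The workflow's last step applies schema tests to every log event and skips output that is not valid JSON. The real check is the Rust project under `test/interop/log_parsing`; the Python sketch below only illustrates the idea. The required key names in `REQUIRED_KEYS` and the function name `check_log_lines` are assumptions for illustration, not the documented schema.

```python
import json

# Hypothetical required keys; the documented schema may differ.
REQUIRED_KEYS = {"code", "msg", "level"}


def check_log_lines(lines):
    """Return (line_number, missing_keys) for each JSON event missing keys.

    Lines that are not valid JSON objects are skipped, as in the workflow.
    """
    problems = []
    for number, line in enumerate(lines, start=1):
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # not JSON: skip rather than fail
        if not isinstance(event, dict):
            continue  # valid JSON, but not an event object
        missing = REQUIRED_KEYS - event.keys()
        if missing:
            problems.append((number, sorted(missing)))
    return problems


sample = [
    '{"code": "A001", "msg": "Running dbt", "level": "info"}',
    "plain text that is not JSON",
    '{"msg": "event without code or level"}',
]
print(check_log_lines(sample))
```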

|

||||

193

CHANGELOG.md


@@ -1,4 +1,172 @@

|

||||

## dbt-core 1.0.0 (Release TBD)

|

||||

## dbt-core 1.0.3 (TBD)

|

||||

|

||||

### Fixes

|

||||

- Fix bug accessing target fields in deps and clean commands ([#4752](https://github.com/dbt-labs/dbt-core/issues/4752), [#4758](https://github.com/dbt-labs/dbt-core/issues/4758))

|

||||

|

||||

## dbt-core 1.0.2 (February 18, 2022)

|

||||

|

||||

### Dependencies

|

||||

- Pin `MarkupSafe==2.0.1`. Deprecation of `soft_unicode` in `MarkupSafe==2.1.0` is not supported by `Jinja2==2.11`

|

||||

|

||||

## dbt-core 1.0.2rc1 (February 4, 2022)

|

||||

|

||||

### Fixes

|

||||

- Projects created using `dbt init` now have the correct `seeds` directory created (instead of `data`) ([#4588](https://github.com/dbt-labs/dbt-core/issues/4588), [#4599](https://github.com/dbt-labs/dbt-core/pull/4589))

|

||||

- Don't require a profile for dbt deps and clean commands ([#4554](https://github.com/dbt-labs/dbt-core/issues/4554), [#4610](https://github.com/dbt-labs/dbt-core/pull/4610))

|

||||

- Select modified.body works correctly when new model added ([#4570](https://github.com/dbt-labs/dbt-core/issues/4570), [#4631](https://github.com/dbt-labs/dbt-core/pull/4631))

|

||||

- Fix bug in retry logic for bad response from hub and when there is a bad git tarball download. ([#4577](https://github.com/dbt-labs/dbt-core/issues/4577), [#4579](https://github.com/dbt-labs/dbt-core/issues/4579), [#4609](https://github.com/dbt-labs/dbt-core/pull/4609))

|

||||

- Restore previous log level (DEBUG) when a test depends on a disabled resource. Still WARN if the resource is missing ([#4594](https://github.com/dbt-labs/dbt-core/issues/4594), [#4647](https://github.com/dbt-labs/dbt-core/pull/4647))

|

||||

- User wasn't asked for permission to overwrite a profile entry when running init inside an existing project ([#4375](https://github.com/dbt-labs/dbt-core/issues/4375), [#4447](https://github.com/dbt-labs/dbt-core/pull/4447))

|

||||

- A change in secret environment variables won't trigger a full reparse ([#4650](https://github.com/dbt-labs/dbt-core/issues/4650), [#4665](https://github.com/dbt-labs/dbt-core/pull/4665))

|

||||

- Add adapter compatibility messaging ([#4438](https://github.com/dbt-labs/dbt-core/pull/4438), [#4565](https://github.com/dbt-labs/dbt-core/pull/4565))

|

||||

- Add project name validation to `dbt init` ([#4490](https://github.com/dbt-labs/dbt-core/issues/4490), [#4536](https://github.com/dbt-labs/dbt-core/pull/4536))

|

||||

|

||||

Contributors:

|

||||

- [@NiallRees](https://github.com/NiallRees) ([#4447](https://github.com/dbt-labs/dbt-core/pull/4447))

|

||||

- [@amirkdv](https://github.com/amirkdv) ([#4536](https://github.com/dbt-labs/dbt-core/pull/4536))

|

||||

- [@nkyuray](https://github.com/nkyuray) ([#4565](https://github.com/dbt-labs/dbt-core/pull/4565))

|

||||

|

||||

## dbt-core 1.0.1 (January 03, 2022)

|

||||

|

||||

|

||||

## dbt-core 1.0.1rc1 (December 20, 2021)

|

||||

|

||||

### Fixes

|

||||

- Fix wrong url in the dbt docs overview homepage ([#4442](https://github.com/dbt-labs/dbt-core/pull/4442))

|

||||

- Fix redefined status param of SQLQueryStatus to typecheck the string which passes on `._message` value of `AdapterResponse` or the `str` value sent by adapter plugin. ([#4463](https://github.com/dbt-labs/dbt-core/pull/4463#issuecomment-990174166))

|

||||

- Fix `DepsStartPackageInstall` event to use package name instead of version number. ([#4482](https://github.com/dbt-labs/dbt-core/pull/4482))

|

||||

- Reimplement log message to use adapter name instead of the object method. ([#4501](https://github.com/dbt-labs/dbt-core/pull/4501))

|

||||

- Issue better error message for incompatible schemas ([#4470](https://github.com/dbt-labs/dbt-core/pull/4442), [#4497](https://github.com/dbt-labs/dbt-core/pull/4497))

|

||||

- Remove secrets from error related to packages. ([#4507](https://github.com/dbt-labs/dbt-core/pull/4507))

|

||||

- Prevent coercion of boolean values (`True`, `False`) to numeric values (`0`, `1`) in query results ([#4511](https://github.com/dbt-labs/dbt-core/issues/4511), [#4512](https://github.com/dbt-labs/dbt-core/pull/4512))

|

||||

- Fix error with an env_var in a project hook ([#4523](https://github.com/dbt-labs/dbt-core/issues/4523), [#4524](https://github.com/dbt-labs/dbt-core/pull/4524))
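The boolean-coercion fix in the list above touches a common Python pitfall: `bool` is a subclass of `int`, so a naive numeric check treats `True` and `False` as `1` and `0`. The sketch below shows the general technique of testing for `bool` first. It is an illustration only, not the change made in the referenced pull request, and the function name `infer_type` is hypothetical.

```python
def infer_type(value):
    """Classify a query-result value (hypothetical helper).

    bool must be tested before int/float, because
    isinstance(True, int) is True in Python.
    """
    if isinstance(value, bool):
        return "boolean"
    if isinstance(value, (int, float)):
        return "number"
    return "text"


for value in (True, 1, 0.5, "1"):
    print(repr(value), infer_type(value))
```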

|

||||

|

||||

### Docs

|

||||

- Fix missing data on exposures in docs ([#4467](https://github.com/dbt-labs/dbt-core/issues/4467))

|

||||

|

||||

Contributors:

|

||||

- [@remoyson](https://github.com/remoyson) ([#4442](https://github.com/dbt-labs/dbt-core/pull/4442))

|

||||

|

||||

## dbt-core 1.0.0 (December 3, 2021)

|

||||

|

||||

### Fixes

|

||||

- Configure the CLI logger destination to use stdout instead of stderr ([#4368](https://github.com/dbt-labs/dbt-core/pull/4368))

|

||||

- Make the size of `EVENT_HISTORY` configurable, via `EVENT_BUFFER_SIZE` global config ([#4411](https://github.com/dbt-labs/dbt-core/pull/4411), [#4416](https://github.com/dbt-labs/dbt-core/pull/4416))

|

||||

- Change type of `log_format` in `profiles.yml` user config to be string, not boolean ([#4394](https://github.com/dbt-labs/dbt-core/pull/4394))

|

||||

|

||||

### Under the hood

|

||||

- Only log cache events if `LOG_CACHE_EVENTS` is enabled, and disable by default. This restores previous behavior ([#4369](https://github.com/dbt-labs/dbt-core/pull/4369))

|

||||

- Move event codes to be a top-level attribute of JSON-formatted logs, rather than nested in `data` ([#4381](https://github.com/dbt-labs/dbt-core/pull/4381))

|

||||

- Fix failing integration test on Windows ([#4380](https://github.com/dbt-labs/dbt-core/pull/4380))

|

||||

- Clean up warning messages for `clean` + `deps` ([#4366](https://github.com/dbt-labs/dbt-core/pull/4366))

|

||||

- Use RFC3339 timestamps for log messages ([#4384](https://github.com/dbt-labs/dbt-core/pull/4384))

|

||||

- Different text output for console (info) and file (debug) logs ([#4379](https://github.com/dbt-labs/dbt-core/pull/4379), [#4418](https://github.com/dbt-labs/dbt-core/pull/4418))

|

||||

- Remove unused events. More structured `ConcurrencyLine`. Replace `\n` message starts/ends with `EmptyLine` events, and exclude `EmptyLine` from JSON-formatted output ([#4388](https://github.com/dbt-labs/dbt-core/pull/4388))

|

||||

- Update `events` module README ([#4395](https://github.com/dbt-labs/dbt-core/pull/4395))

|

||||

- Rework approach to JSON serialization for events with non-standard properties ([#4396](https://github.com/dbt-labs/dbt-core/pull/4396))

|

||||

- Update legacy logger file name to `dbt.log.legacy` ([#4402](https://github.com/dbt-labs/dbt-core/pull/4402))

|

||||

- Rollover `dbt.log` at 10 MB, and keep up to 5 backups, restoring previous behavior ([#4405](https://github.com/dbt-labs/dbt-core/pull/4405))

|

||||

- Use reference keys instead of full relation objects in cache events ([#4410](https://github.com/dbt-labs/dbt-core/pull/4410))

|

||||

- Add `node_type` contextual info to more events ([#4378](https://github.com/dbt-labs/dbt-core/pull/4378))

|

||||

- Make `materialized` config optional in `node_type` ([#4417](https://github.com/dbt-labs/dbt-core/pull/4417))

|

||||

- Stringify exception in `GenericExceptionOnRun` to support JSON serialization ([#4424](https://github.com/dbt-labs/dbt-core/pull/4424))

|

||||

- Add "interop" tests for machine consumption of structured log output ([#4327](https://github.com/dbt-labs/dbt-core/pull/4327))

|

||||

- Relax version specifier for `dbt-extractor` to `~=0.4.0`, to support compiled wheels for additional architectures when available ([#4427](https://github.com/dbt-labs/dbt-core/pull/4427))
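The log rollover entry above (roll `dbt.log` over at 10 MB, keep up to 5 backups) describes a standard rotating-file policy. As a sketch under assumptions, this is how that policy looks with Python's standard `logging.handlers.RotatingFileHandler`; whether dbt-core wires it up this way is not shown in the changelog, and reading "10 MB" as `10 * 1024 * 1024` bytes is an assumption.

```python
import logging
from logging.handlers import RotatingFileHandler

TEN_MB = 10 * 1024 * 1024  # assumption: "10 MB" read as 10 MiB


def make_rotating_handler(path: str) -> RotatingFileHandler:
    """Build a handler that rolls over at 10 MB and keeps 5 backups."""
    handler = RotatingFileHandler(
        path,
        maxBytes=TEN_MB,
        backupCount=5,
        encoding="utf8",
        delay=True,  # do not create the file until the first record
    )
    handler.setLevel(logging.DEBUG)
    return handler


handler = make_rotating_handler("dbt.log")
print(handler.maxBytes, handler.backupCount)
handler.close()
```

With `backupCount=5`, the handler keeps `dbt.log.1` through `dbt.log.5` and discards older files on each rollover.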

|

||||

|

||||

## dbt-core 1.0.0rc3 (November 30, 2021)

|

||||

|

||||

### Fixes

|

||||

- Support partial parsing of env_vars in metrics ([#4253](https://github.com/dbt-labs/dbt-core/issues/4293), [#4322](https://github.com/dbt-labs/dbt-core/pull/4322))

|

||||

- Fix typo in `UnparsedSourceDefinition.__post_serialize__` ([#3545](https://github.com/dbt-labs/dbt-core/issues/3545), [#4349](https://github.com/dbt-labs/dbt-core/pull/4349))

|

||||

|

||||

### Under the hood

|

||||

- Change some CompilationExceptions to ParsingExceptions ([#4254](https://github.com/dbt-labs/dbt-core/issues/4254), [#4328](https://github.com/dbt-labs/dbt-core/pull/4328))

|

||||

- Reorder logic for static parser sampling to speed up model parsing ([#4332](https://github.com/dbt-labs/dbt-core/pull/4332))

|

||||

- Use more augmented assignment statements ([#4315](https://github.com/dbt-labs/dbt-core/issues/4315), [#4331](https://github.com/dbt-labs/dbt-core/pull/4331))

|

||||

- Adjust logic when finding approximate matches for models and tests ([#3835](https://github.com/dbt-labs/dbt-core/issues/3835), [#4076](https://github.com/dbt-labs/dbt-core/pull/4076))

|

||||

- Restore small previous behaviors for logging: JSON formatting for first few events; `WARN`-level stdout for `list` task; include tracking events in `dbt.log` ([#4341](https://github.com/dbt-labs/dbt-core/pull/4341))

|

||||

|

||||

Contributors:

|

||||

- [@sarah-weatherbee](https://github.com/sarah-weatherbee) ([#4331](https://github.com/dbt-labs/dbt-core/pull/4331))

|

||||

- [@emilieschario](https://github.com/emilieschario) ([#4076](https://github.com/dbt-labs/dbt-core/pull/4076))

|

||||

- [@sneznaj](https://github.com/sneznaj) ([#4349](https://github.com/dbt-labs/dbt-core/pull/4349))

|

||||

|

||||

## dbt-core 1.0.0rc2 (November 22, 2021)

|

||||

|

||||

### Breaking changes

|

||||

- Restrict secret env vars (prefixed `DBT_ENV_SECRET_`) to `profiles.yml` + `packages.yml` _only_. Raise an exception if a secret env var is used elsewhere ([#4310](https://github.com/dbt-labs/dbt-core/issues/4310), [#4311](https://github.com/dbt-labs/dbt-core/pull/4311))

|

||||

- Reorder arguments to `config.get()` so that `default` is second ([#4273](https://github.com/dbt-labs/dbt-core/issues/4273), [#4297](https://github.com/dbt-labs/dbt-core/pull/4297))
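The first breaking change above restricts secret environment variables (prefixed `DBT_ENV_SECRET_`) to `profiles.yml` and `packages.yml`, and raises an exception elsewhere. The following Python sketch shows that rule in isolation. Only the prefix and the two file names come from the changelog; the function `env_var`, its parameters, and the exception class are hypothetical and do not reflect dbt-core's internals.

```python
import os

SECRET_PREFIX = "DBT_ENV_SECRET_"                 # from the changelog
ALLOWED_FILES = {"profiles.yml", "packages.yml"}  # from the changelog


class SecretEnvVarError(Exception):
    """Hypothetical exception for a secret used in a disallowed file."""


def env_var(name, source_file, environ=None):
    """Look up an env var, refusing secrets outside the allowed files."""
    environ = os.environ if environ is None else environ
    if name.startswith(SECRET_PREFIX) and source_file not in ALLOWED_FILES:
        raise SecretEnvVarError(
            f"{name} may only be used in profiles.yml or packages.yml, "
            f"not in {source_file}"
        )
    return environ[name]


fake_env = {"DBT_ENV_SECRET_TOKEN": "s3cret", "DBT_TARGET": "dev"}
print(env_var("DBT_TARGET", "dbt_project.yml", fake_env))
print(env_var("DBT_ENV_SECRET_TOKEN", "packages.yml", fake_env))
```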

|

||||

|

||||

### Features

|

||||

- Avoid error when missing column in YAML description ([#4151](https://github.com/dbt-labs/dbt-core/issues/4151), [#4285](https://github.com/dbt-labs/dbt-core/pull/4285))

|

||||

- Allow `--defer` flag to `dbt snapshot` ([#4110](https://github.com/dbt-labs/dbt-core/issues/4110), [#4296](https://github.com/dbt-labs/dbt-core/pull/4296))

|

||||

- Install prerelease packages when `version` explicitly references a prerelease version, regardless of `install-prerelease` status ([#4243](https://github.com/dbt-labs/dbt-core/issues/4243), [#4295](https://github.com/dbt-labs/dbt-core/pull/4295))

|

||||

- Add data attributes to json log messages ([#4301](https://github.com/dbt-labs/dbt-core/pull/4301))

|

||||

- Add event codes to all log events ([#4319](https://github.com/dbt-labs/dbt-core/pull/4319))

|

||||

|

||||

### Fixes

|

||||

- Fix serialization error with missing quotes in metrics model ref ([#4252](https://github.com/dbt-labs/dbt-core/issues/4252), [#4287](https://github.com/dbt-labs/dbt-core/pull/4289))

|

||||

- Correct definition of 'created_at' in ParsedMetric nodes ([#4298](https://github.com/dbt-labs/dbt-core/issues/4298), [#4299](https://github.com/dbt-labs/dbt-core/pull/4299))

|

||||

|

||||

- Allow specifying default in Jinja config.get with default keyword ([#4273](https://github.com/dbt-labs/dbt-core/issues/4273), [#4297](https://github.com/dbt-labs/dbt-core/pull/4297))

|

||||

|

||||

### Under the hood

|

||||

- Add --indirect-selection parameter to profiles.yml and builtin DBT_ env vars; stringified parameter to enable multi-modal use ([#3997](https://github.com/dbt-labs/dbt-core/issues/3997), [#4270](https://github.com/dbt-labs/dbt-core/pull/4270))

|

||||

- Fix filesystem searcher test failure on Python 3.9 ([#3689](https://github.com/dbt-labs/dbt-core/issues/3689), [#4271](https://github.com/dbt-labs/dbt-core/pull/4271))

|

||||

- Clean up deprecation warnings shown for `dbt_project.yml` config renames ([#4276](https://github.com/dbt-labs/dbt-core/issues/4276), [#4291](https://github.com/dbt-labs/dbt-core/pull/4291))

|

||||

- Fix metrics count in compiled project stats ([#4290](https://github.com/dbt-labs/dbt-core/issues/4290), [#4292](https://github.com/dbt-labs/dbt-core/pull/4292))

|

||||

- First pass at supporting more dbt tasks via python lib ([#4200](https://github.com/dbt-labs/dbt-core/pull/4200))

|

||||

|

||||

Contributors:

|

||||

- [@kadero](https://github.com/kadero) ([#4285](https://github.com/dbt-labs/dbt-core/pull/4285), [#4296](https://github.com/dbt-labs/dbt-core/pull/4296))

|

||||

- [@joellabes](https://github.com/joellabes) ([#4295](https://github.com/dbt-labs/dbt-core/pull/4295))

|

||||

|

||||

## dbt-core 1.0.0rc1 (November 10, 2021)

|

||||

|

||||

### Breaking changes

|

||||

- Replace `greedy` flag/property for test selection with `indirect_selection: eager/cautious` flag/property. Set to `eager` by default. **Note:** This reverts test selection to its pre-v0.20 behavior by default. `dbt test -s my_model` _will_ select multi-parent tests, such as `relationships`, that depend on unselected resources. To achieve the behavior change in v0.20 + v0.21, set `--indirect-selection=cautious` on the CLI or `indirect_selection: cautious` in yaml selectors. ([#4082](https://github.com/dbt-labs/dbt-core/issues/4082), [#4104](https://github.com/dbt-labs/dbt-core/pull/4104))

|

||||

- In v1.0.0, **`pip install dbt` will raise an explicit error.** Instead, please use `pip install dbt-<adapter>` (to use dbt with that database adapter), or `pip install dbt-core` (for core functionality). For parity with the previous behavior of `pip install dbt`, you can use: `pip install dbt-core dbt-postgres dbt-redshift dbt-snowflake dbt-bigquery` ([#4100](https://github.com/dbt-labs/dbt-core/issues/4100), [#4133](https://github.com/dbt-labs/dbt-core/pull/4133))

|

||||

- Reorganize the `global_project` (macros) into smaller files with clearer names. Remove unused global macros: `column_list`, `column_list_for_create_table`, `incremental_upsert` ([#4154](https://github.com/dbt-labs/dbt-core/pull/4154))

|

||||

- Introduce structured event interface, and begin conversion of all legacy logging ([#3359](https://github.com/dbt-labs/dbt-core/issues/3359), [#4055](https://github.com/dbt-labs/dbt-core/pull/4055))

|

||||

- **This is a breaking change for adapter plugins, requiring a very simple migration.** See [`events` module README](core/dbt/events/README.md#adapter-maintainers) for details.

|

||||

- If you maintain another kind of dbt-core plugin that makes heavy use of legacy logging, and you need time to cut over to the new event interface, you can re-enable the legacy logger via an environment variable shim, `DBT_ENABLE_LEGACY_LOGGER=True`. Be advised that we will remove this capability in a future version of dbt-core.
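The `indirect_selection` change above is easiest to see on a test with more than one parent. The sketch below assumes that `eager` selects a test when any of its parents is selected and that `cautious` selects it only when all parents are selected, which matches the changelog's description of multi-parent tests such as `relationships`. The function and the node names are hypothetical; dbt-core's real selector is more involved.

```python
def select_tests(selected_nodes, test_parents, mode="eager"):
    """Return the tests picked up indirectly from the selected nodes.

    test_parents maps a test name to the nodes it depends on.
    Hypothetical helper for illustration only.
    """
    if mode not in ("eager", "cautious"):
        raise ValueError(f"unknown indirect selection mode: {mode!r}")
    combine = any if mode == "eager" else all
    return sorted(
        test
        for test, parents in test_parents.items()
        if combine(parent in selected_nodes for parent in parents)
    )


# Hypothetical project: one single-parent test, one two-parent test.
tests = {
    "not_null_my_model_id": ["my_model"],
    "relationships_my_model_other": ["my_model", "other_model"],
}
print(select_tests({"my_model"}, tests, mode="eager"))
print(select_tests({"my_model"}, tests, mode="cautious"))
```

With only `my_model` selected, `eager` returns both tests, while `cautious` drops the `relationships` test because `other_model` is unselected.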

|

||||

|

||||

### Features

|

||||

- Allow nullable `error_after` in source freshness ([#3874](https://github.com/dbt-labs/dbt-core/issues/3874), [#3955](https://github.com/dbt-labs/dbt-core/pull/3955))

|

||||

- Add `metrics` nodes ([#4071](https://github.com/dbt-labs/dbt-core/issues/4071), [#4235](https://github.com/dbt-labs/dbt-core/pull/4235))

|

||||

- Add support for `dbt init <project_name>`, and support for `skip_profile_setup` argument (`dbt init -s`) ([#4156](https://github.com/dbt-labs/dbt-core/issues/4156), [#4249](https://github.com/dbt-labs/dbt-core/pull/4249))

|

||||

|

||||

### Fixes

|

||||

- Changes unit tests using `assertRaisesRegexp` to `assertRaisesRegex` ([#4136](https://github.com/dbt-labs/dbt-core/issues/4132), [#4136](https://github.com/dbt-labs/dbt-core/pull/4136))

|

||||

- Allow retries when the answer from a `dbt deps` is `None` ([#4178](https://github.com/dbt-labs/dbt-core/issues/4178), [#4225](https://github.com/dbt-labs/dbt-core/pull/4225))

|

||||

|

||||

### Docs

|

||||

|

||||

- Fix non-alphabetical sort of Source Tables in source overview page ([docs#81](https://github.com/dbt-labs/dbt-docs/issues/81), [docs#218](https://github.com/dbt-labs/dbt-docs/pull/218))

|

||||

- Add title tag to node elements in tree ([docs#202](https://github.com/dbt-labs/dbt-docs/issues/202), [docs#203](https://github.com/dbt-labs/dbt-docs/pull/203))

|

||||

- Account for test rename: `schema` → `generic`, `data` → `singular`. Use `test_metadata` instead of `schema`/`data` tags to differentiate ([docs#216](https://github.com/dbt-labs/dbt-docs/issues/216), [docs#222](https://github.com/dbt-labs/dbt-docs/pull/222))

|

||||

- Add `metrics` ([core#4235](https://github.com/dbt-labs/dbt-core/issues/4235), [docs#223](https://github.com/dbt-labs/dbt-docs/pull/223))

|

||||

|

||||

### Under the hood

|

||||

- Bump artifact schema versions for 1.0.0: manifest v4, run results v4, sources v3. Notable changes: added `metrics` nodes; schema test + data test nodes are renamed to generic test + singular test nodes; freshness threshold default values ([#4191](https://github.com/dbt-labs/dbt-core/pull/4191))

|

||||

- Speed up node selection by skipping `incorporate_indirect_nodes` if not needed ([#4213](https://github.com/dbt-labs/dbt-core/issues/4213), [#4214](https://github.com/dbt-labs/dbt-core/issues/4214))

|

||||

- When `on_schema_change` is set, pass common columns as `dest_columns` in incremental merge macros ([#4144](https://github.com/dbt-labs/dbt-core/issues/4144), [#4170](https://github.com/dbt-labs/dbt-core/pull/4170))

|

||||

- Clear adapters before registering in `lib` module config generation ([#4218](https://github.com/dbt-labs/dbt-core/pull/4218))

|

||||

- Remove official support for Python 3.6, which is reaching end of life on December 23, 2021 ([#4134](https://github.com/dbt-labs/dbt-core/issues/4134), [#4223](https://github.com/dbt-labs/dbt-core/pull/4223))

|

||||

|

||||

Contributors:

|

||||

- [@kadero](https://github.com/kadero) ([#3955](https://github.com/dbt-labs/dbt-core/pull/3955), [#4249](https://github.com/dbt-labs/dbt-core/pull/4249))

|

||||

- [@frankcash](https://github.com/frankcash) ([#4136](https://github.com/dbt-labs/dbt-core/pull/4136))

|

||||

- [@Kayrnt](https://github.com/Kayrnt) ([#4170](https://github.com/dbt-labs/dbt-core/pull/4170))

|

||||

- [@VersusFacit](https://github.com/VersusFacit) ([#4104](https://github.com/dbt-labs/dbt-core/pull/4104))

|

||||

- [@joellabes](https://github.com/joellabes) ([#4104](https://github.com/dbt-labs/dbt-core/pull/4104))

|

||||

- [@b-per](https://github.com/b-per) ([#4225](https://github.com/dbt-labs/dbt-core/pull/4225))

|

||||

- [@salmonsd](https://github.com/salmonsd) ([docs#218](https://github.com/dbt-labs/dbt-docs/pull/218))

|

||||

- [@miike](https://github.com/miike) ([docs#203](https://github.com/dbt-labs/dbt-docs/pull/203))

|

||||

|

||||

|

||||

## dbt-core 1.0.0b2 (October 25, 2021)

|

||||

|

||||

@@ -15,12 +183,16 @@

|

||||

- `dbt init` is now interactive, generating profiles.yml when run inside existing project ([#3625](https://github.com/dbt-labs/dbt/pull/3625))

|

||||

|

||||

### Under the hood

|

||||

|

||||

- Fix intermittent errors in partial parsing tests ([#4060](https://github.com/dbt-labs/dbt-core/issues/4060), [#4068](https://github.com/dbt-labs/dbt-core/pull/4068))

|

||||

- Make finding disabled nodes more consistent ([#4069](https://github.com/dbt-labs/dbt-core/issues/4069), [#4073](https://github.com/dbt-labs/dbt-core/pull/4073))

|

||||

- Remove connection from `render_with_context` during parsing, thereby removing misleading log message ([#3137](https://github.com/dbt-labs/dbt-core/issues/3137), [#4062](https://github.com/dbt-labs/dbt-core/pull/4062))

|

||||

- Wait for postgres docker container to be ready in `setup_db.sh`. ([#3876](https://github.com/dbt-labs/dbt-core/issues/3876), [#3908](https://github.com/dbt-labs/dbt-core/pull/3908))

|

||||

- Prefer macros defined in the project over the ones in a package by default ([#4106](https://github.com/dbt-labs/dbt-core/issues/4106), [#4114](https://github.com/dbt-labs/dbt-core/pull/4114))

|

||||

- Dependency updates ([#4079](https://github.com/dbt-labs/dbt-core/pull/4079), [#3532](https://github.com/dbt-labs/dbt-core/pull/3532))

|

||||

- Schedule partial parsing for SQL files with env_var changes ([#3885](https://github.com/dbt-labs/dbt-core/issues/3885), [#4101](https://github.com/dbt-labs/dbt-core/pull/4101))

|

||||

- Schedule partial parsing for schema files with env_var changes ([#3885](https://github.com/dbt-labs/dbt-core/issues/3885), [#4162](https://github.com/dbt-labs/dbt-core/pull/4162))

|

||||

- Skip partial parsing when env_vars change in dbt_project or profile ([#3885](https://github.com/dbt-labs/dbt-core/issues/3885), [#4212](https://github.com/dbt-labs/dbt-core/pull/4212))

|

||||

|

||||

Contributors:

|

||||

- [@sungchun12](https://github.com/sungchun12) ([#4017](https://github.com/dbt-labs/dbt/pull/4017))

|

||||

@@ -54,7 +226,6 @@ Contributors:

|

||||

- Fixed bug with `error_if` test option ([#4070](https://github.com/dbt-labs/dbt-core/pull/4070))

|

||||

|

||||

### Under the hood

|

||||

|

||||

- Enact deprecation for `materialization-return` and replace deprecation warning with an exception. ([#3896](https://github.com/dbt-labs/dbt-core/issues/3896))

|

||||

- Build catalog for only relational, non-ephemeral nodes in the graph ([#3920](https://github.com/dbt-labs/dbt-core/issues/3920))

|

||||

- Enact deprecation to remove the `release` arg from the `execute_macro` method. ([#3900](https://github.com/dbt-labs/dbt-core/issues/3900))

|

||||

@@ -67,6 +238,7 @@ Contributors:

|

||||

- Update the default project paths to be `analysis-paths = ['analyses']` and `test-paths = ['tests']`. Also have starter project set `analysis-paths: ['analyses']` from now on. ([#2659](https://github.com/dbt-labs/dbt-core/issues/2659))

|

||||

- Define the data type of `sources` as an array of arrays of string in the manifest artifacts. ([#3966](https://github.com/dbt-labs/dbt-core/issues/3966), [#3967](https://github.com/dbt-labs/dbt-core/pull/3967))

|

||||

- Marked `source-paths` and `data-paths` as deprecated keys in `dbt_project.yml` in favor of `model-paths` and `seed-paths` respectively. ([#1607](https://github.com/dbt-labs/dbt-core/issues/1607))

|

||||

- Surface git errors to `stdout` when cloning dbt packages from GitHub. ([#3167](https://github.com/dbt-labs/dbt-core/issues/3167))

|

||||

|

||||

Contributors:

|

||||

|

||||

@@ -75,14 +247,25 @@ Contributors:

|

||||

- [@samlader](https://github.com/samlader) ([#3993](https://github.com/dbt-labs/dbt-core/pull/3993))

|

||||

- [@yu-iskw](https://github.com/yu-iskw) ([#3967](https://github.com/dbt-labs/dbt-core/pull/3967))

|

||||

- [@laxjesse](https://github.com/laxjesse) ([#4019](https://github.com/dbt-labs/dbt-core/pull/4019))

|

||||

- [@gitznik](https://github.com/Gitznik) ([#4124](https://github.com/dbt-labs/dbt-core/pull/4124))

|

||||

|

||||

## dbt 0.21.1 (Release TBD)

|

||||

## dbt 0.21.1 (November 29, 2021)

|

||||

|

||||

### Fixes

|

||||

- Add `get_where_subquery` to test macro namespace, fixing custom generic tests that rely on introspecting the `model` arg at parse time ([#4195](https://github.com/dbt-labs/dbt/issues/4195), [#4197](https://github.com/dbt-labs/dbt/pull/4197))

|

||||

|

||||

## dbt 0.21.1rc1 (November 03, 2021)

|

||||

|

||||

### Fixes

|

||||

- Performance: Use child_map to find tests for nodes in resolve_graph ([#4012](https://github.com/dbt-labs/dbt/issues/4012), [#4022](https://github.com/dbt-labs/dbt/pull/4022))

|

||||

- Switch `unique_field` from abstractproperty to optional property. Add docstring ([#4025](https://github.com/dbt-labs/dbt/issues/4025), [#4028](https://github.com/dbt-labs/dbt/pull/4028))

|

||||

- Include only relational nodes in `database_schema_set` ([#4063](https://github.com/dbt-labs/dbt-core/issues/4063), [#4077](https://github.com/dbt-labs/dbt-core/pull/4077))

|

||||

- Added support for tests on databases that lack real boolean types. ([#4084](https://github.com/dbt-labs/dbt-core/issues/4084))

|

||||

- Scrub secrets coming from `CommandError`s so they don't get exposed in logs. ([#4138](https://github.com/dbt-labs/dbt-core/pull/4138))

|

||||

- Syntax fix in `alter_relation_add_remove_columns` if only removing columns in `on_schema_change: sync_all_columns` ([#4147](https://github.com/dbt-labs/dbt-core/issues/4147))

|

||||

- Increase performance of graph subset selection ([#4135](https://github.com/dbt-labs/dbt-core/issues/4135), [#4155](https://github.com/dbt-labs/dbt-core/pull/4155))

|

||||

- Add downstream test edges for `build` task _only_. Restore previous graph construction, compilation performance, and node selection behavior (`test+`) for all other tasks ([#4135](https://github.com/dbt-labs/dbt-core/issues/4135), [#4143](https://github.com/dbt-labs/dbt-core/pull/4143))

|

||||

- Don't require a strict/proper subset when adding testing edges to specialized graph for `build` ([#4158](https://github.com/dbt-labs/dbt-core/issues/4135), [#4158](https://github.com/dbt-labs/dbt-core/pull/4160))

|

||||

|

||||

Contributors:

|

||||

- [@ljhopkins2](https://github.com/ljhopkins2) ([#4077](https://github.com/dbt-labs/dbt-core/pull/4077))

|

||||

@@ -210,7 +393,7 @@ Contributors:

|

||||

- [@jmriego](https://github.com/jmriego) ([#3526](https://github.com/dbt-labs/dbt-core/pull/3526))

|

||||

- [@danielefrigo](https://github.com/danielefrigo) ([#3547](https://github.com/dbt-labs/dbt-core/pull/3547))

|

||||

|

||||

## dbt 0.20.2 (Release TBD)

|

||||

## dbt 0.20.2 (September 07, 2021)

|

||||

|

||||

### Under the hood

|

||||

|

||||

|

||||

42

core/README.md

Normal file

@@ -0,0 +1,42 @@

|

||||

<p align="center">

|

||||

<img src="https://raw.githubusercontent.com/dbt-labs/dbt-core/fa1ea14ddfb1d5ae319d5141844910dd53ab2834/etc/dbt-core.svg" alt="dbt logo" width="750"/>

|

||||

</p>

|

||||

<p align="center">

|

||||

<a href="https://github.com/dbt-labs/dbt-core/actions/workflows/main.yml">

|

||||

<img src="https://github.com/dbt-labs/dbt-core/actions/workflows/main.yml/badge.svg?event=push" alt="Unit Tests Badge"/>

|

||||

</a>

|

||||

<a href="https://github.com/dbt-labs/dbt-core/actions/workflows/integration.yml">

|

||||

<img src="https://github.com/dbt-labs/dbt-core/actions/workflows/integration.yml/badge.svg?event=push" alt="Integration Tests Badge"/>

|

||||

</a>

|

||||

</p>

|

||||

|

||||

**[dbt](https://www.getdbt.com/)** enables data analysts and engineers to transform their data using the same practices that software engineers use to build applications.

|

||||

|

||||

|

||||

|

||||

## Understanding dbt

|

||||

|

||||

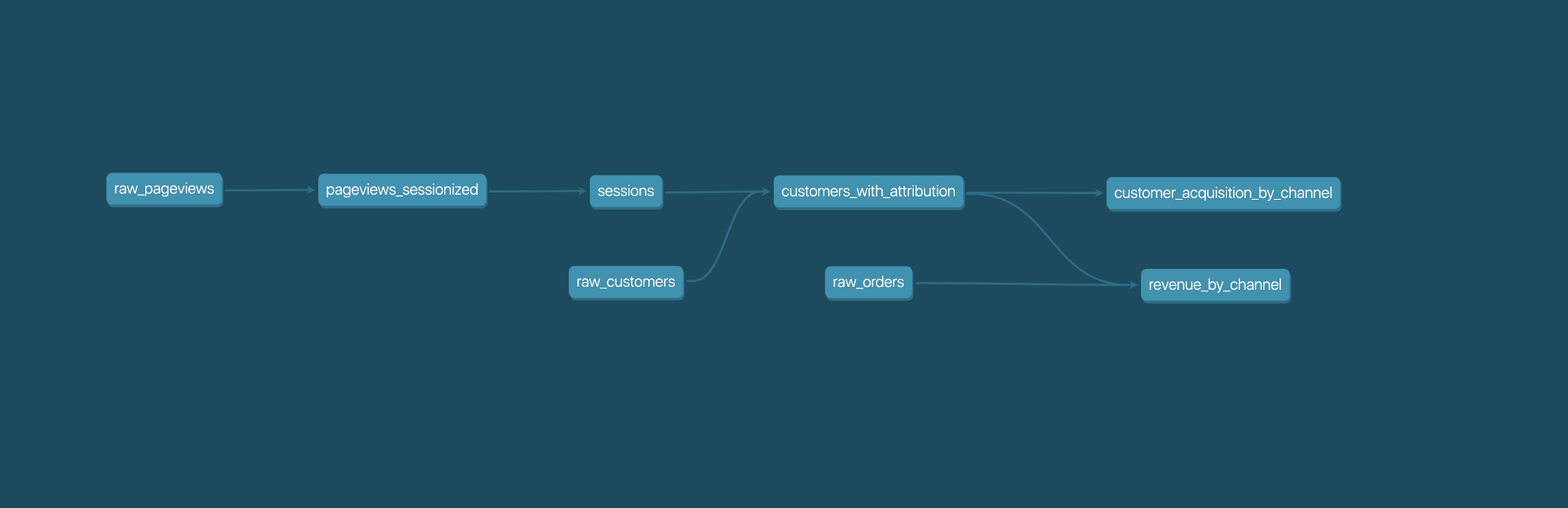

Analysts using dbt can transform their data by simply writing select statements, while dbt handles turning these statements into tables and views in a data warehouse.

|

||||

|

||||

These select statements, or "models", form a dbt project. Models frequently build on top of one another – dbt makes it easy to [manage relationships](https://docs.getdbt.com/docs/ref) between models, and [visualize these relationships](https://docs.getdbt.com/docs/documentation), as well as assure the quality of your transformations through [testing](https://docs.getdbt.com/docs/testing).

|

||||

|

||||

|

||||

|

||||

## Getting started

|

||||

|

||||

- [Install dbt](https://docs.getdbt.com/docs/installation)

|

||||

- Read the [introduction](https://docs.getdbt.com/docs/introduction/) and [viewpoint](https://docs.getdbt.com/docs/about/viewpoint/)

|

||||

|

||||

## Join the dbt Community

|

||||

|

||||

- Be part of the conversation in the [dbt Community Slack](http://community.getdbt.com/)

|

||||

- Read more on the [dbt Community Discourse](https://discourse.getdbt.com)

|

||||

|

||||

## Reporting bugs and contributing code

|

||||

|

||||

- Want to report a bug or request a feature? Let us know on [Slack](http://community.getdbt.com/), or open [an issue](https://github.com/dbt-labs/dbt-core/issues/new)

|

||||

- Want to help us build dbt? Check out the [Contributing Guide](https://github.com/dbt-labs/dbt-core/blob/HEAD/CONTRIBUTING.md)

|

||||

|

||||

## Code of Conduct

|

||||

|

||||

Everyone interacting in the dbt project's codebases, issue trackers, chat rooms, and mailing lists is expected to follow the [dbt Code of Conduct](https://community.getdbt.com/code-of-conduct).

|

||||

@@ -18,7 +18,17 @@ from dbt.contracts.graph.manifest import Manifest

|

||||

from dbt.adapters.base.query_headers import (

|

||||

MacroQueryStringSetter,

|

||||

)

|

||||

from dbt.logger import GLOBAL_LOGGER as logger

|

||||

from dbt.events.functions import fire_event

|

||||

from dbt.events.types import (

|

||||

NewConnection,

|

||||

ConnectionReused,

|

||||

ConnectionLeftOpen,

|

||||

ConnectionLeftOpen2,

|

||||

ConnectionClosed,

|

||||

ConnectionClosed2,

|

||||

Rollback,

|

||||

RollbackFailed

|

||||

)

|

||||

from dbt import flags

|

||||

|

||||

|

||||

@@ -136,14 +146,10 @@ class BaseConnectionManager(metaclass=abc.ABCMeta):

|

||||

if conn.name == conn_name and conn.state == 'open':

|

||||

return conn

|

||||

|

||||

logger.debug(

|

||||

'Acquiring new {} connection "{}".'.format(self.TYPE, conn_name))

|

||||

fire_event(NewConnection(conn_name=conn_name, conn_type=self.TYPE))

|

||||

|

||||

if conn.state == 'open':

|

||||

logger.debug(

|

||||

'Re-using an available connection from the pool (formerly {}).'

|

||||

.format(conn.name)

|

||||

)

|

||||

fire_event(ConnectionReused(conn_name=conn_name))

|

||||

else:

|

||||

conn.handle = LazyHandle(self.open)

|

||||

|

||||

@@ -190,11 +196,9 @@ class BaseConnectionManager(metaclass=abc.ABCMeta):

|

||||

with self.lock:

|

||||

for connection in self.thread_connections.values():

|

||||

if connection.state not in {'closed', 'init'}:

|

||||

logger.debug("Connection '{}' was left open."

|

||||

.format(connection.name))

|

||||

fire_event(ConnectionLeftOpen(conn_name=connection.name))

|

||||

else:

|

||||

logger.debug("Connection '{}' was properly closed."

|

||||

.format(connection.name))

|

||||

fire_event(ConnectionClosed(conn_name=connection.name))

|

||||

self.close(connection)

|

||||

|

||||

# garbage collect these connections

|

||||

@@ -220,20 +224,17 @@ class BaseConnectionManager(metaclass=abc.ABCMeta):

|

||||

try:

|

||||

connection.handle.rollback()

|

||||

except Exception:

|

||||

logger.debug(

|

||||

'Failed to rollback {}'.format(connection.name),

|

||||

exc_info=True

|

||||

)

|

||||

fire_event(RollbackFailed(conn_name=connection.name))

|

||||

|

||||

@classmethod

|

||||

def _close_handle(cls, connection: Connection) -> None:

|

||||

"""Perform the actual close operation."""

|

||||

# On windows, sometimes connection handles don't have a close() attr.

|

||||

if hasattr(connection.handle, 'close'):

|

||||

logger.debug(f'On {connection.name}: Close')

|

||||

fire_event(ConnectionClosed2(conn_name=connection.name))

|

||||

connection.handle.close()

|

||||

else:

|

||||

logger.debug(f'On {connection.name}: No close available on handle')

|

||||

fire_event(ConnectionLeftOpen2(conn_name=connection.name))

|

||||

|

||||

@classmethod

|

||||

def _rollback(cls, connection: Connection) -> None:

|

||||

@@ -244,7 +245,7 @@ class BaseConnectionManager(metaclass=abc.ABCMeta):

|

||||

f'"{connection.name}", but it does not have one open!'

|

||||

)

|

||||

|

||||

logger.debug(f'On {connection.name}: ROLLBACK')

|

||||

fire_event(Rollback(conn_name=connection.name))

|

||||

cls._rollback_handle(connection)

|

||||

|

||||

connection.transaction_open = False

|

||||

@@ -256,7 +257,7 @@ class BaseConnectionManager(metaclass=abc.ABCMeta):

|

||||

return connection

|

||||

|

||||

if connection.transaction_open and connection.handle:

|

||||

logger.debug('On {}: ROLLBACK'.format(connection.name))

|

||||

fire_event(Rollback(conn_name=connection.name))

|

||||

cls._rollback_handle(connection)

|

||||

connection.transaction_open = False

|

||||

|

||||

|

||||

@@ -29,7 +29,8 @@ from dbt.contracts.graph.compiled import (

|

||||

from dbt.contracts.graph.manifest import Manifest, MacroManifest

|

||||

from dbt.contracts.graph.parsed import ParsedSeedNode

|

||||

from dbt.exceptions import warn_or_error

|

||||

from dbt.logger import GLOBAL_LOGGER as logger

|

||||

from dbt.events.functions import fire_event

|

||||

from dbt.events.types import CacheMiss, ListRelations

|

||||

from dbt.utils import filter_null_values, executor

|

||||

|

||||

from dbt.adapters.base.connections import Connection, AdapterResponse

|

||||

@@ -38,7 +39,7 @@ from dbt.adapters.base.relation import (

|

||||

ComponentName, BaseRelation, InformationSchema, SchemaSearchMap

|

||||

)

|

||||

from dbt.adapters.base import Column as BaseColumn

|

||||

from dbt.adapters.cache import RelationsCache

|

||||

from dbt.adapters.cache import RelationsCache, _make_key

|

||||

|

||||

|

||||

SeedModel = Union[ParsedSeedNode, CompiledSeedNode]

|

||||

@@ -288,9 +289,12 @@ class BaseAdapter(metaclass=AdapterMeta):

|

||||

"""Check if the schema is cached, and by default logs if it is not."""

|

||||

|

||||

if (database, schema) not in self.cache:

|

||||

logger.debug(

|

||||

'On "{}": cache miss for schema "{}.{}", this is inefficient'

|

||||

.format(self.nice_connection_name(), database, schema)

|

||||

fire_event(

|

||||

CacheMiss(

|

||||

conn_name=self.nice_connection_name(),

|

||||

database=database,

|

||||

schema=schema

|

||||

)

|

||||

)

|

||||

return False

|

||||

else:

|

||||

@@ -672,9 +676,12 @@ class BaseAdapter(metaclass=AdapterMeta):

|

||||

relations = self.list_relations_without_caching(

|

||||

schema_relation

|

||||

)

|

||||

fire_event(ListRelations(

|

||||

database=database,

|

||||

schema=schema,

|

||||

relations=[_make_key(x) for x in relations]

|

||||

))

|

||||

|

||||

logger.debug('with database={}, schema={}, relations={}'

|

||||

.format(database, schema, relations))

|

||||

return relations

|

||||

|

||||

def _make_match_kwargs(

|

||||

@@ -968,10 +975,10 @@ class BaseAdapter(metaclass=AdapterMeta):

|

||||

'dbt could not find a macro with the name "{}" in {}'

|

||||

.format(macro_name, package_name)

|

||||

)

|

||||

# This causes a reference cycle, as generate_runtime_macro()

|

||||

# This causes a reference cycle, as generate_runtime_macro_context()

|

||||

# ends up calling get_adapter, so the import has to be here.

|

||||

from dbt.context.providers import generate_runtime_macro

|

||||

macro_context = generate_runtime_macro(

|

||||

from dbt.context.providers import generate_runtime_macro_context

|

||||

macro_context = generate_runtime_macro_context(

|

||||

macro=macro,

|

||||

config=self.config,

|

||||

manifest=manifest,

|

||||

|

||||

@@ -89,7 +89,10 @@ class BaseRelation(FakeAPIObject, Hashable):

|

||||

if not self._is_exactish_match(k, v):

|

||||

exact_match = False

|

||||

|

||||

if self.path.get_lowered_part(k) != v.lower():

|

||||

if (

|

||||

self.path.get_lowered_part(k).strip(self.quote_character) !=

|

||||

v.lower().strip(self.quote_character)

|

||||

):

|

||||

approximate_match = False

|

||||

|

||||

if approximate_match and not exact_match:

|

||||

|

||||
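The comparison introduced in this hunk can be sketched in isolation: both sides are lowercased and stripped of the adapter's quote character before being compared. The function name `approximately_equal` and the default quote character are assumptions for illustration; the real check lives inside `BaseRelation` and reads `self.quote_character`.

```python
def approximately_equal(left: str, right: str, quote_character: str = '"') -> bool:
    """Compare two identifier parts, ignoring case and surrounding quote characters."""
    return (
        left.lower().strip(quote_character)
        == right.lower().strip(quote_character)
    )


# A quoted, mixed-case identifier approximately matches its bare lowercase form.
quoted_matches_bare = approximately_equal('"My_Table"', 'my_table')
```

Note that `str.strip` removes every leading and trailing occurrence of the quote character, so doubled quotes at either end are ignored as well.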

@@ -1,23 +1,27 @@

|

||||

from collections import namedtuple

|

||||

from copy import deepcopy

|

||||

from typing import List, Iterable, Optional, Dict, Set, Tuple, Any

|

||||

import threading

|

||||

from copy import deepcopy

|

||||

from typing import Any, Dict, Iterable, List, Optional, Set, Tuple

|

||||

|

||||

from dbt.logger import CACHE_LOGGER as logger

|

||||

from dbt.utils import lowercase

|

||||

from dbt.adapters.reference_keys import _make_key, _ReferenceKey

|

||||

import dbt.exceptions

|

||||

|

||||

_ReferenceKey = namedtuple('_ReferenceKey', 'database schema identifier')

|

||||

|

||||

|

||||

def _make_key(relation) -> _ReferenceKey:

|

||||

"""Make _ReferenceKeys with lowercase values for the cache so we don't have

|

||||

to keep track of quoting

|

||||

"""

|

||||

# databases and schemas can both be None

|

||||

return _ReferenceKey(lowercase(relation.database),

|

||||

lowercase(relation.schema),

|

||||

lowercase(relation.identifier))

|

||||

from dbt.events.functions import fire_event, fire_event_if

|

||||

from dbt.events.types import (

|

||||

AddLink,

|

||||

AddRelation,

|

||||

DropCascade,

|

||||

DropMissingRelation,

|

||||

DropRelation,

|

||||

DumpAfterAddGraph,

|

||||

DumpAfterRenameSchema,

|

||||

DumpBeforeAddGraph,

|

||||

DumpBeforeRenameSchema,

|

||||

RenameSchema,

|

||||

TemporaryRelation,

|

||||

UncachedRelation,

|

||||

UpdateReference

|

||||

)

|

||||

import dbt.flags as flags

|

||||

from dbt.utils import lowercase

|

||||

|

||||

|

||||

def dot_separated(key: _ReferenceKey) -> str:

|

||||

@@ -157,12 +161,6 @@ class _CachedRelation:

|

||||

return [dot_separated(r) for r in self.referenced_by]

|

||||

|

||||

|

||||

def lazy_log(msg, func):

|

||||

if logger.disabled:

|

||||

return

|

||||

logger.debug(msg.format(func()))

|

||||

|

||||

|

||||

class RelationsCache:

|

||||

"""A cache of the relations known to dbt. Keeps track of relationships

|

||||

declared between tables and handles renames/drops as a real database would.

|

||||

@@ -278,6 +276,7 @@ class RelationsCache:

|

||||

|

||||

referenced.add_reference(dependent)

|

||||

|

||||

# TODO: Is this dead code? I can't seem to find it grepping the codebase.

|

||||

def add_link(self, referenced, dependent):

|

||||

"""Add a link between two relations to the database. If either relation

|

||||

does not exist, it will be added as an "external" relation.

|

||||

@@ -297,11 +296,7 @@ class RelationsCache:

|

||||

# if we have not cached the referenced schema at all, we must be

|

||||

# referring to a table outside our control. There's no need to make

|

||||

# a link - we will never drop the referenced relation during a run.

|

||||

logger.debug(

|

||||

'{dep!s} references {ref!s} but {ref.database}.{ref.schema} '

|

||||

'is not in the cache, skipping assumed external relation'

|

||||

.format(dep=dependent, ref=ref_key)

|

||||

)

|

||||

fire_event(UncachedRelation(dep_key=dependent, ref_key=ref_key))

|

||||

return

|

||||

if ref_key not in self.relations:

|

||||

# Insert a dummy "external" relation.

|

||||

@@ -317,9 +312,7 @@ class RelationsCache:

|

||||

type=referenced.External

|

||||

)

|

||||

self.add(dependent)

|

||||

logger.debug(

|

||||

'adding link, {!s} references {!s}'.format(dep_key, ref_key)

|

||||

)

|

||||

fire_event(AddLink(dep_key=dep_key, ref_key=ref_key))

|

||||

with self.lock:

|

||||

self._add_link(ref_key, dep_key)

|

||||

|

||||

@@ -330,14 +323,12 @@ class RelationsCache:

|

||||

:param BaseRelation relation: The underlying relation.

|

||||

"""

|

||||

cached = _CachedRelation(relation)

|

||||

logger.debug('Adding relation: {!s}'.format(cached))

|

||||

|

||||

lazy_log('before adding: {!s}', self.dump_graph)

|

||||

fire_event(AddRelation(relation=_make_key(cached)))

|

||||

fire_event_if(flags.LOG_CACHE_EVENTS, lambda: DumpBeforeAddGraph(dump=self.dump_graph()))

|

||||

|

||||

with self.lock:

|

||||

self._setdefault(cached)

|

||||

|

||||

lazy_log('after adding: {!s}', self.dump_graph)

|

||||

fire_event_if(flags.LOG_CACHE_EVENTS, lambda: DumpAfterAddGraph(dump=self.dump_graph()))

|

||||

|

||||

def _remove_refs(self, keys):

|

||||

"""Removes all references to all entries in keys. This does not

|

||||
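The hunk above swaps the `lazy_log` helper for `fire_event_if` with a `lambda`, so the graph dump is only built when cache-event logging is switched on. A minimal sketch of that deferred-construction pattern follows; `fire_if`, `DumpEvent`, `FIRED` and `expensive_dump` are hypothetical stand-ins, not dbt's actual event API.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class DumpEvent:
    """Hypothetical event carrying a payload that is expensive to build."""
    dump: str


FIRED: List[DumpEvent] = []
calls = 0


def fire_if(condition: bool, make_event: Callable[[], DumpEvent]) -> None:
    """Build and record the event only when the condition holds."""
    if condition:
        FIRED.append(make_event())


def expensive_dump() -> str:
    """Stand-in for an expensive call such as dumping the whole cache graph."""
    global calls
    calls += 1
    return "graph-dump"


fire_if(False, lambda: DumpEvent(dump=expensive_dump()))  # payload is never built
fire_if(True, lambda: DumpEvent(dump=expensive_dump()))   # payload is built once
```

Passing a callable instead of a finished event means the cost of building the payload is paid only on the enabled path.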

@@ -359,13 +350,10 @@ class RelationsCache:

|

||||

:param _CachedRelation dropped: An existing _CachedRelation to drop.

|

||||

"""

|

||||

if dropped not in self.relations:

|

||||

logger.debug('dropped a nonexistent relationship: {!s}'

|

||||

.format(dropped))

|

||||

fire_event(DropMissingRelation(relation=dropped))

|

||||

return

|

||||

consequences = self.relations[dropped].collect_consequences()

|

||||

logger.debug(

|

||||

'drop {} is cascading to {}'.format(dropped, consequences)

|

||||

)

|

||||

fire_event(DropCascade(dropped=dropped, consequences=consequences))

|

||||

self._remove_refs(consequences)

|

||||

|

||||

def drop(self, relation):

|

||||

@@ -380,7 +368,7 @@ class RelationsCache:

|

||||

:param str identifier: The identifier of the relation to drop.

|

||||

"""

|

||||

dropped = _make_key(relation)

|

||||

logger.debug('Dropping relation: {!s}'.format(dropped))

|

||||

fire_event(DropRelation(dropped=dropped))

|

||||

with self.lock:

|

||||

self._drop_cascade_relation(dropped)

|

||||

|

||||

@@ -403,9 +391,8 @@ class RelationsCache:

|

||||

# update all the relations that refer to it

|

||||

for cached in self.relations.values():

|

||||

if cached.is_referenced_by(old_key):

|

||||

logger.debug(

|

||||

'updated reference from {0} -> {2} to {1} -> {2}'

|

||||

.format(old_key, new_key, cached.key())

|

||||

fire_event(

|

||||

UpdateReference(old_key=old_key, new_key=new_key, cached_key=cached.key())

|

||||

)

|

||||

cached.rename_key(old_key, new_key)

|

||||

|

||||

@@ -435,10 +422,7 @@ class RelationsCache:

|

||||

)

|

||||

|

||||

if old_key not in self.relations:

|

||||

logger.debug(

|

||||

'old key {} not found in self.relations, assuming temporary'

|

||||

.format(old_key)

|

||||

)

|

||||

fire_event(TemporaryRelation(key=old_key))

|

||||

return False

|

||||

return True

|

||||

|

||||

@@ -456,11 +440,11 @@ class RelationsCache:

|

||||

"""

|

||||

old_key = _make_key(old)

|

||||

new_key = _make_key(new)

|

||||

logger.debug('Renaming relation {!s} to {!s}'.format(

|

||||

old_key, new_key

|

||||

))

|

||||

|

||||

lazy_log('before rename: {!s}', self.dump_graph)

|

||||

fire_event(RenameSchema(old_key=old_key, new_key=new_key))

|

||||

fire_event_if(

|

||||

flags.LOG_CACHE_EVENTS,

|

||||

lambda: DumpBeforeRenameSchema(dump=self.dump_graph())

|

||||

)

|

||||

|

||||

with self.lock:

|

||||

if self._check_rename_constraints(old_key, new_key):

|

||||

@@ -468,7 +452,10 @@ class RelationsCache:

|

||||

else:

|

||||

self._setdefault(_CachedRelation(new))

|

||||

|

||||

lazy_log('after rename: {!s}', self.dump_graph)

|

||||

fire_event_if(

|

||||

flags.LOG_CACHE_EVENTS,

|

||||

lambda: DumpAfterRenameSchema(dump=self.dump_graph())

|

||||

)

|

||||

|

||||

def get_relations(

|

||||

self, database: Optional[str], schema: Optional[str]

|

||||

|

||||

@@ -8,10 +8,9 @@ from dbt.include.global_project import (

|

||||

PACKAGE_PATH as GLOBAL_PROJECT_PATH,

|

||||

PROJECT_NAME as GLOBAL_PROJECT_NAME,

|

||||

)

|

||||

from dbt.logger import GLOBAL_LOGGER as logger

|

||||

from dbt.events.functions import fire_event

|

||||

from dbt.events.types import AdapterImportError, PluginLoadError

|

||||

from dbt.contracts.connection import Credentials, AdapterRequiredConfig

|

||||

|

||||

|

||||

from dbt.adapters.protocol import (

|

||||

AdapterProtocol,

|

||||

AdapterConfig,

|

||||

@@ -67,11 +66,12 @@ class AdapterContainer:

|

||||

# if we failed to import the target module in particular, inform

|

||||

# the user about it via a runtime error

|

||||

if exc.name == 'dbt.adapters.' + name:

|

||||

fire_event(AdapterImportError(exc=exc))

|

||||

raise RuntimeException(f'Could not find adapter type {name}!')

|

||||

logger.info(f'Error importing adapter: {exc}')

|

||||

# otherwise, the error had to have come from some underlying

|

||||

# library. Log the stack trace.

|

||||

logger.debug('', exc_info=True)

|

||||

|

||||

fire_event(PluginLoadError())

|

||||

raise

|

||||

plugin: AdapterPlugin = mod.Plugin

|

||||

plugin_type = plugin.adapter.type()

|

||||

|

||||

24

core/dbt/adapters/reference_keys.py

Normal file

@@ -0,0 +1,24 @@

|

||||

# this module exists to resolve circular imports with the events module

|

||||

|

||||

from collections import namedtuple

|

||||

from typing import Optional

|

||||

|

||||

|

||||

_ReferenceKey = namedtuple('_ReferenceKey', 'database schema identifier')

|

||||

|

||||

|

||||