Mirror of https://github.com/dbt-labs/dbt-core, synced 2025-12-21 11:21:28 +00:00

Compare commits: experiment...testing-pr (63 commits)

| Author | SHA1 | Date |
|---|---|---|
| | 42058de028 | |
| | 6a5ed4f418 | |
| | ef25698d3d | |
| | ab3f994626 | |
| | db325d0fde | |
| | 24e4b75c35 | |
| | 34174abf26 | |
| | af778312cb | |
| | 280f5614ef | |
| | 034a44e625 | |
| | 84155fdff7 | |
| | b70fb543f5 | |
| | 31c88f9f5a | |
| | 344a14416d | |
| | be47a0c5db | |
| | c7c057483d | |
| | 7f5170ae4d | |
| | 49b8693b11 | |
| | d7b0a14eb5 | |
| | 8996cb1e18 | |
| | 38f278cce0 | |
| | bb4e475044 | |
| | 4fbe36a8e9 | |
| | a1a40b562a | |
| | 3a4a1bb005 | |
| | 4833348769 | |
| | ad07d59a78 | |
| | e8aaabd1d3 | |
| | d7d7396eeb | |
| | 41538860cd | |
| | 5c9f8a0cf0 | |
| | 11c997c3e9 | |
| | 1b1184a5e1 | |
| | 4ffcc43ed9 | |
| | 4ccaac46a6 | |
| | ba88b84055 | |
| | e88f1f1edb | |
| | 13c7486f0e | |
| | 8e811ba141 | |
| | c5d86afed6 | |
| | 43a0cfbee1 | |
| | 8567d5f302 | |
| | 36d1bddc5b | |
| | bf992680af | |
| | e064298dfc | |
| | e01a10ced5 | |
| | 2aa10fb1ed | |
| | 66f442ad76 | |
| | 11f1ecebcf | |
| | e339cb27f6 | |
| | bce3232b39 | |
| | b08970ce39 | |
| | 533f88ceaf | |
| | c8f0469a44 | |
| | a1fc24e532 | |
| | d80daa48df | |
| | 92aae2803f | |
| | 77cbbbfaf2 | |
| | 6c6649f912 | |
| | 56c2518936 | |
| | e52a599be6 | |
| | 99744bd318 | |
| | 1b666d01cf | |

@@ -4,7 +4,7 @@ parse = (?P<major>\d+)
 \.(?P<minor>\d+)
 \.(?P<patch>\d+)
 ((?P<prerelease>[a-z]+)(?P<num>\d+))?
 serialize =
 {major}.{minor}.{patch}{prerelease}{num}
 {major}.{minor}.{patch}
 commit = False
@@ -12,7 +12,7 @@ tag = False
 
 [bumpversion:part:prerelease]
 first_value = a
 values =
 a
 b
 rc
@@ -41,4 +41,3 @@ first_value = 1
 [bumpversion:file:plugins/snowflake/dbt/adapters/snowflake/__version__.py]
 
 [bumpversion:file:plugins/bigquery/dbt/adapters/bigquery/__version__.py]
-
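To see what the `parse` and `serialize` settings in the hunks above describe, here is a minimal Python sketch. It is not bumpversion's own code: the function names are hypothetical, and "use the first template whose fields are all present" is a simplifying assumption about how a serialize format gets chosen.

```python
import re

# Pattern assembled from the `parse` lines shown in the hunk above.
VERSION_RE = re.compile(
    r"(?P<major>\d+)"
    r"\.(?P<minor>\d+)"
    r"\.(?P<patch>\d+)"
    r"((?P<prerelease>[a-z]+)(?P<num>\d+))?"
)

# The two `serialize` templates, in the order they appear in the config.
SERIALIZE_FORMATS = (
    "{major}.{minor}.{patch}{prerelease}{num}",
    "{major}.{minor}.{patch}",
)

# Allowed prerelease values from [bumpversion:part:prerelease].
PRERELEASE_VALUES = ("a", "b", "rc")


def parse_version(text):
    """Return the named parts of a version string, dropping unset ones."""
    match = VERSION_RE.fullmatch(text)
    if match is None:
        raise ValueError(f"not a valid version: {text!r}")
    return {key: value for key, value in match.groupdict().items() if value is not None}


def serialize_version(parts):
    """Assumption: use the first template whose fields are all present."""
    for template in SERIALIZE_FORMATS:
        try:
            return template.format(**parts)
        except KeyError:
            continue
    raise ValueError(f"cannot serialize: {parts!r}")
```

With these definitions, `parse_version("0.20.0rc1")` yields the five named parts, and a plain release such as `0.19.1` falls through to the shorter template.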

45

.github/dependabot.yml

vendored

Normal file

@@ -0,0 +1,45 @@
+version: 2
+updates:
+  # python dependencies
+  - package-ecosystem: "pip"
+    directory: "/"
+    schedule:
+      interval: "daily"
+    rebase-strategy: "disabled"
+  - package-ecosystem: "pip"
+    directory: "/core"
+    schedule:
+      interval: "daily"
+    rebase-strategy: "disabled"
+  - package-ecosystem: "pip"
+    directory: "/plugins/bigquery"
+    schedule:
+      interval: "daily"
+    rebase-strategy: "disabled"
+  - package-ecosystem: "pip"
+    directory: "/plugins/postgres"
+    schedule:
+      interval: "daily"
+    rebase-strategy: "disabled"
+  - package-ecosystem: "pip"
+    directory: "/plugins/redshift"
+    schedule:
+      interval: "daily"
+    rebase-strategy: "disabled"
+  - package-ecosystem: "pip"
+    directory: "/plugins/snowflake"
+    schedule:
+      interval: "daily"
+    rebase-strategy: "disabled"
+
+  # docker dependencies
+  - package-ecosystem: "docker"
+    directory: "/"
+    schedule:
+      interval: "weekly"
+    rebase-strategy: "disabled"
+  - package-ecosystem: "docker"
+    directory: "/docker"
+    schedule:
+      interval: "weekly"
+    rebase-strategy: "disabled"
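The eight `updates` entries above differ only in ecosystem, directory, and interval. As an illustration of that structure, the following Python sketch rebuilds the same data from two directory lists. The helper names are hypothetical, and the result is printed as JSON only to make it easy to inspect; the repository itself stores this as hand-written YAML.

```python
import json

# Directories and intervals copied from the dependabot.yml diff above.
PIP_DIRECTORIES = [
    "/",
    "/core",
    "/plugins/bigquery",
    "/plugins/postgres",
    "/plugins/redshift",
    "/plugins/snowflake",
]
DOCKER_DIRECTORIES = ["/", "/docker"]


def update_entry(ecosystem, directory, interval):
    """Build one entry of the `updates` list."""
    return {
        "package-ecosystem": ecosystem,
        "directory": directory,
        "schedule": {"interval": interval},
        "rebase-strategy": "disabled",
    }


def build_config():
    """Assemble the full configuration as a plain dictionary."""
    updates = [update_entry("pip", d, "daily") for d in PIP_DIRECTORIES]
    updates += [update_entry("docker", d, "weekly") for d in DOCKER_DIRECTORIES]
    return {"version": 2, "updates": updates}


if __name__ == "__main__":
    print(json.dumps(build_config(), indent=2))
```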

20

.pre-commit-config.yaml

Normal file

@@ -0,0 +1,20 @@
+repos:
+  - repo: https://github.com/pre-commit/pre-commit-hooks
+    rev: v2.3.0
+    hooks:
+      - id: check-yaml
+      - id: end-of-file-fixer
+      - id: trailing-whitespace
+  - repo: https://github.com/psf/black
+    rev: 20.8b1
+    hooks:
+      - id: black
+  - repo: https://gitlab.com/PyCQA/flake8
+    rev: 3.9.0
+    hooks:
+      - id: flake8
+  - repo: https://github.com/pre-commit/mirrors-mypy
+    rev: v0.812
+    hooks:
+      - id: mypy
+        files: ^core/dbt/
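The `files: ^core/dbt/` setting restricts the mypy hook to paths under `core/dbt/`. As far as I know, pre-commit treats `files` as a Python regular expression searched against each repository-relative path, so the filter can be sketched as below. The function name and the sample paths are hypothetical.

```python
import re

# Pattern copied from the mypy hook's `files` setting above.
MYPY_FILES = re.compile(r"^core/dbt/")


def mypy_hook_applies(path):
    """Return True if a repository-relative path would be passed to the hook."""
    return MYPY_FILES.search(path) is not None


if __name__ == "__main__":
    for candidate in ("core/dbt/task/run.py", "plugins/postgres/setup.py"):
        print(candidate, mypy_hook_applies(candidate))
```

Because the pattern is anchored with `^`, a path that merely contains `core/dbt/` somewhere in the middle is not selected.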

49

ARCHITECTURE.md

Normal file

@@ -0,0 +1,49 @@

|

|||||||

|

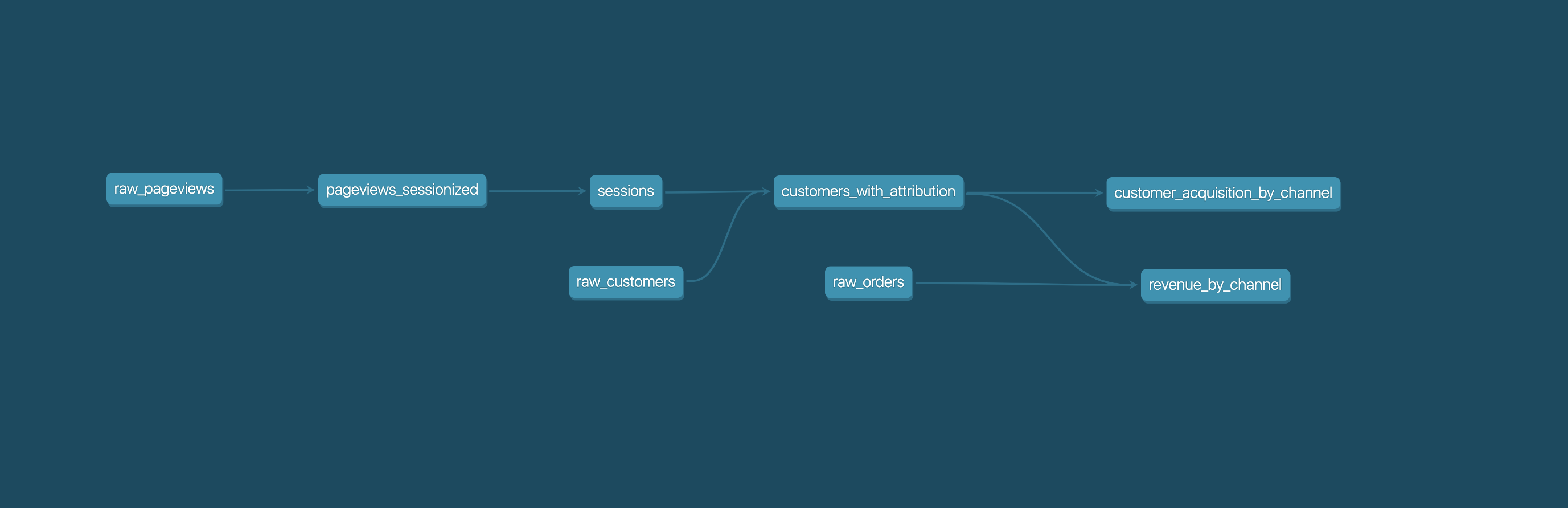

The core function of dbt is SQL compilation and execution. Users create projects of dbt resources (models, tests, seeds, snapshots, ...), defined in SQL and YAML files, and they invoke dbt to create, update, or query associated views and tables. Today, dbt makes heavy use of Jinja2 to enable the templating of SQL, and to construct a DAG (Directed Acyclic Graph) from all of the resources in a project. Users can also extend their projects by installing resources (including Jinja macros) from other projects, called "packages."

|

||||||

|

|

||||||

|

## dbt-core

|

||||||

|

|

||||||

|

Most of the python code in the repository is within the `core/dbt` directory. Currently the main subdirectories are:

|

||||||

|

- [`adapters`](core/dbt/adapters): Define base classes for behavior that is likely to differ across databases

|

||||||

|

- [`clients`](core/dbt/clients): Interface with dependencies (agate, jinja) or across operating systems

|

||||||

|

- [`config`](core/dbt/config): Reconcile user-supplied configuration from connection profiles, project files, and Jinja macros

|

||||||

|

- [`context`](core/dbt/context): Build and expose dbt-specific Jinja functionality

|

||||||

|

- [`contracts`](core/dbt/contracts): Define Python objects (dataclasses) that dbt expects to create and validate

|

||||||

|

- [`deps`](core/dbt/deps): Package installation and dependency resolution

|

||||||

|

- [`graph`](core/dbt/graph): Produce a `networkx` DAG of project resources, and selecting those resources given user-supplied criteria

|

||||||

|

- [`include`](core/dbt/include): The dbt "global project," which defines default implementations of Jinja2 macros

|

||||||

|

- [`parser`](core/dbt/parser): Read project files, validate, construct python objects

|

||||||

|

- [`rpc`](core/dbt/rpc): Provide remote procedure call server for invoking dbt, following JSON-RPC 2.0 spec

|

||||||

|

- [`task`](core/dbt/task): Set forth the actions that dbt can perform when invoked

|

||||||
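The `graph` entry above describes building a DAG of project resources and selecting from it. The sketch below illustrates that idea with the standard library's `graphlib` as a stand-in for `networkx`; it is not dbt's implementation, and the resource names and both helper functions are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical project: each key depends on the resources in its value set.
DEPENDENCIES = {
    "raw_orders": set(),
    "raw_customers": set(),
    "stg_orders": {"raw_orders"},
    "stg_customers": {"raw_customers"},
    "orders": {"stg_orders", "stg_customers"},
}


def execution_order(dependencies):
    """Return the resources in an order where dependencies come first."""
    return list(TopologicalSorter(dependencies).static_order())


def select_with_ancestors(dependencies, target):
    """Select a resource plus everything it depends on, directly or not."""
    selected, stack = set(), [target]
    while stack:
        node = stack.pop()
        if node not in selected:
            selected.add(node)
            stack.extend(dependencies.get(node, ()))
    return selected
```

Selecting `stg_orders` with its ancestors picks up `raw_orders` but leaves the customer branch alone, which is the kind of user-supplied criterion the bullet refers to.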

+
+### Invoking dbt
+
+There are two supported ways of invoking dbt: from the command line and using an RPC server.
+
+The "tasks" map to top-level dbt commands. So `dbt run` => task.run.RunTask, etc. Some are more like abstract base classes (GraphRunnableTask, for example) but all the concrete types outside of task/rpc should map to tasks. Currently one executes at a time. The tasks kick off their “Runners” and those do execute in parallel. The parallelism is managed via a thread pool, in GraphRunnableTask.
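The relationship described above (a command maps to one task, and the task fans its runners out over a thread pool) can be sketched in a few lines. The classes below are hypothetical stand-ins, not dbt's `RunTask` or `GraphRunnableTask`, and the sketch ignores dependency ordering between nodes.

```python
from concurrent.futures import ThreadPoolExecutor


class Runner:
    """Hypothetical stand-in for the unit of work run for one node."""

    def __init__(self, node):
        self.node = node

    def run(self):
        return f"ran {self.node}"


class RunTask:
    """Hypothetical task: one task at a time, its runners in parallel."""

    def __init__(self, nodes, threads=4):
        self.nodes = list(nodes)
        self.threads = threads

    def run(self):
        runners = [Runner(node) for node in self.nodes]
        with ThreadPoolExecutor(max_workers=self.threads) as pool:
            # map() returns results in input order even though work overlaps.
            return list(pool.map(lambda runner: runner.run(), runners))


# Command name -> task class, mirroring "`dbt run` => RunTask".
TASKS = {"run": RunTask}


def invoke(command, nodes):
    """Look up the task for a command and execute it."""
    return TASKS[command](nodes).run()
```

Calling `invoke("run", ["a", "b", "c"])` runs three runners on the pool and collects their results in order.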

+
+core/dbt/include/index.html
+This is the docs website code. It comes from the dbt-docs repository, and is generated when a release is packaged.
+
+## Adapters
+
+dbt uses an adapter-plugin pattern to extend support to different databases, warehouses, query engines, etc. The four core adapters that are in the main repository, contained within the [`plugins`](plugins) subdirectory, are: Postgres, Redshift, Snowflake, and BigQuery. Other warehouses use adapter plugins defined in separate repositories (e.g. [dbt-spark](https://github.com/fishtown-analytics/dbt-spark), [dbt-presto](https://github.com/fishtown-analytics/dbt-presto)).
+
+Each adapter is a mix of Python, Jinja2, and SQL. The adapter code also makes heavy use of Jinja2 to wrap modular chunks of SQL functionality, define default implementations, and allow plugins to override them.
+
+Each adapter plugin is a standalone Python package that includes:
+
+- `dbt/include/[name]`: A "sub-global" dbt project, of YAML and SQL files, that reimplements Jinja macros to use the adapter's supported SQL syntax
+- `dbt/adapters/[name]`: Python modules that inherit, and optionally reimplement, the base adapter classes defined in dbt-core
+- `setup.py`
+
+The Postgres adapter code is the most central, and many of its implementations are used as the default defined in the dbt-core global project. The greater the distance of a data technology from Postgres, the more its adapter plugin may need to reimplement.
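The inherit-and-optionally-reimplement pattern described above can be illustrated with a small Python sketch. These classes are hypothetical and far simpler than dbt's real adapter base classes; the only point is that a plugin close to the default overrides nothing, while a more distant one overrides what differs.

```python
class BaseAdapter:
    """Hypothetical base class holding the default behavior."""

    name = "base"

    def quote(self, identifier):
        # Default: double-quoted identifiers.
        return f'"{identifier}"'

    def create_view_sql(self, view_name, select_sql):
        return f"create view {self.quote(view_name)} as {select_sql}"


class PostgresAdapter(BaseAdapter):
    """Close to the defaults, so nothing is reimplemented."""

    name = "postgres"


class BigQueryAdapter(BaseAdapter):
    """Further from the defaults, so quoting is reimplemented."""

    name = "bigquery"

    def quote(self, identifier):
        return f"`{identifier}`"


# Registry keyed by adapter name (an illustrative lookup, not dbt's loader).
ADAPTERS = {cls.name: cls for cls in (PostgresAdapter, BigQueryAdapter)}


def get_adapter(name):
    return ADAPTERS[name]()
```

Both adapters share `create_view_sql`; only the quoting step differs between them.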

+
+## Testing dbt
+
+The [`test/`](test/) subdirectory includes unit and integration tests that run as continuous integration checks against open pull requests. Unit tests check mock inputs and outputs of specific Python functions. Integration tests perform end-to-end dbt invocations against real adapters (Postgres, Redshift, Snowflake, BigQuery) and assert that the results match expectations. See [the contributing guide](CONTRIBUTING.md) for a step-by-step walkthrough of setting up a local development and testing environment.
+
+## Everything else
+
+- [docker](docker/): All dbt versions are published as Docker images on DockerHub. This subfolder contains the `Dockerfile` (constant) and `requirements.txt` (one for each version).
+- [etc](etc/): Images for README
+- [scripts](scripts/): Helper scripts for testing, releasing, and producing JSON schemas. These are not included in distributions of dbt, nor are they rigorously tested; they're just handy tools for the dbt maintainers :)

21

CHANGELOG.md

@@ -1,24 +1,43 @@
 ## dbt 0.20.0 (Release TBD)
 
 ### Fixes
 
 - Fix exit code from dbt debug not returning a failure when one of the tests fail ([#3017](https://github.com/fishtown-analytics/dbt/issues/3017))
 - Auto-generated CTEs in tests and ephemeral models have lowercase names to comply with dbt coding conventions ([#3027](https://github.com/fishtown-analytics/dbt/issues/3027), [#3028](https://github.com/fishtown-analytics/dbt/issues/3028))
+- Fix incorrect error message when a selector does not match any node ([#3036](https://github.com/fishtown-analytics/dbt/issues/3036))
+- Fix variable `_dbt_max_partition` declaration and initialization for BigQuery incremental models ([#2940](https://github.com/fishtown-analytics/dbt/issues/2940), [#2976](https://github.com/fishtown-analytics/dbt/pull/2976))
+- Moving from 'master' to 'HEAD' default branch in git ([#3057](https://github.com/fishtown-analytics/dbt/issues/3057), [#3104](https://github.com/fishtown-analytics/dbt/issues/3104), [#3117](https://github.com/fishtown-analytics/dbt/issues/3117))
+- Requirement on `dataclasses` is relaxed to be between `>=0.6,<0.9` allowing dbt to cohabit with other libraries which required higher versions. ([#3150](https://github.com/fishtown-analytics/dbt/issues/3150), [#3151](https://github.com/fishtown-analytics/dbt/pull/3151))
 
 ### Features
 - Add optional configs for `require_partition_filter` and `partition_expiration_days` in BigQuery ([#1843](https://github.com/fishtown-analytics/dbt/issues/1843), [#2928](https://github.com/fishtown-analytics/dbt/pull/2928))
 - Fix for EOL SQL comments prevent entire line execution ([#2731](https://github.com/fishtown-analytics/dbt/issues/2731), [#2974](https://github.com/fishtown-analytics/dbt/pull/2974))
 
+### Under the hood
+- Add dependabot configuration for alerting maintainers about keeping dependencies up to date and secure. ([#3061](https://github.com/fishtown-analytics/dbt/issues/3061), [#3062](https://github.com/fishtown-analytics/dbt/pull/3062))
+- Update script to collect and write json schema for dbt artifacts ([#2870](https://github.com/fishtown-analytics/dbt/issues/2870), [#3065](https://github.com/fishtown-analytics/dbt/pull/3065))
+
 Contributors:
 - [@yu-iskw](https://github.com/yu-iskw) ([#2928](https://github.com/fishtown-analytics/dbt/pull/2928))
 - [@sdebruyn](https://github.com/sdebruyn) / [@lynxcare](https://github.com/lynxcare) ([#3018](https://github.com/fishtown-analytics/dbt/pull/3018))
 - [@rvacaru](https://github.com/rvacaru) ([#2974](https://github.com/fishtown-analytics/dbt/pull/2974))
 - [@NiallRees](https://github.com/NiallRees) ([#3028](https://github.com/fishtown-analytics/dbt/pull/3028))
+- [@ran-eh](https://github.com/ran-eh) ([#3036](https://github.com/fishtown-analytics/dbt/pull/3036))
+- [@pcasteran](https://github.com/pcasteran) ([#2976](https://github.com/fishtown-analytics/dbt/pull/2976))
+- [@VasiliiSurov](https://github.com/VasiliiSurov) ([#3104](https://github.com/fishtown-analytics/dbt/pull/3104))
+- [@bastienboutonnet](https://github.com/bastienboutonnet) ([#3151](https://github.com/fishtown-analytics/dbt/pull/3151))
 
 ## dbt 0.19.1 (Release TBD)
 
+### Fixes
+
+- On BigQuery, fix regressions for `insert_overwrite` incremental strategy with `int64` and `timestamp` partition columns ([#3063](https://github.com/fishtown-analytics/dbt/issues/3063), [#3095](https://github.com/fishtown-analytics/dbt/issues/3095), [#3098](https://github.com/fishtown-analytics/dbt/issues/3098))
+
 ### Under the hood
 - Bump werkzeug upper bound dependency to `<v2.0` ([#3011](https://github.com/fishtown-analytics/dbt/pull/3011))
+- Performance fixes for many different things ([#2862](https://github.com/fishtown-analytics/dbt/issues/2862), [#3034](https://github.com/fishtown-analytics/dbt/pull/3034))
+- Update code to use Mashumaro 2.0 ([#3138](https://github.com/fishtown-analytics/dbt/pull/3138))
+- Add an event to track resource counts ([#3050](https://github.com/fishtown-analytics/dbt/issues/3050), [#3156](https://github.com/fishtown-analytics/dbt/pull/3156))
+- Pin `agate<1.6.2` to avoid installation errors relating to its new dependency `PyICU` ([#3160](https://github.com/fishtown-analytics/dbt/issues/3160), [#3161](https://github.com/fishtown-analytics/dbt/pull/3161))
 
 Contributors:
 - [@Bl3f](https://github.com/Bl3f) ([#3011](https://github.com/fishtown-analytics/dbt/pull/3011))

@@ -62,6 +62,13 @@ The dbt maintainers use labels to categorize open issues. Some labels indicate t
 | [stale](https://github.com/fishtown-analytics/dbt/labels/stale) | This is an old issue which has not recently been updated. Stale issues will periodically be closed by dbt maintainers, but they can be re-opened if the discussion is restarted. |
 | [wontfix](https://github.com/fishtown-analytics/dbt/labels/wontfix) | This issue does not require a code change in the dbt repository, or the maintainers are unwilling/unable to merge a Pull Request which implements the behavior described in the issue. |
 
+#### Branching Strategy
+
+dbt has three types of branches:
+
+- **Trunks** are where active development of the next release takes place. At the time of writing, there is one trunk, named `develop`, and it will be the default branch of the repository.
+- **Release Branches** track a specific, not yet complete release of dbt. Each minor version release has a corresponding release branch. For example, the `0.11.x` series of releases has a branch called `0.11.latest`. This allows us to release new patch versions under `0.11` without necessarily needing to pull them into the latest version of dbt.
+- **Feature Branches** track individual features and fixes. On completion they should be merged into the trunk branch or a specific release branch.
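The release-branch naming convention in the list above (`0.11.x` releases live on `0.11.latest`) amounts to a small mapping from a version to a branch name. A minimal sketch, assuming versions always start with `major.minor.`; the function name is hypothetical:

```python
import re


def release_branch(version):
    """Map a version such as '0.11.1' to its release branch, '0.11.latest'."""
    match = re.match(r"(\d+)\.(\d+)\.", version)
    if match is None:
        raise ValueError(f"expected a major.minor.patch version, got {version!r}")
    major, minor = match.groups()
    return f"{major}.{minor}.latest"
```

Every patch and prerelease of the same minor version maps to the same branch, which is what lets patch releases ship without touching the trunk.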

 
 ## Getting the code
 
@@ -81,10 +88,9 @@ If you are not a member of the `fishtown-analytics` GitHub organization, you can
 
 ### Core contributors
 
 If you are a member of the `fishtown-analytics` GitHub organization, you will have push access to the dbt repo. Rather than
 forking dbt to make your changes, just clone the repository, check out a new branch, and push directly to that branch.
 
-
 ## Setting up an environment
 
 There are some tools that will be helpful to you in developing locally. While this is the list relevant for dbt development, many of these tools are used commonly across open-source python projects.
@@ -115,7 +121,7 @@ This will create and activate a new Python virtual environment.
 
 #### docker and docker-compose
 
-Docker and docker-compose are both used in testing. For macOS, the easiest thing to do is to [download docker for mac](https://store.docker.com/editions/community/docker-ce-desktop-mac). You'll need to make an account. On Linux, you can use one of the packages [here](https://docs.docker.com/install/#server). We recommend installing from docker.com instead of from your package manager. On Linux you also have to install docker-compose separately, following [these instructions](https://docs.docker.com/compose/install/#install-compose).
+Docker and docker-compose are both used in testing. Specific instructions for your OS can be found [here](https://docs.docker.com/get-docker/).
 
 
 #### postgres (optional)
@@ -133,7 +139,7 @@ brew install postgresql
 First make sure that you set up your `virtualenv` as described in section _Setting up an environment_. Next, install dbt (and its dependencies) with:
 
 ```
-pip install -r editable_requirements.txt
+pip install -r requirements-editable.txt
 ```
 
 When dbt is installed from source in this way, any changes you make to the dbt source code will be reflected immediately in your next `dbt` run.
@@ -159,7 +165,6 @@ dbt uses test credentials specified in a `test.env` file in the root of the repo
 
 ```
 cp test.env.sample test.env
-atom test.env # supply your credentials
 ```
 
 We recommend starting with dbt's Postgres tests. These tests cover most of the functionality in dbt, are the fastest to run, and are the easiest to set up. dbt's test suite runs Postgres in a Docker container, so no setup should be required to run these tests.

7

Makefile

@@ -1,10 +1,5 @@
 .PHONY: install test test-unit test-integration
 
-changed_tests := `git status --porcelain | grep '^\(M\| M\|A\| A\)' | awk '{ print $$2 }' | grep '\/test_[a-zA-Z_\-\.]\+.py'`
-
-install:
-	pip install -e .
-
 test: .env
 	@echo "Full test run starting..."
 	@time docker-compose run --rm test tox
@@ -18,7 +13,7 @@ test-integration: .env
 	@time docker-compose run --rm test tox -e integration-postgres-py36,integration-redshift-py36,integration-snowflake-py36,integration-bigquery-py36
 
 test-quick: .env
-	@echo "Integration test run starting..."
+	@echo "Integration test run starting, will exit on first failure..."
 	@time docker-compose run --rm test tox -e integration-postgres-py36 -- -x
 
 # This rule creates a file named .env that is used by docker-compose for passing
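The `changed_tests` variable removed in the first Makefile hunk chains `git status --porcelain`, `grep`, and `awk` to list modified or added test files. For readers who find the shell pipeline hard to follow, here is an approximate Python translation that works on already-captured porcelain output. The function name and sample paths are hypothetical, and the regular expressions are a best-effort rendering of the `grep` patterns rather than an exact equivalent.

```python
import re

# Lines whose status is modified or added (staged or unstaged).
STATUS_RE = re.compile(r"^(M| M|A| A)")
# Paths with a component like /test_something.py (dot left unescaped, as in the original).
TEST_FILE_RE = re.compile(r"/test_[a-zA-Z_\-.]+.py")


def changed_tests(porcelain_output):
    """Return test file paths from `git status --porcelain` text."""
    paths = []
    for line in porcelain_output.splitlines():
        if not STATUS_RE.match(line):
            continue
        fields = line.split()  # like awk: whitespace-separated fields
        if len(fields) >= 2 and TEST_FILE_RE.search(fields[1]):
            paths.append(fields[1])  # awk's $2
    return paths
```

Untracked files (status `??`) and non-test paths are skipped, matching the intent of the two `grep` stages.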

@@ -1,5 +1,5 @@
 <p align="center">
-<img src="/etc/dbt-logo-full.svg" alt="dbt logo" width="500"/>
+<img src="https://raw.githubusercontent.com/fishtown-analytics/dbt/6c6649f9129d5d108aa3b0526f634cd8f3a9d1ed/etc/dbt-logo-full.svg" alt="dbt logo" width="500"/>
 </p>
 <p align="center">
 <a href="https://codeclimate.com/github/fishtown-analytics/dbt">
@@ -20,7 +20,7 @@
 
 dbt is the T in ELT. Organize, cleanse, denormalize, filter, rename, and pre-aggregate the raw data in your warehouse so that it's ready for analysis.
 
 
 
 dbt can be used to [aggregate pageviews into sessions](https://github.com/fishtown-analytics/snowplow), calculate [ad spend ROI](https://github.com/fishtown-analytics/facebook-ads), or report on [email campaign performance](https://github.com/fishtown-analytics/mailchimp).
 
@@ -30,7 +30,7 @@ Analysts using dbt can transform their data by simply writing select statements,
 
 These select statements, or "models", form a dbt project. Models frequently build on top of one another – dbt makes it easy to [manage relationships](https://docs.getdbt.com/docs/ref) between models, and [visualize these relationships](https://docs.getdbt.com/docs/documentation), as well as assure the quality of your transformations through [testing](https://docs.getdbt.com/docs/testing).
 
 
 
 ## Getting started
 
@@ -51,7 +51,7 @@ These select statements, or "models", form a dbt project. Models frequently buil
 ## Reporting bugs and contributing code
 
 - Want to report a bug or request a feature? Let us know on [Slack](http://community.getdbt.com/), or open [an issue](https://github.com/fishtown-analytics/dbt/issues/new).
-- Want to help us build dbt? Check out the [Contributing Getting Started Guide](/CONTRIBUTING.md)
+- Want to help us build dbt? Check out the [Contributing Getting Started Guide](https://github.com/fishtown-analytics/dbt/blob/HEAD/CONTRIBUTING.md)
 
 ## Code of Conduct
 

92

RELEASE.md

@@ -1,92 +0,0 @@

|

|||||||

### Release Procedure :shipit:

|

|

||||||

|

|

||||||

#### Branching Strategy

|

|

||||||

|

|

||||||

dbt has three types of branches:

|

|

||||||

|

|

||||||

- **Trunks** track the latest release of a minor version of dbt. Historically, we used the `master` branch as the trunk. Each minor version release has a corresponding trunk. For example, the `0.11.x` series of releases has a branch called `0.11.latest`. This allows us to release new patch versions under `0.11` without necessarily needing to pull them into the latest version of dbt.

|

|

||||||

- **Release Branches** track a specific, not yet complete release of dbt. These releases are codenamed since we don't always know what their semantic version will be. Example: `dev/lucretia-mott` became `0.11.1`.

|

|

||||||

- **Feature Branches** track individual features and fixes. On completion they should be merged into a release branch.

|

|

||||||

|

|

||||||

#### Git & PyPI

|

|

||||||

|

|

||||||

1. Update CHANGELOG.md with the most recent changes

|

|

||||||

2. If this is a release candidate, you want to create it off of your release branch. If it's an actual release, you must first merge to a master branch. Open a Pull Request in Github to merge it into the appropriate trunk (`X.X.latest`)

|

|

||||||

3. Bump the version using `bumpversion`:

|

|

||||||

- Dry run first by running `bumpversion --new-version <desired-version> <part>` and checking the diff. If it looks correct, clean up the chanages and move on:

|

|

||||||

- Alpha releases: `bumpversion --commit --no-tag --new-version 0.10.2a1 num`

|

|

||||||

- Patch releases: `bumpversion --commit --no-tag --new-version 0.10.2 patch`

|

|

||||||

- Minor releases: `bumpversion --commit --no-tag --new-version 0.11.0 minor`

|

|

||||||

- Major releases: `bumpversion --commit --no-tag --new-version 1.0.0 major`

|

|

||||||

4. (If this is a not a release candidate) Merge to `x.x.latest` and (optionally) `master`.

|

|

||||||

5. Update the default branch to the next dev release branch.

|

|

||||||

6. Build source distributions for all packages by running `./scripts/build-sdists.sh`. Note that this will clean out your `dist/` folder, so if you have important stuff in there, don't run it!!!

|

|

||||||

7. Deploy to pypi

|

|

||||||

- `twine upload dist/*`

|

|

||||||

8. Deploy to homebrew (see below)

|

|

||||||

9. Deploy to conda-forge (see below)

|

|

||||||

10. Git release notes (points to changelog)

|

|

||||||

11. Post to slack (point to changelog)

|

|

||||||

|

|

||||||

After releasing a new version, it's important to merge the changes back into the other outstanding release branches. This avoids merge conflicts moving forward.

|

|

||||||

|

|

||||||

In some cases, where the branches have diverged wildly, it's ok to skip this step. But this means that the changes you just released won't be included in future releases.

|

|

||||||

|

|

||||||

#### Homebrew Release Process

|

|

||||||

|

|

||||||

1. Clone the `homebrew-dbt` repository:

|

|

||||||

|

|

||||||

```
git clone git@github.com:fishtown-analytics/homebrew-dbt.git
```

|

|

||||||

|

|

||||||

2. For ALL releases (prereleases and version releases), copy the relevant formula. To copy from the latest version release of dbt, do:

|

|

||||||

|

|

||||||

```bash
cp Formula/dbt.rb Formula/dbt@{NEW-VERSION}.rb
```

|

|

||||||

|

|

||||||

To copy from a different version, simply copy the corresponding file.

|

|

||||||

|

|

||||||

3. Open the file, and edit the following:

|

|

||||||

- the name of the Ruby class: this is important, because Homebrew won't function properly if the class name is wrong. Check historical versions to figure out the right name.

|

|

||||||

- under the `bottle` section, remove all of the hashes (lines starting with `sha256`)

|

|

||||||

|

|

||||||

4. Create a **Python 3.7** virtualenv, activate it, and then install two packages: `homebrew-pypi-poet`, and the version of dbt you are preparing. I use:

|

|

||||||

|

|

||||||

```
pyenv virtualenv 3.7.0 homebrew-dbt-{VERSION}
pyenv activate homebrew-dbt-{VERSION}
pip install dbt=={VERSION} homebrew-pypi-poet
```

|

|

||||||

|

|

||||||

homebrew-pypi-poet is a program that generates a valid homebrew formula for an installed pip package. You want to use it to generate a diff against the existing formula. Then you want to apply the diff for the dependency packages only -- e.g. it will tell you that `google-api-core` has been updated and that you need to use the latest version.

|

|

||||||

|

|

||||||

5. Reinstall, test, and audit dbt. If the test or audit fails, fix the formula with step 1.

|

|

||||||

|

|

||||||

```bash
brew uninstall --force Formula/{YOUR-FILE}.rb
brew install Formula/{YOUR-FILE}.rb
brew test dbt
brew audit --strict dbt
```

|

|

||||||

|

|

||||||

6. Ask Connor to bottle the change (only his laptop can do it!)

|

|

||||||

|

|

||||||

#### Conda Forge Release Process

|

|

||||||

|

|

||||||

1. Clone the fork of `conda-forge/dbt-feedstock` [here](https://github.com/fishtown-analytics/dbt-feedstock)

|

|

||||||

```bash
git clone git@github.com:fishtown-analytics/dbt-feedstock.git
```

|

|

||||||

2. Update the version and sha256 in `recipe/meta.yml`. To calculate the sha256, run:

|

|

||||||

|

|

||||||

```bash
wget https://github.com/fishtown-analytics/dbt/archive/v{version}.tar.gz
openssl sha256 v{version}.tar.gz
```

|

|

||||||

|

|

||||||

3. Push the changes and create a PR against `conda-forge/dbt-feedstock`

|

|

||||||

|

|

||||||

4. Confirm that all automated conda-forge tests are passing
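If `openssl` is not available, the sha256 needed for `recipe/meta.yml` in step 2 can also be computed with Python's standard `hashlib` module. This is only a sketch: the function name `sha256_of_file` is hypothetical, and it assumes the release tarball has already been downloaded to a local path.

```python
import hashlib


def sha256_of_file(path: str, chunk_size: int = 65536) -> str:
    """Hypothetical helper: return the hex sha256 digest of a local file."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read in chunks so large tarballs are not loaded into memory at once.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Calling `sha256_of_file("v{version}.tar.gz")` with the real filename substituted should give the same hex digest that `openssl sha256` prints for that file.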

|

|

||||||

@@ -139,7 +139,7 @@ jobs:

|

|||||||

inputs:

|

inputs:

|

||||||

versionSpec: '3.7'

|

versionSpec: '3.7'

|

||||||

architecture: 'x64'

|

architecture: 'x64'

|

||||||

- script: python -m pip install --upgrade pip setuptools && python -m pip install -r requirements.txt && python -m pip install -r dev_requirements.txt

|

- script: python -m pip install --upgrade pip setuptools && python -m pip install -r requirements.txt && python -m pip install -r requirements-dev.txt

|

||||||

displayName: Install dependencies

|

displayName: Install dependencies

|

||||||

- task: ShellScript@2

|

- task: ShellScript@2

|

||||||

inputs:

|

inputs:

|

||||||

|

|||||||

@@ -63,11 +63,13 @@ def main():

|

|||||||

packages = registry.packages()

|

packages = registry.packages()

|

||||||

project_json = init_project_in_packages(args, packages)

|

project_json = init_project_in_packages(args, packages)

|

||||||

if args.project["version"] in project_json["versions"]:

|

if args.project["version"] in project_json["versions"]:

|

||||||

raise Exception("Version {} already in packages JSON"

|

raise Exception(

|

||||||

.format(args.project["version"]),

|

"Version {} already in packages JSON".format(args.project["version"]),

|

||||||

file=sys.stderr)

|

file=sys.stderr,

|

||||||

|

)

|

||||||

add_version_to_package(args, project_json)

|

add_version_to_package(args, project_json)

|

||||||

print(json.dumps(packages, indent=2))

|

print(json.dumps(packages, indent=2))

|

||||||

|

|

||||||

|

|

||||||

if __name__ == "__main__":

|

if __name__ == "__main__":

|

||||||

main()

|

main()

|

||||||

|

|||||||

@@ -1,19 +1,17 @@

|

|||||||

from dataclasses import dataclass

|

from dataclasses import dataclass

|

||||||

import re

|

import re

|

||||||

|

|

||||||

from hologram import JsonSchemaMixin

|

|

||||||

from dbt.exceptions import RuntimeException

|

|

||||||

|

|

||||||

from typing import Dict, ClassVar, Any, Optional

|

from typing import Dict, ClassVar, Any, Optional

|

||||||

|

|

||||||

|

from dbt.exceptions import RuntimeException

|

||||||

|

|

||||||

|

|

||||||

@dataclass

|

@dataclass

|

||||||

class Column(JsonSchemaMixin):

|

class Column:

|

||||||

TYPE_LABELS: ClassVar[Dict[str, str]] = {

|

TYPE_LABELS: ClassVar[Dict[str, str]] = {

|

||||||

'STRING': 'TEXT',

|

"STRING": "TEXT",

|

||||||

'TIMESTAMP': 'TIMESTAMP',

|

"TIMESTAMP": "TIMESTAMP",

|

||||||

'FLOAT': 'FLOAT',

|

"FLOAT": "FLOAT",

|

||||||

'INTEGER': 'INT'

|

"INTEGER": "INT",

|

||||||

}

|

}

|

||||||

column: str

|

column: str

|

||||||

dtype: str

|

dtype: str

|

||||||

@@ -26,7 +24,7 @@ class Column(JsonSchemaMixin):

|

|||||||

return cls.TYPE_LABELS.get(dtype.upper(), dtype)

|

return cls.TYPE_LABELS.get(dtype.upper(), dtype)

|

||||||

|

|

||||||

@classmethod

|

@classmethod

|

||||||

def create(cls, name, label_or_dtype: str) -> 'Column':

|

def create(cls, name, label_or_dtype: str) -> "Column":

|

||||||

column_type = cls.translate_type(label_or_dtype)

|

column_type = cls.translate_type(label_or_dtype)

|

||||||

return cls(name, column_type)

|

return cls(name, column_type)

|

||||||

|

|

||||||

@@ -43,14 +41,19 @@ class Column(JsonSchemaMixin):

|

|||||||

if self.is_string():

|

if self.is_string():

|

||||||

return Column.string_type(self.string_size())

|

return Column.string_type(self.string_size())

|

||||||

elif self.is_numeric():

|

elif self.is_numeric():

|

||||||

return Column.numeric_type(self.dtype, self.numeric_precision,

|

return Column.numeric_type(

|

||||||

self.numeric_scale)

|

self.dtype, self.numeric_precision, self.numeric_scale

|

||||||

|

)

|

||||||

else:

|

else:

|

||||||

return self.dtype

|

return self.dtype

|

||||||

|

|

||||||

def is_string(self) -> bool:

|

def is_string(self) -> bool:

|

||||||

return self.dtype.lower() in ['text', 'character varying', 'character',

|

return self.dtype.lower() in [

|

||||||

'varchar']

|

"text",

|

||||||

|

"character varying",

|

||||||

|

"character",

|

||||||

|

"varchar",

|

||||||

|

]

|

||||||

|

|

||||||

def is_number(self):

|

def is_number(self):

|

||||||

return any([self.is_integer(), self.is_numeric(), self.is_float()])

|

return any([self.is_integer(), self.is_numeric(), self.is_float()])

|

||||||

@@ -58,33 +61,45 @@ class Column(JsonSchemaMixin):

|

|||||||

def is_float(self):

|

def is_float(self):

|

||||||

return self.dtype.lower() in [

|

return self.dtype.lower() in [

|

||||||

# floats

|

# floats

|

||||||

'real', 'float4', 'float', 'double precision', 'float8'

|

"real",

|

||||||

|

"float4",

|

||||||

|

"float",

|

||||||

|

"double precision",

|

||||||

|

"float8",

|

||||||

]

|

]

|

||||||

|

|

||||||

def is_integer(self) -> bool:

|

def is_integer(self) -> bool:

|

||||||

return self.dtype.lower() in [

|

return self.dtype.lower() in [

|

||||||

# real types

|

# real types

|

||||||

'smallint', 'integer', 'bigint',

|

"smallint",

|

||||||

'smallserial', 'serial', 'bigserial',

|

"integer",

|

||||||

|

"bigint",

|

||||||

|

"smallserial",

|

||||||

|

"serial",

|

||||||

|

"bigserial",

|

||||||

# aliases

|

# aliases

|

||||||

'int2', 'int4', 'int8',

|

"int2",

|

||||||

'serial2', 'serial4', 'serial8',

|

"int4",

|

||||||

|

"int8",

|

||||||

|

"serial2",

|

||||||

|

"serial4",

|

||||||

|

"serial8",

|

||||||

]

|

]

|

||||||

|

|

||||||

def is_numeric(self) -> bool:

|

def is_numeric(self) -> bool:

|

||||||

return self.dtype.lower() in ['numeric', 'decimal']

|

return self.dtype.lower() in ["numeric", "decimal"]

|

||||||

|

|

||||||

def string_size(self) -> int:

|

def string_size(self) -> int:

|

||||||

if not self.is_string():

|

if not self.is_string():

|

||||||

raise RuntimeException("Called string_size() on non-string field!")

|

raise RuntimeException("Called string_size() on non-string field!")

|

||||||

|

|

||||||

if self.dtype == 'text' or self.char_size is None:

|

if self.dtype == "text" or self.char_size is None:

|

||||||

# char_size should never be None. Handle it reasonably just in case

|

# char_size should never be None. Handle it reasonably just in case

|

||||||

return 256

|

return 256

|

||||||

else:

|

else:

|

||||||

return int(self.char_size)

|

return int(self.char_size)

|

||||||

|

|

||||||

def can_expand_to(self, other_column: 'Column') -> bool:

|

def can_expand_to(self, other_column: "Column") -> bool:

|

||||||

"""returns True if this column can be expanded to the size of the

|

"""returns True if this column can be expanded to the size of the

|

||||||

other column"""

|

other column"""

|

||||||

if not self.is_string() or not other_column.is_string():

|

if not self.is_string() or not other_column.is_string():

|

||||||

@@ -112,12 +127,10 @@ class Column(JsonSchemaMixin):

|

|||||||

return "<Column {} ({})>".format(self.name, self.data_type)

|

return "<Column {} ({})>".format(self.name, self.data_type)

|

||||||

|

|

||||||

@classmethod

|

@classmethod

|

||||||

def from_description(cls, name: str, raw_data_type: str) -> 'Column':

|

def from_description(cls, name: str, raw_data_type: str) -> "Column":

|

||||||

match = re.match(r'([^(]+)(\([^)]+\))?', raw_data_type)

|

match = re.match(r"([^(]+)(\([^)]+\))?", raw_data_type)

|

||||||

if match is None:

|

if match is None:

|

||||||

raise RuntimeException(

|

raise RuntimeException(f'Could not interpret data type "{raw_data_type}"')

|

||||||

f'Could not interpret data type "{raw_data_type}"'

|

|

||||||

)

|

|

||||||

data_type, size_info = match.groups()

|

data_type, size_info = match.groups()

|

||||||

char_size = None

|

char_size = None

|

||||||

numeric_precision = None

|

numeric_precision = None

|

||||||

@@ -125,7 +138,7 @@ class Column(JsonSchemaMixin):

|

|||||||

if size_info is not None:

|

if size_info is not None:

|

||||||

# strip out the parentheses

|

# strip out the parentheses

|

||||||

size_info = size_info[1:-1]

|

size_info = size_info[1:-1]

|

||||||

parts = size_info.split(',')

|

parts = size_info.split(",")

|

||||||

if len(parts) == 1:

|

if len(parts) == 1:

|

||||||

try:

|

try:

|

||||||

char_size = int(parts[0])

|

char_size = int(parts[0])

|

||||||

@@ -150,6 +163,4 @@ class Column(JsonSchemaMixin):

|

|||||||

f'could not convert "{parts[1]}" to an integer'

|

f'could not convert "{parts[1]}" to an integer'

|

||||||

)

|

)

|

||||||

|

|

||||||

return cls(

|

return cls(name, data_type, char_size, numeric_precision, numeric_scale)

|

||||||

name, data_type, char_size, numeric_precision, numeric_scale

|

|

||||||

)

|

|

||||||

|

|||||||

@@ -1,18 +1,21 @@

|

|||||||

import abc

|

import abc

|

||||||

import os

|

import os

|

||||||

|

|

||||||

# multiprocessing.RLock is a function returning this type

|

# multiprocessing.RLock is a function returning this type

|

||||||

from multiprocessing.synchronize import RLock

|

from multiprocessing.synchronize import RLock

|

||||||

from threading import get_ident

|

from threading import get_ident

|

||||||

from typing import (

|

from typing import Dict, Tuple, Hashable, Optional, ContextManager, List, Union

|

||||||

Dict, Tuple, Hashable, Optional, ContextManager, List, Union

|

|

||||||

)

|

|

||||||

|

|

||||||

import agate

|

import agate

|

||||||

|

|

||||||

import dbt.exceptions

|

import dbt.exceptions

|

||||||

from dbt.contracts.connection import (

|

from dbt.contracts.connection import (

|

||||||

Connection, Identifier, ConnectionState,

|

Connection,

|

||||||

AdapterRequiredConfig, LazyHandle, AdapterResponse

|

Identifier,

|

||||||

|

ConnectionState,

|

||||||

|

AdapterRequiredConfig,

|

||||||

|

LazyHandle,

|

||||||

|

AdapterResponse,

|

||||||

)

|

)

|

||||||

from dbt.contracts.graph.manifest import Manifest

|

from dbt.contracts.graph.manifest import Manifest

|

||||||

from dbt.adapters.base.query_headers import (

|

from dbt.adapters.base.query_headers import (

|

||||||

@@ -35,6 +38,7 @@ class BaseConnectionManager(metaclass=abc.ABCMeta):

|

|||||||

You must also set the 'TYPE' class attribute with a class-unique constant

|

You must also set the 'TYPE' class attribute with a class-unique constant

|

||||||

string.

|

string.

|

||||||

"""

|

"""

|

||||||

|

|

||||||

TYPE: str = NotImplemented

|

TYPE: str = NotImplemented

|

||||||

|

|

||||||

def __init__(self, profile: AdapterRequiredConfig):

|

def __init__(self, profile: AdapterRequiredConfig):

|

||||||

@@ -65,7 +69,7 @@ class BaseConnectionManager(metaclass=abc.ABCMeta):

|

|||||||

key = self.get_thread_identifier()

|

key = self.get_thread_identifier()

|

||||||

if key in self.thread_connections:

|

if key in self.thread_connections:

|

||||||

raise dbt.exceptions.InternalException(

|

raise dbt.exceptions.InternalException(

|

||||||

'In set_thread_connection, existing connection exists for {}'

|

"In set_thread_connection, existing connection exists for {}"

|

||||||

)

|

)

|

||||||

self.thread_connections[key] = conn

|

self.thread_connections[key] = conn

|

||||||

|

|

||||||

@@ -105,18 +109,19 @@ class BaseConnectionManager(metaclass=abc.ABCMeta):

|

|||||||

underlying database.

|

underlying database.

|

||||||

"""

|

"""

|

||||||

raise dbt.exceptions.NotImplementedException(

|

raise dbt.exceptions.NotImplementedException(

|

||||||

'`exception_handler` is not implemented for this adapter!')

|

"`exception_handler` is not implemented for this adapter!"

|

||||||

|

)

|

||||||

|

|

||||||

def set_connection_name(self, name: Optional[str] = None) -> Connection:

|

def set_connection_name(self, name: Optional[str] = None) -> Connection:

|

||||||

conn_name: str

|

conn_name: str

|

||||||

if name is None:

|

if name is None:

|

||||||

# if a name isn't specified, we'll re-use a single handle

|

# if a name isn't specified, we'll re-use a single handle

|

||||||

# named 'master'

|

# named 'master'

|

||||||

conn_name = 'master'

|

conn_name = "master"

|

||||||

else:

|

else:

|

||||||

if not isinstance(name, str):

|

if not isinstance(name, str):

|

||||||

raise dbt.exceptions.CompilerException(

|

raise dbt.exceptions.CompilerException(

|

||||||

f'For connection name, got {name} - not a string!'

|

f"For connection name, got {name} - not a string!"

|

||||||

)

|

)

|

||||||

assert isinstance(name, str)

|

assert isinstance(name, str)

|

||||||

conn_name = name

|

conn_name = name

|

||||||

@@ -129,20 +134,20 @@ class BaseConnectionManager(metaclass=abc.ABCMeta):

|

|||||||

state=ConnectionState.INIT,

|

state=ConnectionState.INIT,

|

||||||

transaction_open=False,

|

transaction_open=False,

|

||||||

handle=None,

|

handle=None,

|

||||||

credentials=self.profile.credentials

|

credentials=self.profile.credentials,

|

||||||

)

|

)

|

||||||

self.set_thread_connection(conn)

|

self.set_thread_connection(conn)

|

||||||

|

|

||||||

if conn.name == conn_name and conn.state == 'open':

|

if conn.name == conn_name and conn.state == "open":

|

||||||

return conn

|

return conn

|

||||||

|

|

||||||

logger.debug(

|

logger.debug('Acquiring new {} connection "{}".'.format(self.TYPE, conn_name))

|

||||||

'Acquiring new {} connection "{}".'.format(self.TYPE, conn_name))

|

|

||||||

|

|

||||||

if conn.state == 'open':

|

if conn.state == "open":

|

||||||

logger.debug(

|

logger.debug(

|

||||||

'Re-using an available connection from the pool (formerly {}).'

|

"Re-using an available connection from the pool (formerly {}).".format(

|

||||||

.format(conn.name)

|

conn.name

|

||||||

|

)

|

||||||

)

|

)

|

||||||

else:

|

else:

|

||||||

conn.handle = LazyHandle(self.open)

|

conn.handle = LazyHandle(self.open)

|

||||||

@@ -154,7 +159,7 @@ class BaseConnectionManager(metaclass=abc.ABCMeta):

|

|||||||

def cancel_open(self) -> Optional[List[str]]:

|

def cancel_open(self) -> Optional[List[str]]:

|

||||||

"""Cancel all open connections on the adapter. (passable)"""

|

"""Cancel all open connections on the adapter. (passable)"""

|

||||||

raise dbt.exceptions.NotImplementedException(

|

raise dbt.exceptions.NotImplementedException(

|

||||||

'`cancel_open` is not implemented for this adapter!'

|

"`cancel_open` is not implemented for this adapter!"

|

||||||

)

|

)

|

||||||

|

|

||||||

@abc.abstractclassmethod

|

@abc.abstractclassmethod

|

||||||

@@ -168,7 +173,7 @@ class BaseConnectionManager(metaclass=abc.ABCMeta):

|

|||||||

connection should not be in either in_use or available.

|

connection should not be in either in_use or available.

|

||||||

"""

|

"""

|

||||||

raise dbt.exceptions.NotImplementedException(

|

raise dbt.exceptions.NotImplementedException(

|

||||||

'`open` is not implemented for this adapter!'

|

"`open` is not implemented for this adapter!"

|

||||||

)

|

)

|

||||||

|

|

||||||

def release(self) -> None:

|

def release(self) -> None:

|

||||||

@@ -189,12 +194,14 @@ class BaseConnectionManager(metaclass=abc.ABCMeta):

|

|||||||

def cleanup_all(self) -> None:

|

def cleanup_all(self) -> None:

|

||||||

with self.lock:

|

with self.lock:

|

||||||

for connection in self.thread_connections.values():

|

for connection in self.thread_connections.values():

|

||||||

if connection.state not in {'closed', 'init'}:

|

if connection.state not in {"closed", "init"}:

|

||||||

logger.debug("Connection '{}' was left open."

|

logger.debug(

|

||||||

.format(connection.name))

|

"Connection '{}' was left open.".format(connection.name)

|

||||||

|

)

|

||||||

else:

|

else:

|

||||||

logger.debug("Connection '{}' was properly closed."

|

logger.debug(

|

||||||

.format(connection.name))

|

"Connection '{}' was properly closed.".format(connection.name)

|

||||||

|

)

|

||||||

self.close(connection)

|

self.close(connection)

|

||||||

|

|

||||||

# garbage collect these connections

|

# garbage collect these connections

|

||||||

@@ -204,14 +211,14 @@ class BaseConnectionManager(metaclass=abc.ABCMeta):

|

|||||||

def begin(self) -> None:

|

def begin(self) -> None:

|

||||||

"""Begin a transaction. (passable)"""

|

"""Begin a transaction. (passable)"""

|

||||||

raise dbt.exceptions.NotImplementedException(

|

raise dbt.exceptions.NotImplementedException(

|

||||||

'`begin` is not implemented for this adapter!'

|

"`begin` is not implemented for this adapter!"

|

||||||

)

|

)

|

||||||

|

|

||||||

@abc.abstractmethod

|

@abc.abstractmethod

|

||||||

def commit(self) -> None:

|

def commit(self) -> None:

|

||||||

"""Commit a transaction. (passable)"""

|

"""Commit a transaction. (passable)"""

|

||||||

raise dbt.exceptions.NotImplementedException(

|

raise dbt.exceptions.NotImplementedException(

|

||||||

'`commit` is not implemented for this adapter!'

|

"`commit` is not implemented for this adapter!"

|

||||||

)

|

)

|

||||||

|

|

||||||

@classmethod

|

@classmethod

|

||||||

@@ -220,20 +227,17 @@ class BaseConnectionManager(metaclass=abc.ABCMeta):

|

|||||||

try:

|

try:

|

||||||

connection.handle.rollback()

|

connection.handle.rollback()

|

||||||

except Exception:

|

except Exception:

|

||||||

logger.debug(

|

logger.debug("Failed to rollback {}".format(connection.name), exc_info=True)

|

||||||

'Failed to rollback {}'.format(connection.name),

|

|

||||||

exc_info=True

|

|

||||||

)

|

|

||||||

|

|

||||||

@classmethod

|

@classmethod

|

||||||

def _close_handle(cls, connection: Connection) -> None:

|

def _close_handle(cls, connection: Connection) -> None:

|

||||||

"""Perform the actual close operation."""

|

"""Perform the actual close operation."""

|

||||||

# On windows, sometimes connection handles don't have a close() attr.

|

# On windows, sometimes connection handles don't have a close() attr.

|

||||||

if hasattr(connection.handle, 'close'):

|

if hasattr(connection.handle, "close"):

|

||||||

logger.debug(f'On {connection.name}: Close')

|

logger.debug(f"On {connection.name}: Close")

|

||||||

connection.handle.close()

|

connection.handle.close()

|

||||||

else:

|

else:

|

||||||

logger.debug(f'On {connection.name}: No close available on handle')

|

logger.debug(f"On {connection.name}: No close available on handle")

|

||||||

|

|

||||||

@classmethod

|

@classmethod

|

||||||

def _rollback(cls, connection: Connection) -> None:

|

def _rollback(cls, connection: Connection) -> None:

|

||||||

@@ -241,16 +245,16 @@ class BaseConnectionManager(metaclass=abc.ABCMeta):

|

|||||||

if flags.STRICT_MODE:

|

if flags.STRICT_MODE:

|

||||||

if not isinstance(connection, Connection):

|

if not isinstance(connection, Connection):

|

||||||

raise dbt.exceptions.CompilerException(

|

raise dbt.exceptions.CompilerException(

|

||||||

f'In _rollback, got {connection} - not a Connection!'

|

f"In _rollback, got {connection} - not a Connection!"

|

||||||

)

|

)

|

||||||

|

|

||||||

if connection.transaction_open is False:

|

if connection.transaction_open is False:

|

||||||

raise dbt.exceptions.InternalException(

|

raise dbt.exceptions.InternalException(

|

||||||

f'Tried to rollback transaction on connection '

|

f"Tried to rollback transaction on connection "

|

||||||

f'"{connection.name}", but it does not have one open!'

|

f'"{connection.name}", but it does not have one open!'

|

||||||

)

|

)

|

||||||

|

|

||||||

logger.debug(f'On {connection.name}: ROLLBACK')

|

logger.debug(f"On {connection.name}: ROLLBACK")

|

||||||

cls._rollback_handle(connection)

|

cls._rollback_handle(connection)

|

||||||

|

|

||||||

connection.transaction_open = False

|

connection.transaction_open = False

|

||||||

@@ -260,7 +264,7 @@ class BaseConnectionManager(metaclass=abc.ABCMeta):

|

|||||||

if flags.STRICT_MODE:

|

if flags.STRICT_MODE:

|

||||||

if not isinstance(connection, Connection):

|

if not isinstance(connection, Connection):

|

||||||

raise dbt.exceptions.CompilerException(

|

raise dbt.exceptions.CompilerException(

|

||||||

f'In close, got {connection} - not a Connection!'

|

f"In close, got {connection} - not a Connection!"

|

||||||

)

|

)

|

||||||

|

|

||||||

# if the connection is in closed or init, there's nothing to do

|

# if the connection is in closed or init, there's nothing to do

|

||||||

@@ -268,7 +272,7 @@ class BaseConnectionManager(metaclass=abc.ABCMeta):

|

|||||||

return connection

|

return connection

|

||||||

|

|

||||||

if connection.transaction_open and connection.handle:

|

if connection.transaction_open and connection.handle:

|

||||||

logger.debug('On {}: ROLLBACK'.format(connection.name))

|

logger.debug("On {}: ROLLBACK".format(connection.name))

|

||||||

cls._rollback_handle(connection)

|

cls._rollback_handle(connection)

|

||||||

connection.transaction_open = False

|

connection.transaction_open = False

|

||||||

|

|

||||||

@@ -302,5 +306,5 @@ class BaseConnectionManager(metaclass=abc.ABCMeta):

|

|||||||

:rtype: Tuple[Union[str, AdapterResponse], agate.Table]

|

:rtype: Tuple[Union[str, AdapterResponse], agate.Table]

|

||||||

"""

|

"""

|

||||||

raise dbt.exceptions.NotImplementedException(

|

raise dbt.exceptions.NotImplementedException(

|

||||||

'`execute` is not implemented for this adapter!'

|

"`execute` is not implemented for this adapter!"

|

||||||

)

|

)

|

||||||

|

|||||||

@@ -4,17 +4,31 @@ from contextlib import contextmanager

|

|||||||

from datetime import datetime

|

from datetime import datetime

|

||||||

from itertools import chain

|

from itertools import chain

|

||||||

from typing import (

|

from typing import (

|

||||||

Optional, Tuple, Callable, Iterable, Type, Dict, Any, List, Mapping,

|

Optional,

|

||||||

Iterator, Union, Set

|

Tuple,

|

||||||

|

Callable,

|

||||||

|

Iterable,

|

||||||

|

Type,

|

||||||

|

Dict,

|

||||||

|

Any,

|

||||||

|

List,

|

||||||

|

Mapping,

|

||||||

|

Iterator,

|

||||||

|

Union,

|

||||||

|

Set,

|

||||||

)

|

)

|

||||||

|

|

||||||

import agate

|

import agate

|

||||||

import pytz

|

import pytz

|

||||||

|

|

||||||

from dbt.exceptions import (

|

from dbt.exceptions import (

|

||||||

raise_database_error, raise_compiler_error, invalid_type_error,

|

raise_database_error,

|

||||||

|

raise_compiler_error,

|

||||||

|

invalid_type_error,

|

||||||

get_relation_returned_multiple_results,

|

get_relation_returned_multiple_results,

|

||||||

InternalException, NotImplementedException, RuntimeException,

|

InternalException,

|

||||||

|

NotImplementedException,

|

||||||

|

RuntimeException,

|

||||||

)

|

)

|

||||||

from dbt import flags

|

from dbt import flags

|

||||||

|

|

||||||

@@ -25,10 +39,8 @@ from dbt.adapters.protocol import (

|

|||||||

)

|

)

|

||||||

from dbt.clients.agate_helper import empty_table, merge_tables, table_from_rows

|

from dbt.clients.agate_helper import empty_table, merge_tables, table_from_rows

|

||||||

from dbt.clients.jinja import MacroGenerator

|

from dbt.clients.jinja import MacroGenerator

|

||||||

from dbt.contracts.graph.compiled import (

|

from dbt.contracts.graph.compiled import CompileResultNode, CompiledSeedNode

|

||||||

CompileResultNode, CompiledSeedNode

|

from dbt.contracts.graph.manifest import Manifest, MacroManifest

|

||||||

)

|

|

||||||

from dbt.contracts.graph.manifest import Manifest

|

|

||||||

from dbt.contracts.graph.parsed import ParsedSeedNode

|

from dbt.contracts.graph.parsed import ParsedSeedNode

|

||||||

from dbt.exceptions import warn_or_error

|

from dbt.exceptions import warn_or_error

|

||||||

from dbt.node_types import NodeType

|

from dbt.node_types import NodeType

|

||||||

@@ -38,7 +50,10 @@ from dbt.utils import filter_null_values, executor

|

|||||||

from dbt.adapters.base.connections import Connection, AdapterResponse

|

from dbt.adapters.base.connections import Connection, AdapterResponse

|

||||||

from dbt.adapters.base.meta import AdapterMeta, available

|

from dbt.adapters.base.meta import AdapterMeta, available

|

||||||

from dbt.adapters.base.relation import (

|

from dbt.adapters.base.relation import (

|

||||||

ComponentName, BaseRelation, InformationSchema, SchemaSearchMap

|

ComponentName,

|

||||||

|

BaseRelation,

|

||||||

|

InformationSchema,

|

||||||

|

SchemaSearchMap,

|

||||||

)

|

)

|

||||||

from dbt.adapters.base import Column as BaseColumn

|

from dbt.adapters.base import Column as BaseColumn

|

||||||

from dbt.adapters.cache import RelationsCache

|

from dbt.adapters.cache import RelationsCache

|

||||||

@@ -47,15 +62,14 @@ from dbt.adapters.cache import RelationsCache

|

|||||||

SeedModel = Union[ParsedSeedNode, CompiledSeedNode]

|

SeedModel = Union[ParsedSeedNode, CompiledSeedNode]

|

||||||

|

|

||||||

|

|

||||||

GET_CATALOG_MACRO_NAME = 'get_catalog'

|

GET_CATALOG_MACRO_NAME = "get_catalog"

|